In the world of Kubernetes, networking is an important topic. It’s not just because applications running in Kubernetes need access to resources outside the cluster to operate, but also because Kubernetes networking gives you flexibility and helps drive the adoption of loosely coupled service architectures.

In this article, we’ll introduce you to the basics of Kubernetes networking. We’ll talk about how loosely coupled services can work together to create large, advanced applications, how these same services can communicate with external services, and how external services can communicate with our services.

Basic networking terminology

Before we can start talking about Kubernetes, we need to define a few networking terms. In nearly all networked systems, there are a few fundamental ways in which one system talks to another system.

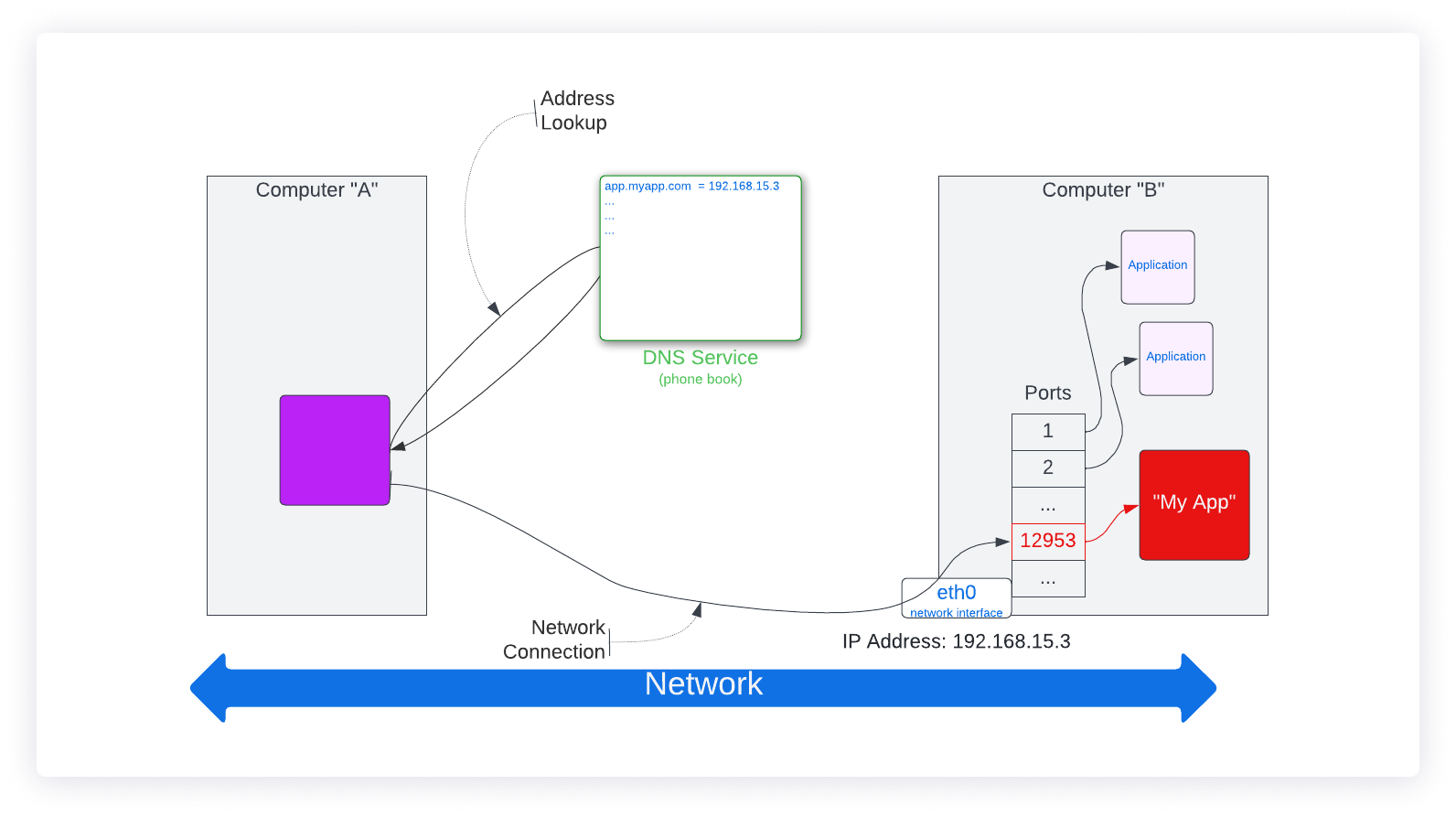

In Figure 1, Computer “A” on the left is talking to an application in Computer “B” on the right. In order for that communication to occur, several things must happen.

First, Computer “A” needs to find where the application is located, using two pieces of information:

- Hostname. This is the unique name given to Computer “B.” In this example, it is app.myapp.com.

- Port number. This is a unique address on Computer “B” that specifies which application to talk to on that computer. In this example, the port number is “12953.”

To locate the desired application, Computer “A” first translates the hostname into Computer “B”’s IP address, which is the address the underlying network protocols actually use to reach Computer “B.” It does this by querying a service called DNS, or Domain Name System, which maps the hostname to the computer’s IP address. This is much like using a phone book to look up a person’s phone number by name.
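The lookup step can be sketched as a simple table from hostnames to addresses. This is a toy model, not a real resolver: the IP address 203.0.113.10 is a hypothetical value from a range reserved for documentation, and a real program would instead ask the operating system’s resolver (in Python, via `socket.getaddrinfo`).

```python
# A toy stand-in for DNS: hostnames mapped to IP addresses.
# The hostname comes from Figure 1; the IP address is hypothetical
# (203.0.113.0/24 is reserved for documentation examples).
DNS_TABLE = {
    "app.myapp.com": "203.0.113.10",
}


def resolve(hostname: str) -> str:
    """Look up a hostname the way a DNS resolver would (greatly simplified)."""
    try:
        return DNS_TABLE[hostname]
    except KeyError:
        raise LookupError(f"no DNS record for {hostname}") from None


def endpoint(hostname: str, port: int) -> tuple[str, int]:
    """Combine the resolved IP address with the application's port number."""
    return (resolve(hostname), port)


print(endpoint("app.myapp.com", 12953))  # ('203.0.113.10', 12953)
```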

Once Computer “A” knows the IP address of Computer “B,” it can send a message to that IP address. The IP address is associated with a particular network interface on Computer “B.” The message is also addressed to a particular port number, which identifies the desired application. Each application on the computer is bound to a network interface/port number pair, so every message arriving on a particular network interface (identified by the IP address) and port number is delivered to that application.
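As a minimal sketch of this delivery step, the snippet below models Computer “B” as a table keyed by (IP address, port) pairs. The addresses, handlers, and function names are hypothetical; a real operating system performs this lookup inside its network stack for sockets that applications have bound with `bind()`.

```python
from typing import Callable

# Hypothetical model of Computer "B": each (ip, port) pair is bound
# to exactly one application handler.
Handler = Callable[[bytes], str]

bindings: dict[tuple[str, int], Handler] = {}


def bind(ip: str, port: int, handler: Handler) -> None:
    """Reserve an interface/port pair for one application."""
    key = (ip, port)
    if key in bindings:
        raise OSError(f"address already in use: {ip}:{port}")
    bindings[key] = handler


def deliver(dst_ip: str, dst_port: int, payload: bytes) -> str:
    """Route an incoming message to the application bound to (ip, port)."""
    handler = bindings.get((dst_ip, dst_port))
    if handler is None:
        return "connection refused"  # nothing is listening on that pair
    return handler(payload)


bind("203.0.113.10", 12953, lambda p: "app got " + p.decode())
bind("203.0.113.10", 80, lambda p: "web server got " + p.decode())

print(deliver("203.0.113.10", 12953, b"hello"))  # app got hello
print(deliver("203.0.113.10", 25, b"hello"))     # connection refused
```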

Many port numbers are standardized across common applications so that it’s easier to find the desired application. For example, port 80 is for the “HTTP service,” which processes web page requests. Port 21 is for the “FTP service” for file transfer. Port 25 is a standard port for the “SMTP service,” used to send emails.

A computer can have multiple network interfaces (for instance, a cell phone typically has one for WiFi networking and one for cellular networking). It can also have one or more virtual network interfaces. This allows a single computer to have many IP addresses associated with it.

This is the basis for all networking between applications and between users and applications on the internet today.

Basic Kubernetes cluster

Things get a bit more involved when you are talking about Kubernetes clusters. Let’s take a look at the basic building blocks of a Kubernetes cluster.

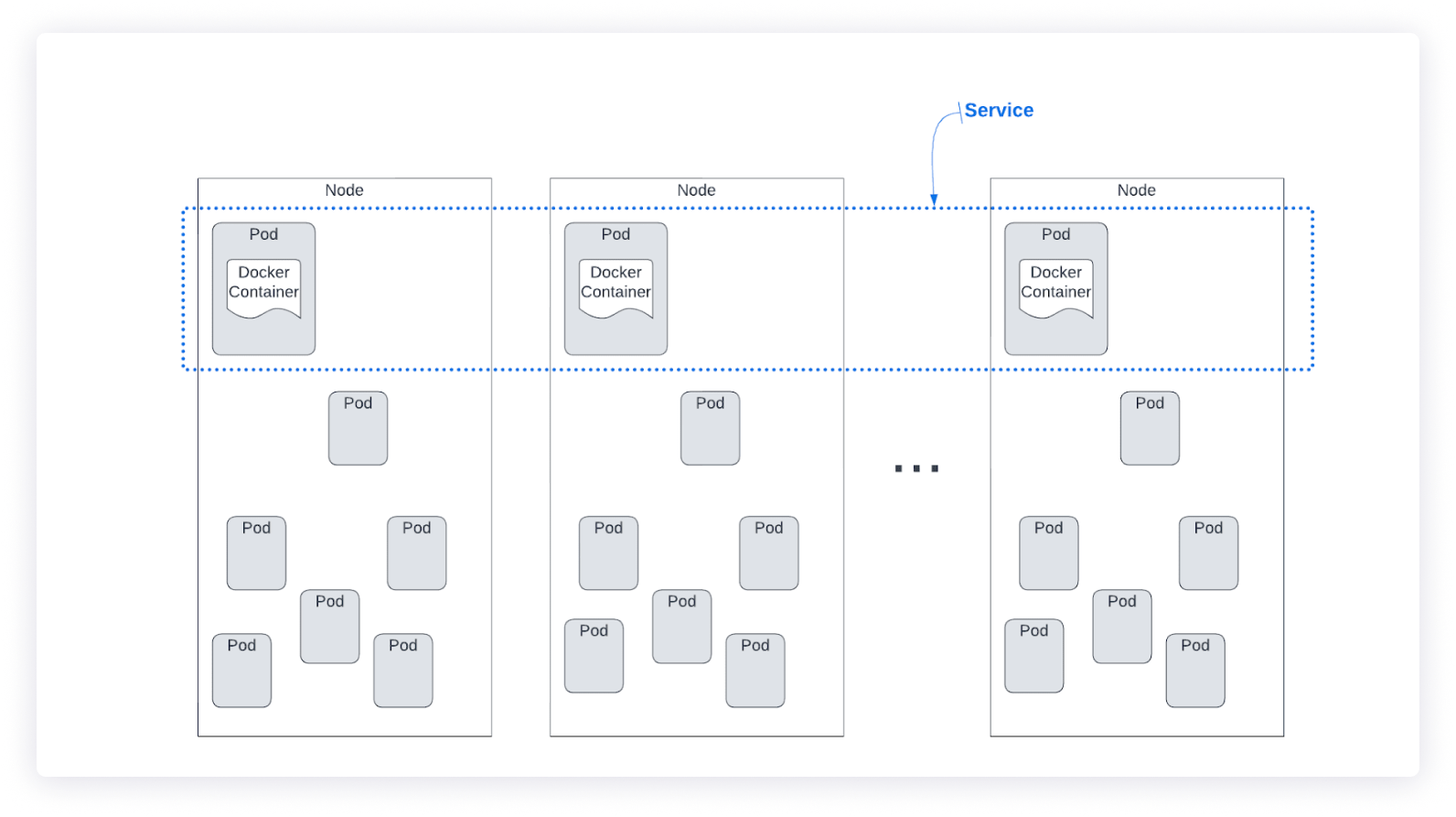

Our applications and application services run in containers, such as those created using Docker. These containers run inside a Kubernetes object known as a pod. A pod is the smallest deployable unit in Kubernetes. A pod typically contains a single container (although it can contain several containers that work together) and performs the work of a single service instance.

An application typically does not run a single instance of a particular service; it runs many instances to distribute the workload and provide higher availability. In a Kubernetes system, this means there will typically be many pods running simultaneously for the same application. A Kubernetes service groups these pods together: it is a set of deployed pods, all performing the same function, and it distributes the workload it receives across all of them.
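Under the hood, a Kubernetes service decides which pods belong to it by matching labels attached to the pods against the service’s selector. The sketch below models only that matching rule; the pod names and `app` label values are hypothetical, and in a real cluster membership is typically also limited to pods that are ready to serve traffic.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    labels: dict[str, str] = field(default_factory=dict)


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """True if every key/value pair in the selector appears in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def service_members(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """Names of the pods a service with this selector would front."""
    return [p.name for p in pods if matches(selector, p.labels)]


# Hypothetical pods: two belong to service "abc", one to service "xyz".
pods = [
    Pod("abc-1", {"app": "abc"}),
    Pod("abc-2", {"app": "abc"}),
    Pod("xyz-1", {"app": "xyz"}),
]
print(service_members({"app": "abc"}, pods))  # ['abc-1', 'abc-2']
```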

Each pod needs to run on some form of computer instance. In Kubernetes, these computer instances are called nodes. A Kubernetes node may be a physical server or a virtual machine. Typically, the pods of a single service are distributed across multiple nodes to increase availability. That way, if a node crashes and the pods on that node terminate, the service can keep handling its workload using pods on other nodes.
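A minimal sketch of the spreading idea, assuming a naive rule of “place each new pod on the node with the fewest pods.” The real Kubernetes scheduler weighs many more factors (resource requests, affinity rules, topology spread constraints), so treat this only as an illustration of why spreading preserves availability. Node and pod names are hypothetical.

```python
def spread(replicas: int, nodes: list[str]) -> dict[str, str]:
    """Assign each replica to the node currently running the fewest pods."""
    load = {n: 0 for n in nodes}
    placement: dict[str, str] = {}
    for i in range(replicas):
        # min() returns the first least-loaded node, so ties break in list order
        node = min(nodes, key=lambda n: load[n])
        placement[f"pod-{i}"] = node
        load[node] += 1
    return placement


def survivors(placement: dict[str, str], failed_node: str) -> list[str]:
    """Pods that keep serving traffic after one node crashes."""
    return [pod for pod, node in placement.items() if node != failed_node]


placement = spread(6, ["node-1", "node-2", "node-3"])
print(placement)
print(survivors(placement, "node-2"))  # four of the six pods remain
```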

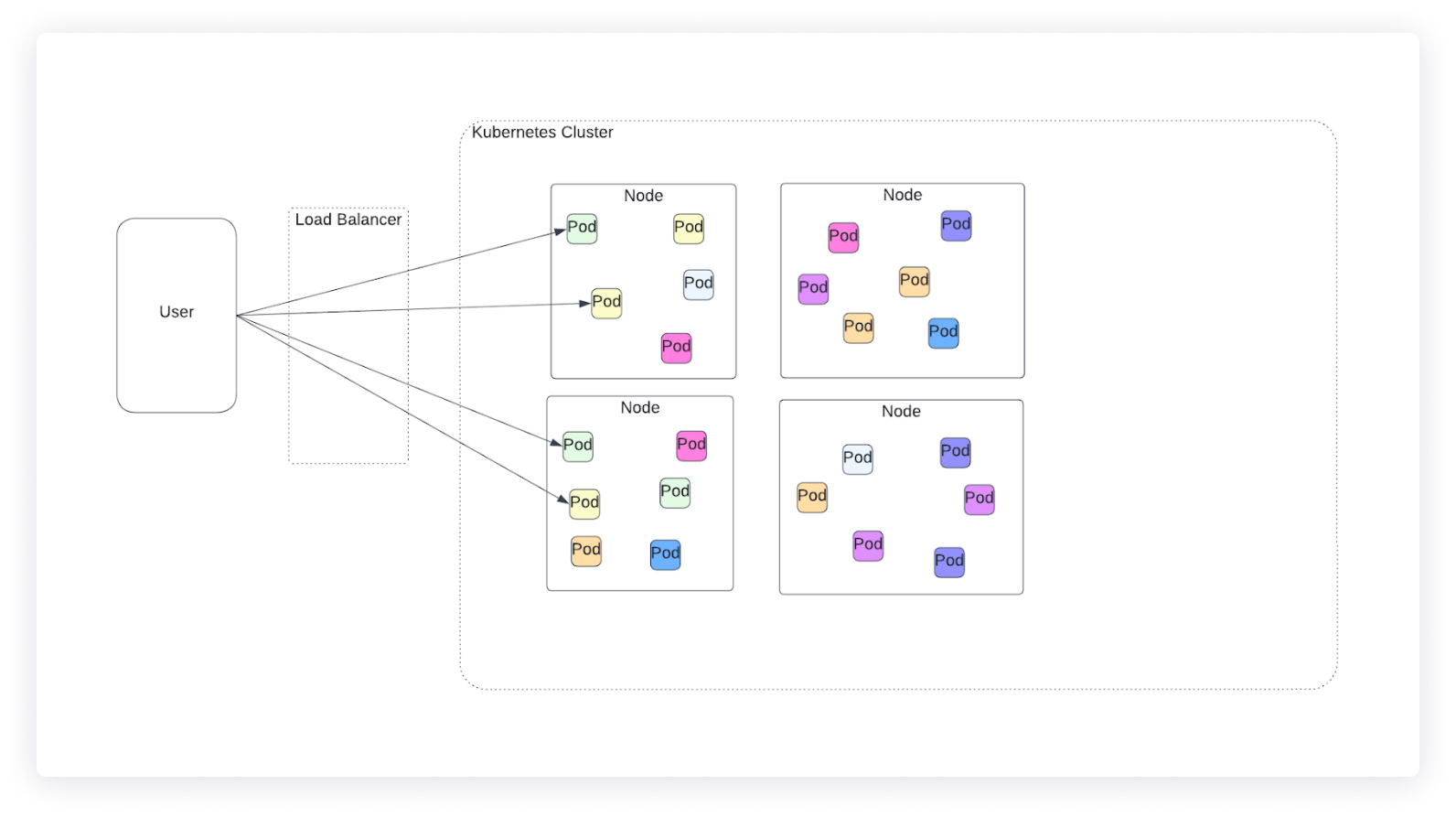

Together, all the nodes running all the pods in a Kubernetes system create a Kubernetes cluster. Figure 2 shows the components that make up a single cluster.

Talking between pods

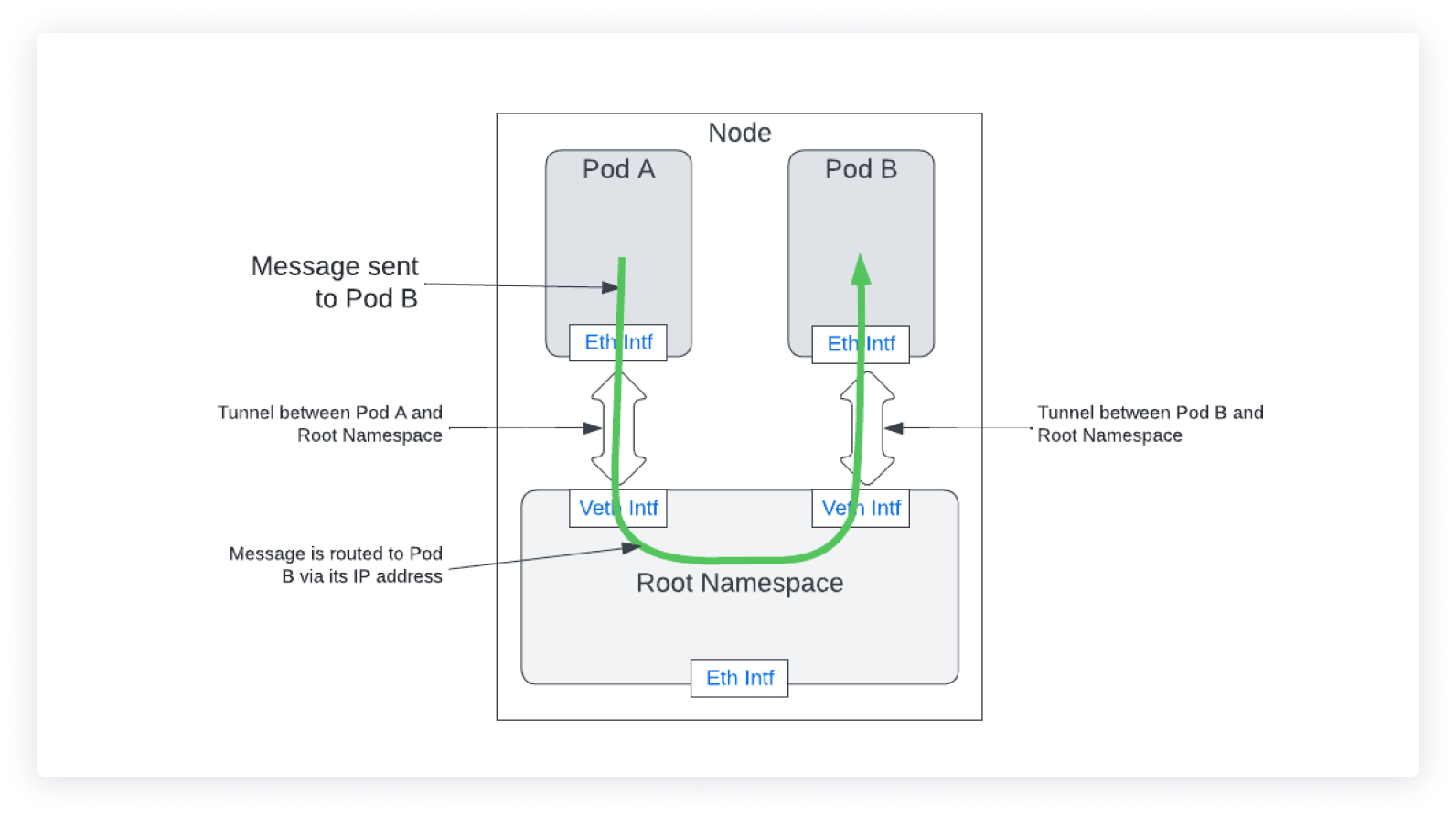

We mentioned network interfaces previously. A single computer can split its processes into multiple isolated network namespaces, each with its own network interfaces. Processes in different namespaces can’t talk to each other directly; they must go through those interfaces. In a Kubernetes cluster, each pod is given its own network namespace, so to talk to the code running in the pod, you need to go through the pod’s assigned ethernet interface.

The node itself also has a namespace, called the root namespace. The node sets up a network tunnel between each pod and the root namespace (typically a virtual ethernet, or veth, pair). Because every pod has a tunnel to the root namespace, pods can talk to each other by sending messages through their own tunnel into the root namespace, which passes them on through the other pod’s tunnel. This is illustrated in Figure 3.

Each node is assigned a unique range of IP addresses, called a CIDR block, and each pod on the node receives a unique IP address from that range. That way, the root namespace can route a message sent from one pod to the correct destination pod using the message’s destination IP address. This is exactly how messages travel from one computer to another in regular networking; here, the messages go from one pod to another.
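Python’s standard `ipaddress` module makes this addressing scheme easy to sketch. The cluster-wide range 10.244.0.0/16 and the /24 block size per node are assumptions chosen for illustration; in practice the ranges are configured for each cluster, and the network plugin’s address manager may reserve some addresses (for example, one for a bridge on the node).

```python
import ipaddress

# Hypothetical cluster-wide range from which node blocks are carved.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")


def allocate_node_cidrs(nodes: list[str], prefixlen: int = 24) -> dict[str, ipaddress.IPv4Network]:
    """Give each node its own non-overlapping CIDR block."""
    blocks = CLUSTER_CIDR.subnets(new_prefix=prefixlen)  # iterator of subnets
    return {node: next(blocks) for node in nodes}


class PodIPAM:
    """Hands out unique pod IPs from a single node's block."""

    def __init__(self, block: ipaddress.IPv4Network):
        self._free = iter(block.hosts())  # usable addresses, in order

    def assign(self) -> ipaddress.IPv4Address:
        return next(self._free)


cidrs = allocate_node_cidrs(["node-1", "node-2"])
print(cidrs["node-2"])  # 10.244.1.0/24
ipam = PodIPAM(cidrs["node-1"])
print(ipam.assign(), ipam.assign())  # 10.244.0.1 10.244.0.2
```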

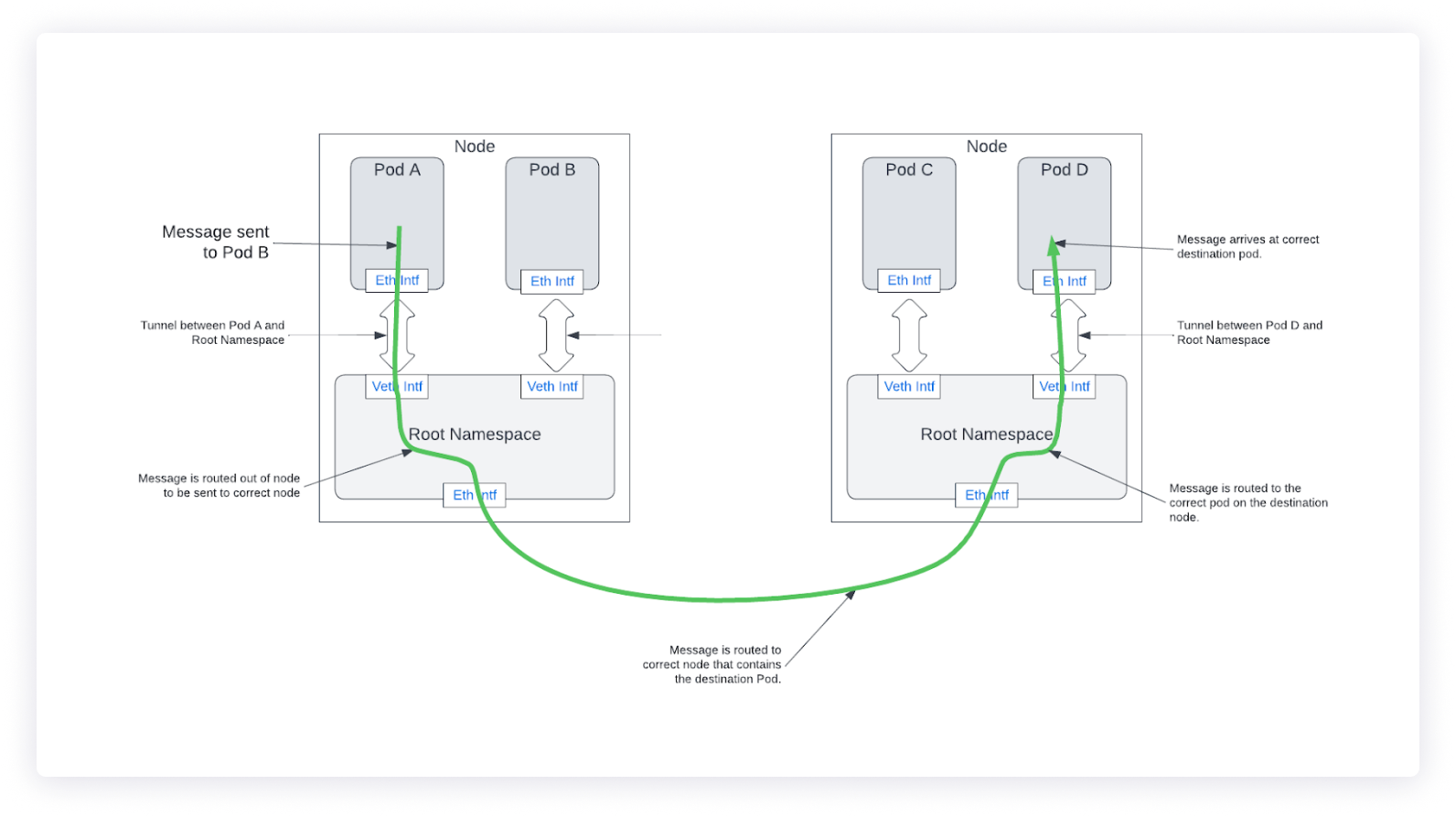

This same model works for communication between two pods on the same node and between two pods on different nodes. In the different-node case, when a message is addressed to a pod on another node, the root namespace sends it over the regular network connecting the nodes to the node that hosts the destination pod. The root namespace of that node then routes the message to the correct pod. This is illustrated in Figure 4.
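The two cases in Figures 3 and 4 can be traced with a small sketch. It assumes each node owns one of the hypothetical CIDR blocks shown and simply lists the hops a message passes through; it does not model real packet handling.

```python
import ipaddress

# Hypothetical layout: the CIDR block owned by each node.
NODE_CIDRS = {
    "node-1": ipaddress.ip_network("10.244.0.0/24"),
    "node-2": ipaddress.ip_network("10.244.1.0/24"),
}


def node_for(ip: str) -> str:
    """Find the node whose pod CIDR block contains this address."""
    addr = ipaddress.ip_address(ip)
    for node, cidr in NODE_CIDRS.items():
        if addr in cidr:
            return node
    raise LookupError(f"{ip} is not a pod address")


def path(src_ip: str, dst_ip: str) -> list[str]:
    """Hops a message takes from one pod to another."""
    src_node, dst_node = node_for(src_ip), node_for(dst_ip)
    hops = [f"pod {src_ip}", f"{src_node} root namespace"]
    if src_node != dst_node:
        # Cross the regular node-to-node network to the destination node.
        hops.append(f"{dst_node} root namespace")
    hops.append(f"pod {dst_ip}")
    return hops


print(path("10.244.0.5", "10.244.0.9"))  # same node
print(path("10.244.0.5", "10.244.1.7"))  # different nodes
```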

An interesting question is, “How does the root namespace know which node to send a message to?” Each node may run many pods, each with its own unique IP address, and there can be hundreds of nodes in a Kubernetes cluster. How does any given node know where to send a message to reach the right pod?

Kubernetes requires that every pod in the cluster have a unique IP address, so each pod is uniquely identifiable. The job of getting messages to those addresses is handled by a network plugin (CNI). Depending on the plugin, each node’s routing table is populated with a route to every other node’s CIDR block, those routes are distributed with a standard routing protocol such as BGP, or traffic is wrapped in an overlay network. On the local network segment, a standard protocol known as ARP maps each next-hop IP address to a hardware (MAC) address. These routes are created and updated automatically, because the makeup of nodes and pods in the cluster can change often.
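For plugins that program routes directly (rather than using an overlay), each node ends up with a routing table similar to the sketch below. The next-hop addresses are hypothetical, and the lookup uses longest-prefix match, the rule real routers use to pick the most specific route. Adding a route when a new node joins shows how the map can be updated dynamically.

```python
import ipaddress


class RouteTable:
    """A node's routing table: destination prefix -> next hop."""

    def __init__(self):
        self._routes: dict[ipaddress.IPv4Network, str] = {}

    def add(self, cidr: str, next_hop: str) -> None:
        self._routes[ipaddress.ip_network(cidr)] = next_hop

    def remove(self, cidr: str) -> None:
        self._routes.pop(ipaddress.ip_network(cidr), None)

    def lookup(self, ip: str) -> str:
        """Longest-prefix match: the most specific matching route wins."""
        addr = ipaddress.ip_address(ip)
        candidates = [net for net in self._routes if addr in net]
        if not candidates:
            raise LookupError(f"no route to {ip}")
        best = max(candidates, key=lambda net: net.prefixlen)
        return self._routes[best]


# Routes on node-1, as a plugin might program them (values hypothetical).
table = RouteTable()
table.add("0.0.0.0/0", "192.168.1.254")     # default route to the outside
table.add("10.244.0.0/24", "local bridge")  # pods on this node
table.add("10.244.1.0/24", "192.168.1.12")  # node-2's pod block

print(table.lookup("10.244.1.7"))  # 192.168.1.12
print(table.lookup("8.8.8.8"))     # 192.168.1.254

# node-3 joins the cluster: a route for its block is added.
table.add("10.244.2.0/24", "192.168.1.13")
print(table.lookup("10.244.2.3"))  # 192.168.1.13
```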

Incidentally, ARP is the same protocol used in normal host-based networking. Traditional networking raises the same question: “How do you know where to send a message destined for a given IP address?” There, ARP resolves addresses on the local network segment, while standard routing protocols and default routes automatically and dynamically work out how any computer on the internet can reach any other. The approach is fast, scales to an extremely large number of hosts, and is very stable; ARP itself has been in use since it was standardized in 1982 (RFC 826).

Using services to communicate

Even though each pod has an IP address that allows it to be directly addressable, there is one major problem with this model. Pods tend to come and go. If a pod has a problem, it’s terminated and a new pod is started. If traffic increases to a service and more instances are needed to handle the traffic, new pods are created. Essentially, pods are ephemeral, and as such, so are their IP addresses. How can you reliably send a message to a pod if the IP addresses for the pods keep changing?

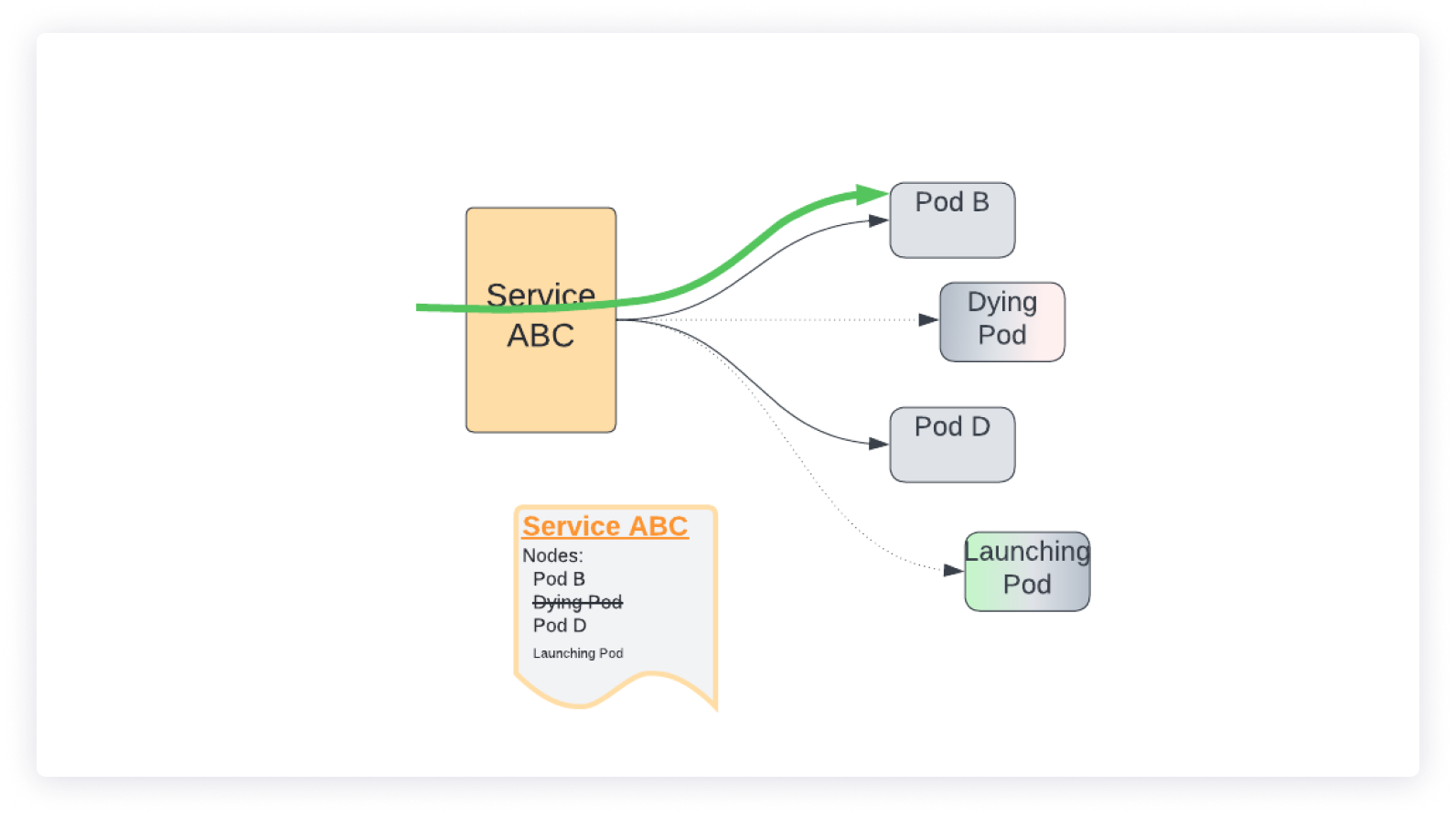

The answer to that problem is the service. A Kubernetes service provides a front end to a set of pods that all perform the same function; all pods that perform that function belong to the same service. As pods are launched and terminated, they are added to and removed from the corresponding service, so the service always has an up-to-date list of valid pods and their IP addresses.

Now, if you need the capabilities of a pod, rather than sending a message directly to the pod, you send it to the service. The service then forwards the request to one of the pods it knows is active. In this way, the service load balances the requests it receives across the pods that provide the capability, even if those pods are on different nodes.

The service has a fixed IP address assigned to it. This IP address does not change for the life of the service, so anyone who wants a pod to perform some action can talk to the service at a well-known IP address without worrying about which pod handles the request. Figure 5 shows a service named “ABC” and the pods it is connected to. In the diagram, one pod is dying and a new pod is being created. The service maintains the list of currently active pods and makes sure requests are forwarded to an appropriate active pod.
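The bookkeeping a service does can be sketched as a class holding a fixed virtual IP and a changing list of pod IPs. All addresses are hypothetical, and the round-robin choice is an assumption made for simplicity; Kubernetes does not promise any particular balancing order.

```python
import itertools


class Service:
    """A stable virtual IP in front of a changing set of pod IPs."""

    def __init__(self, name: str, cluster_ip: str):
        self.name = name
        self.cluster_ip = cluster_ip  # fixed for the life of the service
        self.endpoints: list[str] = []
        self._counter = itertools.count()

    def add_pod(self, pod_ip: str) -> None:
        if pod_ip not in self.endpoints:
            self.endpoints.append(pod_ip)

    def remove_pod(self, pod_ip: str) -> None:
        if pod_ip in self.endpoints:
            self.endpoints.remove(pod_ip)

    def pick(self) -> str:
        """Round-robin over whichever pods are currently active."""
        if not self.endpoints:
            raise RuntimeError(f"service {self.name} has no active pods")
        return self.endpoints[next(self._counter) % len(self.endpoints)]


abc = Service("ABC", "10.96.0.20")
for ip in ["10.244.0.5", "10.244.1.7", "10.244.2.3"]:
    abc.add_pod(ip)
print([abc.pick() for _ in range(3)])  # ['10.244.0.5', '10.244.1.7', '10.244.2.3']

# One pod dies and a replacement starts, as in Figure 5.
abc.remove_pod("10.244.1.7")
abc.add_pod("10.244.1.8")
print(abc.cluster_ip, abc.endpoints)  # 10.96.0.20 ['10.244.0.5', '10.244.2.3', '10.244.1.8']
```

Callers only ever use `cluster_ip`, so the pod replacement is invisible to them.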

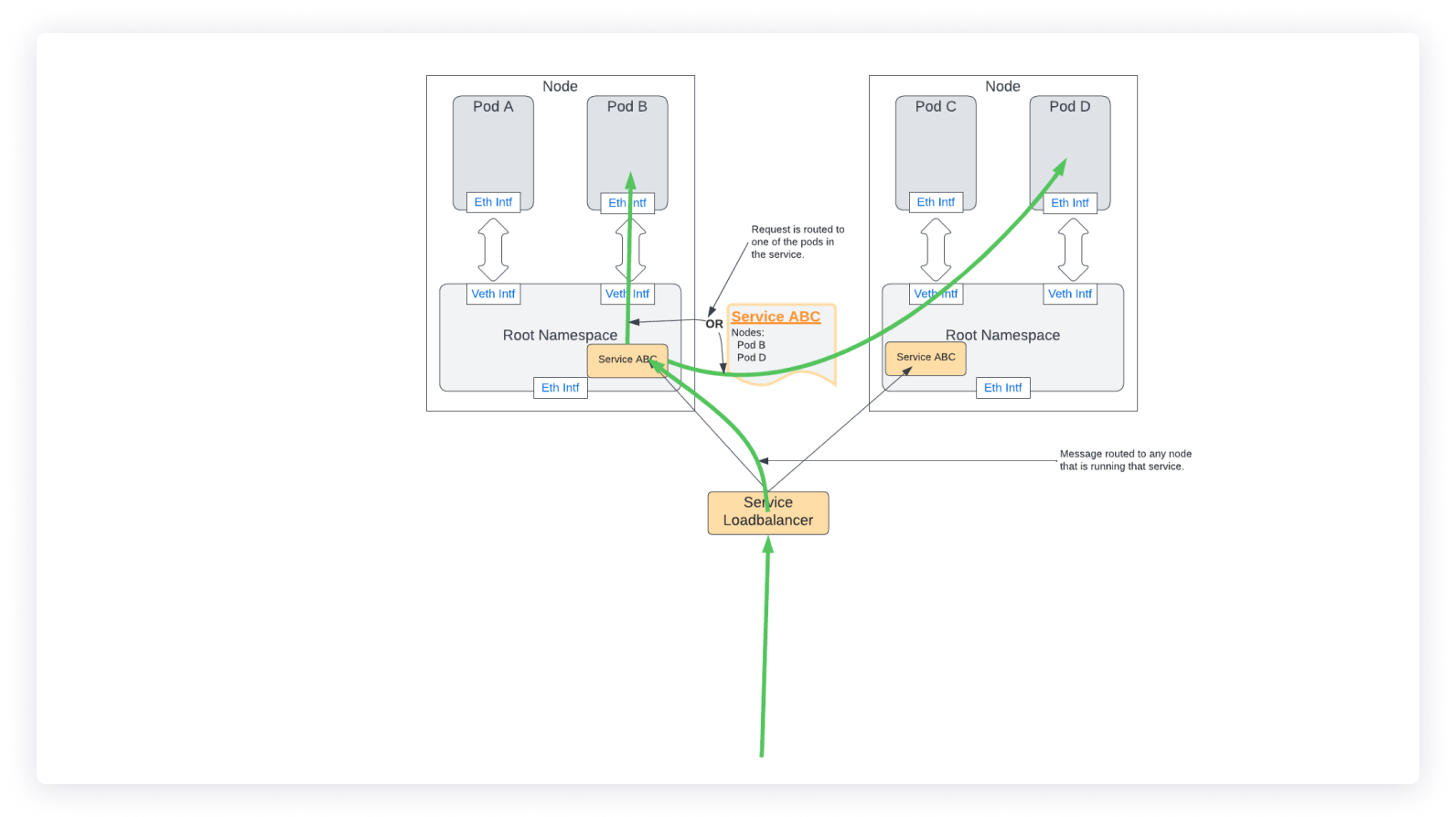

Pod to service

Routing a message from one pod to another is slightly different when services are involved. As shown in Figure 6, Pod A sends a message to Service ABC. The service, implemented in the root namespace of the node, determines which pod should receive the request. It then rewrites the destination IP address of the message to that of the chosen pod and forwards the request to that pod (Pod D in this example). The message is routed from the service to the pod exactly as shown previously in Figure 4. If a pod is terminated and a new one takes its place, the new pod receives requests sent to the service in place of the old pod. The service-to-pod mapping is completely dynamic.
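The address rewrite described above is a form of destination network address translation (DNAT). The sketch below applies it to a toy packet. The service address, port, and pod IPs are hypothetical; picking a backend at random loosely mirrors kube-proxy’s iptables mode, but other proxy modes use different algorithms, and port remapping is omitted.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    port: int


# Hypothetical service "ABC": (virtual IP, port) -> backing pod IPs.
SERVICES = {("10.96.0.20", 80): ["10.244.0.5", "10.244.1.7", "10.244.2.3"]}


def dnat(packet: Packet, rng: random.Random) -> Packet:
    """Rewrite a packet addressed to a service so it targets one pod."""
    backends = SERVICES.get((packet.dst, packet.port))
    if backends is None:
        return packet  # not a service address: leave it unchanged
    return replace(packet, dst=rng.choice(backends))


original = Packet(src="10.244.0.9", dst="10.96.0.20", port=80)
rewritten = dnat(original, random.Random(42))
print(original.dst, "->", rewritten.dst)
```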

External Internet to service

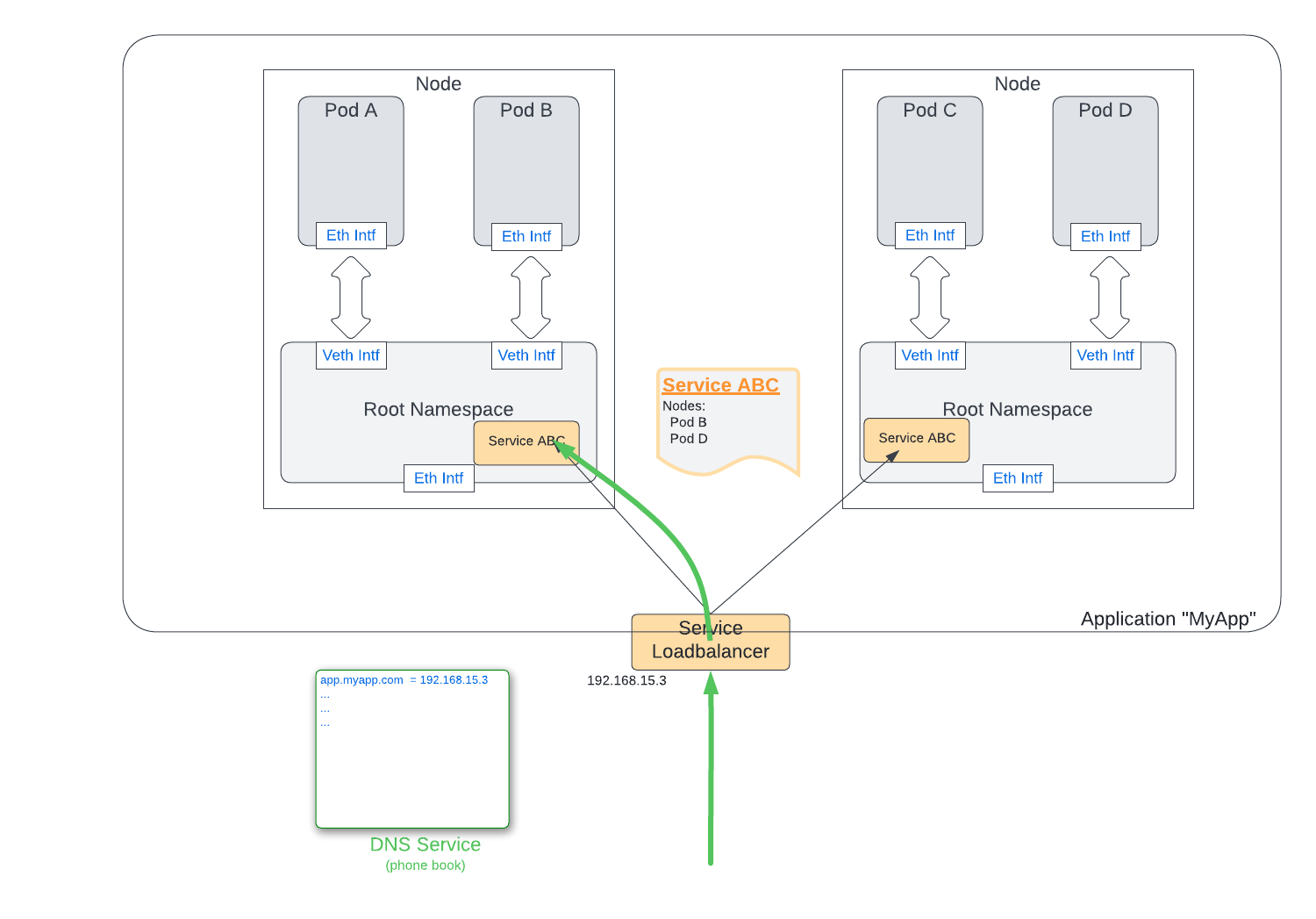

Services also allow the external internet to easily access the capabilities of the pods within the cluster without knowing any details of how the pods are laid out in the cluster. When a service is created to accept traffic from the external internet, a load balancer is attached to the front end of the system, facing the internet. The load balancer routes traffic to nodes in the cluster, and the service on the receiving node then picks an appropriate pod to send the message to, even if that pod is on another node. As a result, a request from the external internet can be routed to a particular pod without the requester knowing the pod’s address. The requester only knows the IP address of the service load balancer fronting the request.

Figure 7 shows the routing of a message from the external internet (bottom of image) through the service load balancer, to the service on a node, and on to the destination pod.
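The route in Figure 7 can be traced with a small sketch. It assumes the service spreads requests across all of its pods cluster-wide (the default behaviour, governed by a service’s `externalTrafficPolicy` field), so the node that receives a request may forward it to a pod on another node. Node names and pod IPs are hypothetical.

```python
import random

# Hypothetical cluster: node name -> IPs of service ABC's pods on that node.
PODS_BY_NODE = {
    "node-1": ["10.244.0.5"],
    "node-2": ["10.244.1.7", "10.244.1.8"],
    "node-3": [],  # runs no ABC pods, but can still receive the traffic
}


def external_request(rng: random.Random) -> list[str]:
    """Trace a request from the internet to a pod."""
    node = rng.choice(list(PODS_BY_NODE))  # load balancer picks a node
    all_pods = [ip for ips in PODS_BY_NODE.values() for ip in ips]
    pod = rng.choice(all_pods)  # service picks any pod in the cluster
    pod_node = next(n for n, ips in PODS_BY_NODE.items() if pod in ips)
    hops = ["internet client", "service load balancer", node]
    if pod_node != node:
        hops.append(pod_node)  # forwarded across nodes
    hops.append(f"pod {pod}")
    return hops


print(external_request(random.Random(7)))
```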

DNS and Kubernetes services

Now that the service has an externally visible IP address to receive traffic, we can put this address into DNS, so that we can refer to the service by its hostname rather than its IP address. Figure 8 shows how DNS maps the hostname “app.myapp.com” to the IP address of the service load balancer that handles this service.

Inter-cluster communications

Communication between pods in two different clusters is handled just like communication with the external internet. The pod sending the request looks up the hostname in DNS and gets the IP address of the service load balancer. The load balancer forwards the request to one of the nodes within the destination cluster. This node forwards the request to an appropriate pod within the destination cluster.

Large service-based applications

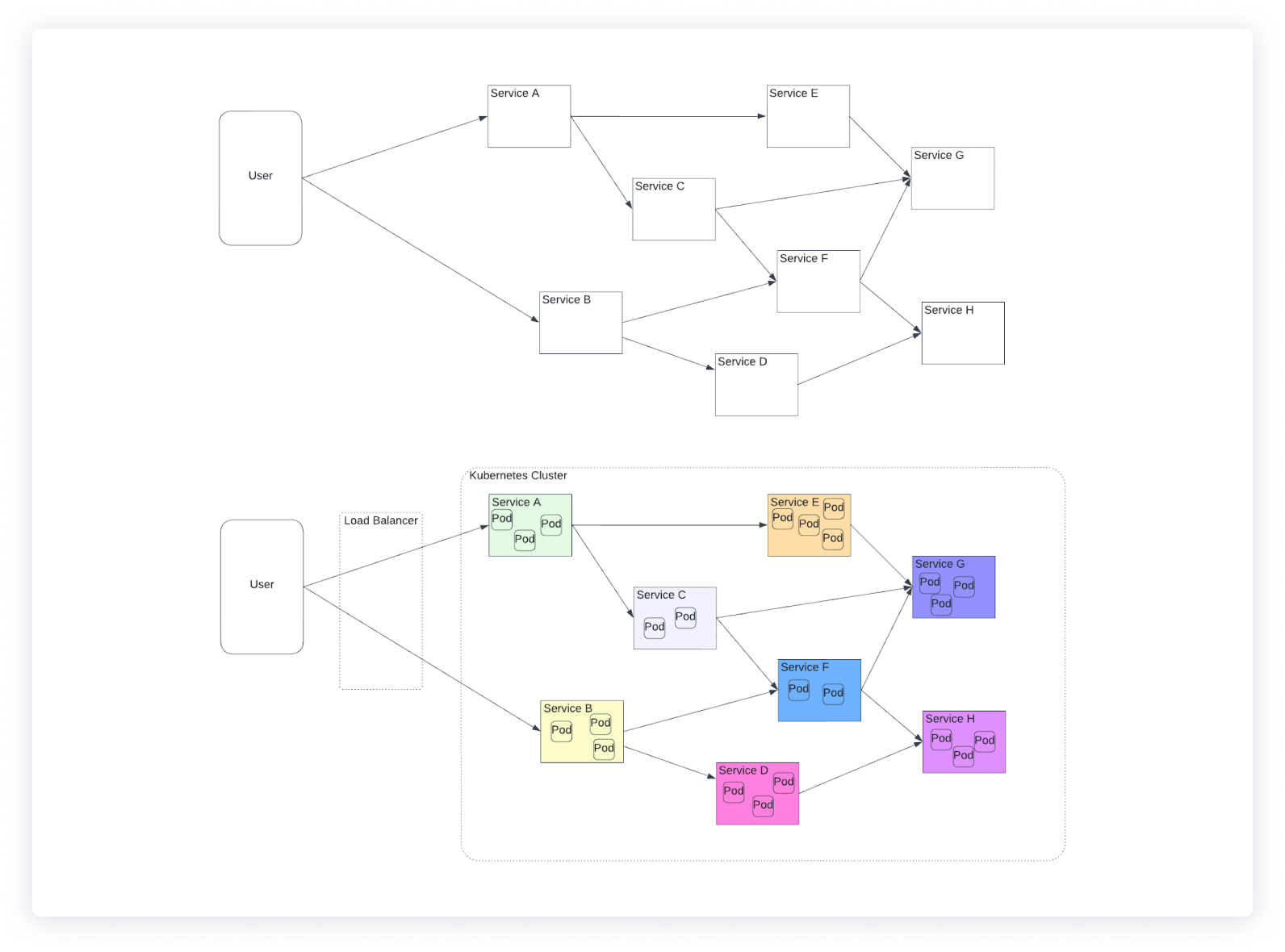

Building a large service-based application using Kubernetes is an exercise in creating multiple Kubernetes services, with each service operating multiple pods. The pods are spread among multiple nodes to provide appropriate load balancing and service-level availability guarantees.

Figure 9 shows a typical service-oriented application (top), and how Kubernetes services and pods map onto that application.

These pods are distributed across multiple nodes within the cluster, with the pods for a given service spread across multiple nodes for availability. Figure 10 shows the application from the perspective of pods running on nodes.

With this sort of application distribution, it is easy to see why pod-to-pod communication via services, across node boundaries, is so essential. Kubernetes provides the networking infrastructure that lets complex service-oriented architectures exist and thrive in a Kubernetes cluster environment.

Final thoughts

This was a simple overview of how networking works within a Kubernetes cluster. In general, Kubernetes is very flexible in how it allows communication between pods within the cluster, as well as how it communicates with the internet. While the details can get quite technical, the high-level overview is quite straightforward. Kubernetes networking provides a sophisticated, flexible model for networking within a distributed containerized environment.

If you want to use a third-party platform to build a monitoring dashboard for your Kubernetes clusters, consider using Airplane. Airplane is a developer platform for building internal tools. Its basic building blocks are Tasks, which are single- or multi-step operations that anyone can use, and Views, a React-based platform for quickly building UIs. You can use Airplane's pre-built component library and template library to customize your monitoring dashboard.
