By design, a Kubernetes cluster is distributed: it runs multiple instances of each service, which introduces complexity unless load is distributed carefully. Load balancers are services that distribute incoming traffic across a pool of hosts to keep workloads balanced and availability high.

A load balancer service acts as a traffic controller, routing client requests to the nodes capable of serving them quickly and efficiently. When one host goes down, the load balancer redistributes its workload among the remaining nodes. Conversely, when a new node joins the cluster, the service automatically starts sending requests to the PODs running on it.

In Kubernetes clusters, a Load Balancer service performs the following tasks:

- Distributing network loads and service requests efficiently across multiple instances

- Supporting autoscaling by spreading requests across instances as they are added or removed in response to demand changes

- Ensuring High Availability by sending workloads to healthy PODs

Types of load balancers available in Kubernetes

In Kubernetes, there are two types of Load Balancers:

- Internal Load Balancers - these enable routing across containers within the same Virtual Private Cloud while enabling service discovery and in-cluster load balancing.

- External Load Balancers - these are used to direct external HTTP requests into a cluster. An external load balancer in Kubernetes gives the cluster an IP address that will route internet traffic to specific nodes identified by ports.
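As a rough illustration of the difference, on most cloud providers an internal load balancer is requested by adding a provider-specific annotation to an otherwise ordinary LoadBalancer service. The sketch below assumes a GKE cluster and its `networking.gke.io/load-balancer-type` annotation; the service name, label, and ports are placeholders, and other providers use different annotation keys.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: internal-app            # hypothetical name
  annotations:
    # Assumption: the cluster runs on GKE. Omit this annotation
    # to request an external load balancer instead.
    networking.gke.io/load-balancer-type: "Internal"
spec:
  type: LoadBalancer
  selector:
    app: internal-app           # hypothetical POD label
  ports:
    - protocol: TCP
      port: 80                  # port exposed by the load balancer
      targetPort: 8080          # port the container listens on (assumed)
```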

Load balancer - service architecture

The load balancer service exposes cluster services to the internet or other containers within the cluster. Routing traffic among different nodes helps eliminate a single point of failure. The load balancer also checks for node health and ensures only healthy PODs handle application workloads. The architecture of the service depends on whether it is internal or external.
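On the Kubernetes side, one common way to keep traffic away from unhealthy PODs is a readiness probe: a POD that fails its probe is removed from the service's endpoints until it recovers. The following is a minimal sketch, assuming a hypothetical `web` Deployment serving HTTP on port 80; the image, path, and timings are illustrative only.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                     # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25     # placeholder image
          ports:
            - containerPort: 80
          readinessProbe:       # POD receives traffic only while this succeeds
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
```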

Internal routing

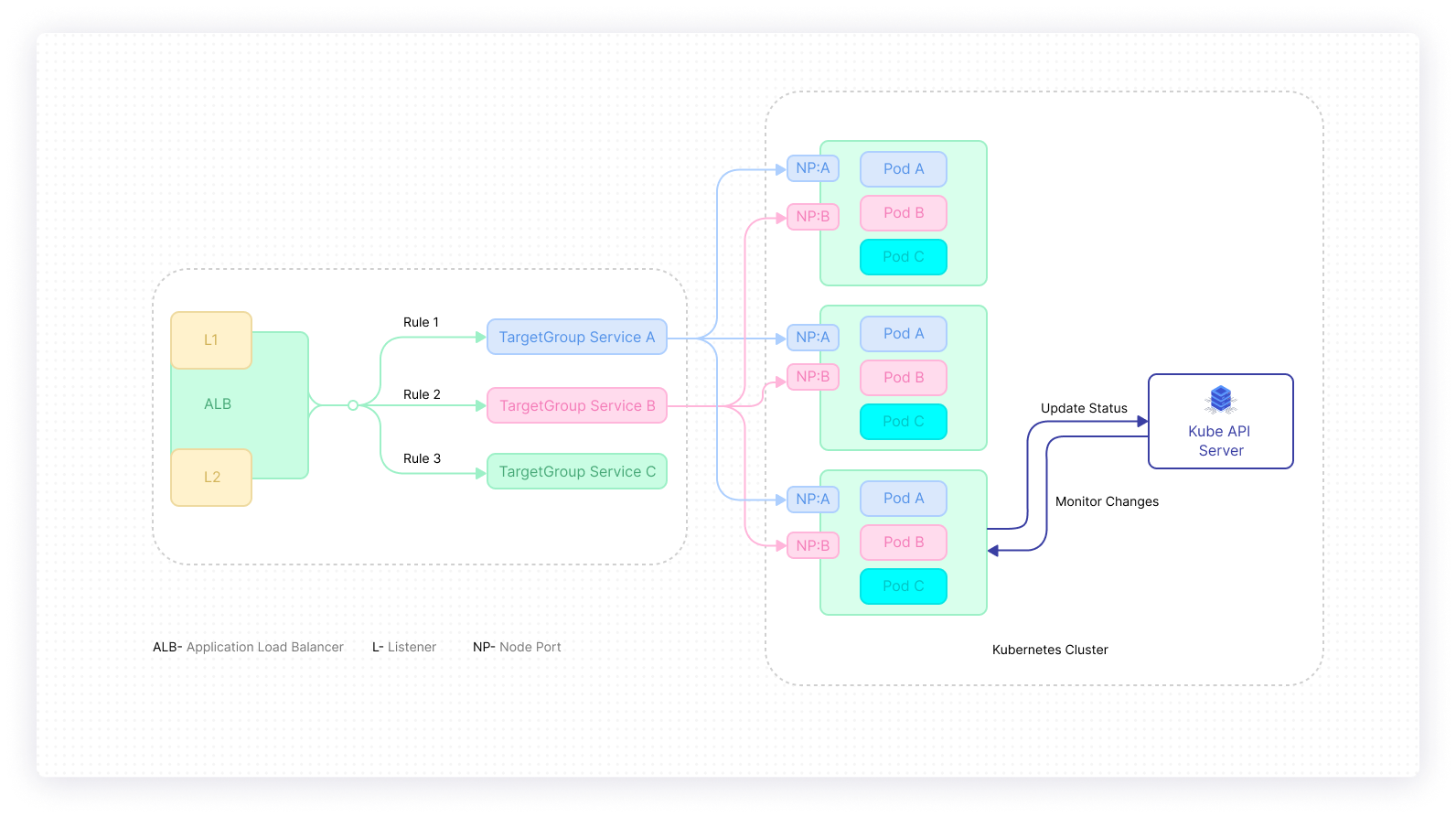

The internal load balancer can use HTTP, TCP, or UDP listeners, so it can balance traffic at the application layer (HTTP) or the transport layer (TCP and UDP). In such an architecture, the cluster’s services are identified by Target Groups. The listeners connect with the target groups through set rules. These rules are then applied to specific PODs running in nodes using Node Ports. A controller watches the API Server for POD changes and updates the load balancer to match; the load balancer itself is typically specified through an Ingress handled by an Ingress Controller, or as a LoadBalancer service.
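The listener, target group, and Node Port vocabulary above matches AWS Application Load Balancers, so one possible way to express this setup is an Ingress handled by the AWS Load Balancer Controller. This is only a sketch under that assumption: it presumes the controller is installed, and that a NodePort service named `web` (hypothetical) already exists in the same namespace.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: internal-web            # hypothetical name
  annotations:
    # Assumption: AWS Load Balancer Controller annotations
    alb.ingress.kubernetes.io/scheme: internal        # not internet-facing
    alb.ingress.kubernetes.io/target-type: instance   # route via Node Ports
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web       # hypothetical NodePort service
                port:
                  number: 80
```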

Internal-external routing separation

To expose a service endpoint externally via HTTPS, an external load balancer is placed at the front end so that the service can be reached through an IP address. External Load Balancers (ELB) abstract Kubernetes cluster resources from external internet traffic. An external load balancer extends the internal ClusterIP service by using an IP address assigned by the cloud provider to route traffic to Kubernetes containers. Kubernetes populates the service object with this IP address, after which the service controller performs health checks and establishes firewall rules where necessary.

The external balancer is defined using the following resources:

- An External Forwarding Rule that specifies the HTTPS Proxy, Ports, and IP address that users will need to connect to the service

- An HTTPS Proxy that uses the input URL to validate client requests

- A URL Map to help the HTTPS Proxy route requests to specific backends and servers

- A backend service that identifies healthy servers and distributes requests

- One or more backends connected to the backend service

- A Global Health Check rule that consistently checks whether servers are ready

- A firewall rule that allows health check traffic to reach the backends, so that only backends that pass the checks receive requests.

External load balancers have slight variations in configuration depending on the cloud provider offerings.

Adding a load balancer to a Kubernetes cluster

There are two ways to provision a load balancer for a Kubernetes cluster:

Using a configuration file

The load balancer is provisioned by setting the type field on the service configuration file to LoadBalancer. This load balancer is managed and guided by the cloud service provider and directs traffic to back-end PODs. The service configuration file should look similar to:
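A minimal sketch of such a file follows. The service name, selector label, and port numbers are placeholder assumptions rather than values from a specific cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service              # hypothetical name
spec:
  type: LoadBalancer            # asks the cloud provider to provision a load balancer
  selector:
    app: my-app                 # hypothetical label on the back-end PODs
  ports:
    - protocol: TCP
      port: 80                  # port exposed by the load balancer
      targetPort: 9376          # port the PODs listen on (assumed)
```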

Some cloud providers allow users to assign an IP address to the load balancer. This is configured with the user-specified loadBalancerIP field (deprecated since Kubernetes v1.24 in favor of provider-specific annotations). If the user does not specify one, an ephemeral IP address is assigned to the load balancer. If the user specifies one but the cloud provider does not support the feature, the field is ignored. Information about the provisioned load balancer is published in the service's .status.loadBalancer field, which is populated by the cluster rather than set by the user. For instance, the ingress IP address assigned by the provider appears under .status.loadBalancer.ingress.
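The sketch below shows a service that requests a specific address, together with the status reported once the load balancer has been provisioned. The name, label, and ports are placeholders, and the address comes from the 192.0.2.0/24 documentation range.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service              # hypothetical name
spec:
  type: LoadBalancer
  loadBalancerIP: 192.0.2.127   # requested address; ignored if the provider lacks support
  selector:
    app: my-app                 # hypothetical label
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376
status:
  # Reported by the cluster after provisioning; not written by the user
  loadBalancer:
    ingress:
      - ip: 192.0.2.127
```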

Using Kubectl

It is also possible to create a load balancer using the kubectl expose command by specifying the flag --type=LoadBalancer:
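A sketch of such a command, assuming a POD named darwin already exists and listens on port 9376 (the exact flags in a given setup may differ):

```shell
# Expose the existing POD "darwin" through a new LoadBalancer service named "darwin"
kubectl expose pod darwin --port=9376 --type=LoadBalancer --name=darwin
```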

The command above creates a new service named darwin and connects the POD named darwin to port 9376.

This post was intended to provide an in-depth insight into Kubernetes load balancing, including its architecture and various provisioning methods for a Kubernetes cluster.

As one of the core tasks of a Kubernetes administrator, load balancing is critical in maintaining an efficient cluster. With an optimally provisioned Load Balancer, traffic can be efficiently distributed across cluster PODs and nodes, ensuring High Availability, Quick Recovery, and Low Latency for containerized applications running on Kubernetes.
