Many Airplane users run tasks that integrate with their production systems, and the overwhelming majority of those users run their production systems on AWS. In this post, we'll walk through how to connect the two.

Via Self-Hosted AWS Agents

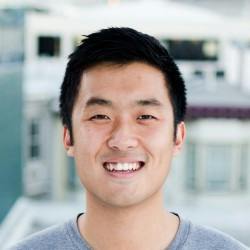

With Airplane, you can self-host agents within your own AWS account and VPC. This gives you the most flexibility: Airplane agents can run inside your private networks, and you can attach custom IAM policies that grant access to private AWS resources (e.g. S3 buckets).

The agent is launched as a set of EC2 instances. You can specify the subnet to use (which determines the VPC) and the IAM policies to attach.

For example, if you deploy the agent with the Terraform module, you can set the `vpc_subnet_ids` and `managed_policy_arns` inputs.

When Node or Python tasks run on these agents, the standard AWS SDK will automatically pick up the instance role for authentication.
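One way to confirm which credentials the SDK actually picked up is the STS `GetCallerIdentity` call. The sketch below uses a hypothetical helper, `caller_role_name`, which takes an STS client as a parameter so it can be exercised without AWS. It assumes that role credentials, such as an EC2 instance role, are reported as an `assumed-role` ARN, which is how STS describes them.

```python
from typing import Optional


def caller_role_name(sts_client) -> Optional[str]:
    """Return the IAM role name the SDK authenticated as, or None otherwise.

    Hypothetical helper (not part of Airplane or boto3). `sts_client` is
    expected to behave like boto3.client("sts").
    """
    arn = sts_client.get_caller_identity()["Arn"]
    # ARN layout: arn:partition:service:region:account-id:resource
    resource = arn.split(":", 5)[5]
    # Role credentials are reported as assumed-role/<role-name>/<session-name>;
    # for an EC2 instance role the session name is the instance ID.
    kind, _, rest = resource.partition("/")
    if kind != "assumed-role":
        return None  # e.g. an IAM user authenticating with access keys
    return rest.split("/", 1)[0]
```

In a task running on a self-hosted agent, `print(caller_role_name(boto3.client("sts")))` should print the name of the role attached to the agent instances.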

Via AWS API Key

Alternatively, if you'd like to use Airplane-hosted agents, you can set the standard AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and (optional) AWS_DEFAULT_REGION environment variables to configure access to AWS APIs.

- Generate an AWS API key as you normally would (e.g. via the AWS console). This gives you two values: the access key ID and the secret access key.

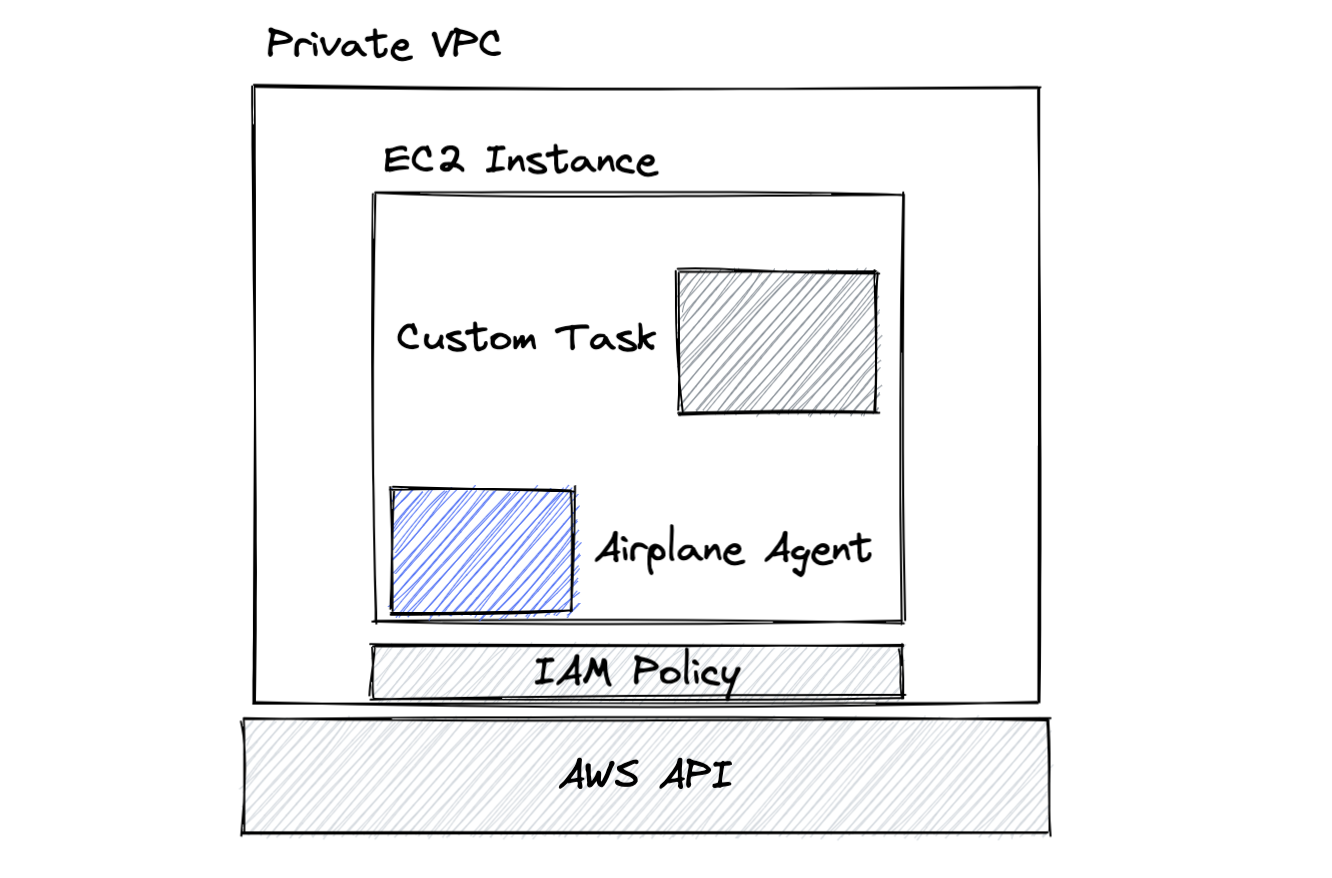

- Create Config Variables in Airplane to store your `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` secrets, and attach them to your task as environment variables of the same name.

- From your task code, you can now use the standard AWS SDKs, which will pick up the environment variables when authenticating.
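A missing config variable usually surfaces later as a confusing credentials error from the SDK. To avoid that, an API-key task can check for the variables up front. The following is a minimal sketch that assumes the variable names listed above. `missing_aws_vars` and `require_aws_env` are hypothetical helpers and are not part of any SDK.

```python
import os
from typing import List, Mapping

# Required for API-key authentication; AWS_DEFAULT_REGION is optional,
# so this sketch does not check it.
REQUIRED_AWS_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_aws_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the required AWS variables that are unset or empty in `env`."""
    return [name for name in REQUIRED_AWS_VARS if not env.get(name)]


def require_aws_env(env: Mapping[str, str] = os.environ) -> None:
    """Fail fast with a readable message instead of an SDK auth error later."""
    missing = missing_aws_vars(env)
    if missing:
        raise RuntimeError(
            "Missing AWS environment variables: " + ", ".join(missing)
            + ". Attach the matching config variables to this task."
        )
```

Call `require_aws_env()` at the top of the task, before creating any boto3 client. Use it only for tasks that authenticate via API key, because on self-hosted agents with an instance role these variables are intentionally absent.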

Amazon ECR: Private Images

Docker image tasks can use private images hosted on Amazon ECR. This is only available for self-hosted agents, and you only need it if you're using your own Docker images.

- Make sure your agent has an appropriate IAM policy attached. You can use the built-in read-only ECR policy, `arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly`, or create your own. If you create your own, make sure it allows the `ecr:GetAuthorizationToken`, `ecr:BatchGetImage`, and `ecr:GetDownloadUrlForLayer` actions.

- When the agent prepares to run your Docker task, it automatically detects ECR images and attempts to use the agent's IAM profile to generate and use ECR credentials.
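If you write your own policy, the document might look something like the sketch below. It assumes you want to limit pulls to specific repositories, and the repository ARN is a placeholder. The policy grants `ecr:GetAuthorizationToken` on `"*"`, because that action cannot be scoped to a repository. It then scopes the two image-pull actions to the listed repositories. `ecr_pull_policy` is a hypothetical helper.

```python
import json
from typing import List


def ecr_pull_policy(repository_arns: List[str]) -> str:
    """Build an IAM policy document (JSON) allowing image pulls from the given repos.

    Hypothetical helper; the output is a plain IAM policy document.
    """
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Needed to obtain registry credentials; this action does not
                # support resource-level scoping, so it uses "*".
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                # The image-pull actions, limited to specific repositories.
                "Effect": "Allow",
                "Action": ["ecr:BatchGetImage", "ecr:GetDownloadUrlForLayer"],
                "Resource": repository_arns,
            },
        ],
    }
    return json.dumps(policy, indent=2)


# Placeholder ARN: substitute your own region, account ID and repository name.
print(ecr_pull_policy(["arn:aws:ecr:us-east-1:123456789012:repository/my-task-image"]))
```

You could create this as a customer managed policy and attach its ARN to the agent, for example through `managed_policy_arns` if you deploy with the Terraform module.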

Using AWS Services

Once you've set up authentication, you can connect to AWS services the same way you would from any other code.

For example, in Python you can use boto3, the AWS SDK for Python.
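Here is a minimal sketch of what that looks like, assuming boto3 is installed in the task's environment. No credentials appear in the code, because `boto3.client("s3")` resolves them from the environment variables or the instance role described above. The helper name `bucket_names` is illustrative only.

```python
from typing import List


def bucket_names(s3_client) -> List[str]:
    """Return the names of the S3 buckets visible to the task's credentials.

    Illustrative helper. `s3_client` is expected to behave like
    boto3.client("s3"), which finds credentials on its own.
    """
    response = s3_client.list_buckets()
    return [bucket["Name"] for bucket in response["Buckets"]]
```

In a task you would call `bucket_names(boto3.client("s3"))`. Passing the client in, rather than creating it inside the function, keeps the function easy to test with a stub.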

The exact services you might want to reach will depend on your use case, but below are some common scenarios.

Parameter Store: Secrets

If you already store secrets such as database passwords in Parameter Store or Secrets Manager, your task can read them in when it starts running.
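The following is a minimal sketch for Parameter Store, assuming boto3 and parameter names of your choosing. `load_parameters` is a hypothetical helper. It sends requests in batches because `GetParameters` accepts at most 10 names per call, and it raises an error if any name is unknown.

```python
from typing import Dict, Iterable

# GetParameters accepts at most 10 names per request.
MAX_NAMES_PER_CALL = 10


def load_parameters(ssm_client, names: Iterable[str]) -> Dict[str, str]:
    """Fetch and decrypt Parameter Store values, keyed by parameter name.

    Hypothetical helper; `ssm_client` is expected to behave like boto3.client("ssm").
    """
    name_list = list(names)
    values: Dict[str, str] = {}
    for start in range(0, len(name_list), MAX_NAMES_PER_CALL):
        batch = name_list[start:start + MAX_NAMES_PER_CALL]
        # WithDecryption=True returns SecureString values as plaintext.
        response = ssm_client.get_parameters(Names=batch, WithDecryption=True)
        if response.get("InvalidParameters"):
            raise KeyError("Unknown parameters: " + ", ".join(response["InvalidParameters"]))
        for param in response["Parameters"]:
            values[param["Name"]] = param["Value"]
    return values
```

In a task, you might call `load_parameters(boto3.client("ssm"), ["/prod/db-password"])`, where the parameter name is a placeholder. Secrets Manager works similarly through `get_secret_value(SecretId=...)`, which returns the secret in the `SecretString` field.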

S3: Object Storage

You can use S3 as you normally would, so a broad range of use cases is possible. For example:

- Write large binary files produced by your task to an S3 bucket, and have the task output the object's location (e.g. "Data written to s3://my-bucket/my-key") for users to reference.

- Store sensitive secrets and configuration in a locked-down bucket, and read them into your task at runtime.
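Both patterns can be sketched with two small helpers, assuming boto3 and placeholder bucket and key names. `publish_output` and `read_json_config` are hypothetical. The config helper also assumes the configuration object is stored as JSON.

```python
import json
from typing import Any, Dict


def publish_output(s3_client, path: str, bucket: str, key: str) -> str:
    """Upload a local result file and print where it went so users can find it.

    Hypothetical helper; `s3_client` is expected to behave like boto3.client("s3").
    """
    s3_client.upload_file(path, bucket, key)
    uri = f"s3://{bucket}/{key}"
    print(f"Data written to {uri}")
    return uri


def read_json_config(s3_client, bucket: str, key: str) -> Dict[str, Any]:
    """Read a JSON configuration object from a locked-down bucket at runtime.

    Hypothetical helper; assumes the object body is JSON.
    """
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return json.loads(response["Body"].read())
```

In a task you would pass `boto3.client("s3")` as the first argument. Access to the locked-down bucket is then governed entirely by the IAM policy attached to the task's credentials.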

RDS: Database

There's nothing special about connecting to RDS. Just as with any other app, you can store the connection string in a `DATABASE_URL` config variable and attach it to your task as an environment variable.

If you're using SQLAlchemy in Python, for example, you can read that URL from the environment and pass it to `create_engine`.
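A minimal sketch of the environment-reading side follows, using only the standard library. `get_database_url` and `describe_target` are hypothetical helpers. The second one is there so the task can log which database it is connecting to without printing the password embedded in the URL.

```python
import os
from typing import Mapping
from urllib.parse import urlsplit


def get_database_url(env: Mapping[str, str] = os.environ) -> str:
    """Read the connection string from DATABASE_URL, failing with a clear message."""
    url = env.get("DATABASE_URL")
    if not url:
        raise RuntimeError("DATABASE_URL is not set; attach the config variable to this task.")
    return url


def describe_target(url: str) -> str:
    """Return host[:port]/database for logging, omitting username and password."""
    parts = urlsplit(url)
    port = f":{parts.port}" if parts.port else ""
    return f"{parts.hostname}{port}{parts.path}"
```

With SQLAlchemy installed, a task could call `engine = sqlalchemy.create_engine(get_database_url())` and print `describe_target(get_database_url())` for visibility in the task logs.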

Wrap up

The main thing you need to decide is how your Airplane tasks will authenticate to AWS: via self-hosted agents or via an API key. Once authentication is configured, you can use the standard AWS SDKs to connect to AWS services just as you normally would.