Kubernetes is a leading orchestration system for containerized applications, offering DevOps professionals varying degrees of control over their deployments. While many higher-level configurations are possible within Kubernetes, deeper control is often necessary to ensure ideal backend performance. DaemonSets give admins one such form of control: they ensure that a copy of a pod runs on every node, or on a chosen subset of nodes, which ordinary scheduling alone does not guarantee.

What follows is an introduction to Kubernetes DaemonSets, including their benefits, use cases, and pertinent best practices. It covers the basics as well as some of the more advanced uses of this popular Kubernetes tool.

What are DaemonSets?

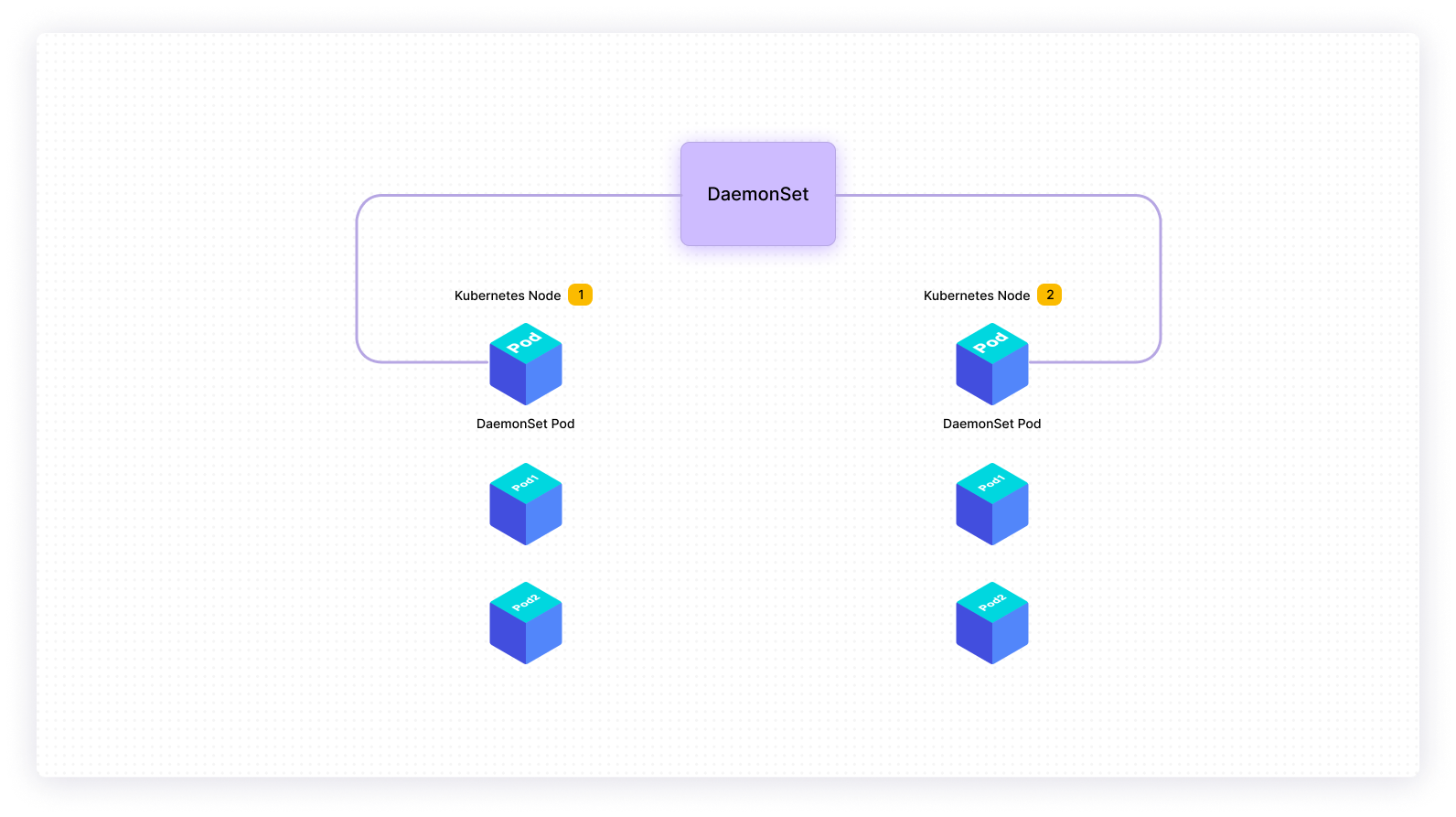

Essentially, DaemonSets are Kubernetes objects that ensure a copy of a given pod runs on every node in your cluster, or on a subset of nodes that you select. Why is this important? Certain applications and clusters rely on background processes that must be present on each node. Using the scheduler alone makes it more challenging to apply such blanket processes across all cluster nodes simultaneously. By leveraging DaemonSets, you can declare crucial tasks and services once and have them run on every eligible node. This has a few core applications:

- Maintenance

- Monitoring and logging

- Node and cluster oversight

Every cluster has a desired state that indicates how containerized applications should run; these states are declared in YAML configuration files and submitted through the Kubernetes API. However, desired states and observed states don’t always match. The same goes for deployed pods. When an eligible node is missing its daemon pod, for example because the node has just joined the cluster or the pod was deleted, the DaemonSet controller automatically creates an appropriate pod on that node. When a node is removed from the cluster, its daemon pod is garbage collected.

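How does a DaemonSet know when reality has drifted from expectations? Its controller continuously compares the nodes that should run a daemon pod with the nodes that actually do. It does not measure performance itself; that job falls to the workloads DaemonSets typically run, specifically automated logging and resource monitoring. These processes are typically long-running, meaning they operate for as long as the node is up.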

Although system health can vary at a moment’s notice, these ongoing processes make DaemonSets extremely valuable for observing systemic performance trends. Creating and removing the underlying pods is handled by the DaemonSet controller.

What makes DaemonSets so useful? There are some disadvantages to managing daemons directly on their nodes. Daemon processes must run continuously for as long as the node is active. Additionally, deferring to the node can mean accidentally pairing daemons with mismatched runtime environments. Each daemon process should run properly within a container and remain aware of its place within the greater Kubernetes system. DaemonSets provide that awareness, where processes might otherwise be lost in the shuffle.

Creating a DaemonSet

As with many objects in Kubernetes, creating a DaemonSet is as simple as assembling a YAML configuration file. This will determine how daemon-related pods are applied across compatible nodes. The term “compatible” is appropriate here, since DaemonSet YAML files often specify some sort of toleration or condition that allows pods to be scheduled on certain nodes. These pod-deployment rules directly impact the use of customized DaemonSets.

The Kubernetes documentation, however, only outlines a need for four specific YAML components when creating a functional DaemonSet: fields for apiVersion, kind, and metadata, plus a section for .spec. This latter section holds the pod template and the selector that matches it, along with any tolerations (with their operators and effects), container configurations, and terminationGracePeriodSeconds.

DaemonSet pods are defined by a pod template, outlined via .spec.template. The template must specify labels that match .spec.selector, and its restartPolicy must be Always or left unspecified (which defaults to Always).

Here’s what a YAML configuration file might look like if you use an Alpine Linux image:
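The following is a minimal sketch rather than a definitive manifest. The name daemonlogging, the app label, the alpine:3.19 tag, the resource figures, and the heartbeat command are all illustrative assumptions; the control-plane toleration is included only to show where tolerations live.

```yaml
# Hypothetical example: name, labels, image tag, and command are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonlogging
  labels:
    app: daemonlogging
spec:
  selector:
    matchLabels:
      app: daemonlogging        # must match the template labels below
  template:
    metadata:
      labels:
        app: daemonlogging
    spec:
      tolerations:
        # Allow scheduling onto control-plane nodes, which are usually tainted.
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
      terminationGracePeriodSeconds: 30
      containers:
        - name: daemonlogging
          image: alpine:3.19
          # Stand-in for a real daemon: print a timestamp once a minute.
          command: ["/bin/sh", "-c", "while true; do date; sleep 60; done"]
          resources:
            requests:
              cpu: 100m
              memory: 64Mi
            limits:
              memory: 128Mi
```

No restartPolicy is set in the template, so it defaults to Always, which is what a DaemonSet requires.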

Once you create the configuration file for your DaemonSet, you must apply it via kubectl. This requires a simple command that points at the file’s path or URL:

kubectl apply -f https://respository.io/example/controllers/daemonlogging.yaml

This applies your DaemonSet to all matching nodes, with pods deployed according to your specifications. In addition to tolerations, though, you’ll need to consider their counterparts, called taints. Taints are properties set on nodes that let them repel any pods that do not tolerate them.

Taints work together with tolerations. If a pod’s toleration matches a node’s taint, that taint no longer blocks the pod, and the pod can be scheduled on the node as long as other conditions are met. These properties thus work in tandem to ensure nodes aren’t mismatched with the wrong pods. Taints with the NoExecute effect also evict already-running pods that lack a matching toleration. This can naturally affect how DaemonSets are applied or if they can be used in a given scenario.
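To make the pairing concrete, here is a sketch of a DaemonSet whose pods tolerate two taints. The taint key dedicated, its value monitoring, and the object names are hypothetical; node.kubernetes.io/not-ready is a taint that Kubernetes itself places on nodes.

```yaml
# Sketch only: the "dedicated=monitoring" taint and all names are assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: tolerant-daemon
spec:
  selector:
    matchLabels:
      app: tolerant-daemon
  template:
    metadata:
      labels:
        app: tolerant-daemon
    spec:
      tolerations:
        # Matches a node taint of dedicated=monitoring:NoSchedule,
        # so these pods may be scheduled on nodes carrying that taint.
        - key: dedicated
          operator: Equal
          value: monitoring
          effect: NoSchedule
        # Exists ignores the value; tolerating NoExecute prevents eviction
        # while the node is reported as not ready.
        - key: node.kubernetes.io/not-ready
          operator: Exists
          effect: NoExecute
      containers:
        - name: tolerant-daemon
          image: alpine:3.19
          command: ["/bin/sh", "-c", "while true; do date; sleep 60; done"]
```

A node without either taint still accepts these pods; tolerations permit scheduling onto tainted nodes but do not restrict pods to them.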

Node affinity and scheduling variations

Evictions aside, taints and tolerations are useful for reserving dedicated nodes, or nodes with specialized hardware, for particular pods. You also have to account for something called node affinity, that is, properties that attract pods to certain nodes. Within your YAML file, you can specify nodeAffinity using nodeSelectorTerms, including matchExpressions or matchFields with keys, operators, and values. Note that the DaemonSet controller adds a nodeAffinity term naming the target node to each pod it creates, rather than setting .spec.nodeName directly. While affinity can be based on hard requirements, it may also be based on softer preferences.

DaemonSet pods can be placed by the Kubernetes default scheduler. In that mode, the DaemonSet controller creates each pod with a nodeAffinity term for its target node and the scheduler binds it; this behavior was introduced behind the ScheduleDaemonSetPods feature gate and is the default in current releases. On older clusters without it, the DaemonSet controller places the pods itself. Similar to node affinity, you can also match DaemonSets to nodes using specific node selectors. The .spec.template.spec.nodeSelector field tells the DaemonSet controller to create pods only on nodes whose labels match.
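A sketch of both mechanisms in one manifest follows. The disktype=ssd label and the object names are hypothetical; kubernetes.io/os is a label that Kubernetes sets on nodes automatically.

```yaml
# Sketch only: the "disktype" label and all names are assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ssd-daemon
spec:
  selector:
    matchLabels:
      app: ssd-daemon
  template:
    metadata:
      labels:
        app: ssd-daemon
    spec:
      # Simple form: only nodes carrying this exact label are eligible.
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        nodeAffinity:
          # Hard requirement: the node must also be labeled disktype=ssd.
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: disktype
                    operator: In
                    values: ["ssd"]
      containers:
        - name: ssd-daemon
          image: alpine:3.19
          command: ["/bin/sh", "-c", "while true; do date; sleep 60; done"]
```

When both fields are present, a node must satisfy the nodeSelector and the required affinity terms to receive a pod.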

Updating and removing a DaemonSet

A given DaemonSet reacts automatically whenever node labels are changed: it promptly creates pods on newly matching nodes and deletes pods from nodes that no longer match. While you can modify the pods that a DaemonSet creates, some pod fields cannot be changed after creation.

This places de facto limits on the scope of your updates. Furthermore, the DaemonSet controller will use your original template the next time a node is created, so manual edits to individual pods do not carry over. This does hamper adaptability to an extent; it may also be a reason to leverage the default Kubernetes scheduler, depending on your configurations and affinity specs.

Update strategies

That said, Kubernetes applies DaemonSet updates on a rolling basis by default, using the RollingUpdate strategy. The rolling method is more controlled and doesn’t wipe everything at once: old pods are replaced in stages. By default, at most one DaemonSet pod will run on a given node while the update progresses. Meanwhile, the OnDelete strategy dictates that new DaemonSet pods are only created once you manually delete the old pods. This method is comparatively more tedious, but it does offer a degree of control to admins who prefer stricter processes.

Designating a strategy is fairly simple within your DaemonSet YAML file. All you need to do is create an updateStrategy field, with your chosen update type denoted underneath. The updateStrategy field lives directly within your overarching .spec section, as a sibling of the selector and template fields.

For the rolling strategy, which is the default, some other properties are helpful. For instance, you may cap the number of pods that can be unavailable during the process using .spec.updateStrategy.rollingUpdate.maxUnavailable, given either as an absolute number or as a percentage (the default is 1). This limits the active impact of your updates, ensuring that your system won’t be hobbled while they are running. You can also set .spec.minReadySeconds, the time a newly created pod must stay ready before it counts as available and the rollout moves on.
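As a sketch of where these fields sit, assuming hypothetical names and illustrative numbers:

```yaml
# Sketch only: names, labels, and numbers are illustrative assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonlogging
spec:
  # A new pod must stay ready for 10 seconds before it counts as available.
  minReadySeconds: 10
  updateStrategy:
    type: RollingUpdate          # the alternative is OnDelete
    rollingUpdate:
      maxUnavailable: 1          # absolute number, or a percentage such as "10%"
  selector:
    matchLabels:
      app: daemonlogging
  template:
    metadata:
      labels:
        app: daemonlogging
    spec:
      containers:
        - name: daemonlogging
          image: alpine:3.19
          command: ["/bin/sh", "-c", "while true; do date; sleep 60; done"]
```

With type set to OnDelete, the rollingUpdate block is omitted and pods are replaced only after you delete them yourself.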

How to delete a DaemonSet

Deleting a DaemonSet is also relatively straightforward. Passing the --cascade=orphan flag to kubectl (--cascade=false in older kubectl versions) leaves the pods on their nodes. If you later create a new DaemonSet with the same selector, it adopts those pods and replaces any that need replacing in keeping with its update strategy.

You can delete a DaemonSet within kubectl by using the following command:

kubectl delete -f daemonset.yaml

Unless you pass a --cascade flag that orphans them, this command will also clean up any pods created via the DaemonSet, which is something to keep in mind.

Examples of DaemonSet usage

Logging is one clear use case for a DaemonSet. Visibility and monitoring are everything when it comes to measuring Kubernetes’ performance or health. Collecting logs on each node is essential to retroactive performance analysis. Because DaemonSets run on all applicable nodes, they are natural conduits for capturing those critical events as they occur. You can run a node-level logging agent as a DaemonSet; the agent reads logs on its node and sends them to a designated backend. It typically has access to a directory holding the log files from all application containers on that node. This is a favorable setup because no application changes are needed to facilitate it.
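The shape of such an agent can be sketched as follows. This is not a production logging setup: the tail command stands in for a real agent such as Fluentd or Fluent Bit, the names are hypothetical, and the sketch assumes the node keeps container logs under /var/log/containers with targets that also live under /var/log.

```yaml
# Sketch only: "tail" is a stand-in for a real log shipper; names are assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-log-reader
spec:
  selector:
    matchLabels:
      app: node-log-reader
  template:
    metadata:
      labels:
        app: node-log-reader
    spec:
      containers:
        - name: log-reader
          image: alpine:3.19
          # Follow every container log file on this node and echo new lines.
          command: ["/bin/sh", "-c", "tail -n 0 -F /var/log/containers/*.log"]
          volumeMounts:
            - name: varlog
              mountPath: /var/log
              readOnly: true
      volumes:
        # Expose the node's own log directory to the pod.
        - name: varlog
          hostPath:
            path: /var/log
```

The hostPath volume is what gives each pod access to its own node’s log directory; a real agent would forward those lines to a backend instead of printing them.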

Similar to logging agents, the DaemonSet also helps third-party monitoring agents run within each target node. You might have heard of Prometheus, Datadog, or AppDynamics, each of which offers its own connected Kubernetes monitoring solution that you can deploy universally. Specifically, running the Prometheus Node Exporter as a DaemonSet exposes host-level metrics on every node, which Prometheus collects and teams can view as aggregated data in dashboards.
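A minimal sketch of a Node Exporter DaemonSet might look like the following. The image tag, the object names, and the choice to share the host network and PID namespaces are assumptions; production setups usually add host filesystem mounts and scrape configuration that are omitted here.

```yaml
# Sketch only: the image tag and names are assumptions, not a vetted deployment.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      # Share the node's network and process namespaces so host metrics are visible.
      hostNetwork: true
      hostPID: true
      containers:
        - name: node-exporter
          image: prom/node-exporter:v1.8.1
          ports:
            # Node Exporter serves metrics on port 9100 by default.
            - name: metrics
              containerPort: 9100
```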

Alternatives to DaemonSets

Though DaemonSets are extremely capable, there are other ways to achieve similar workflows within Kubernetes. You could use init scripts to automatically run daemon processes on node startup. You could also leverage bare pods (created directly and tied to specified nodes) or static pods (defined by writing manifest files to a directory watched by the kubelet). These methods have their drawbacks, however, and the official documentation recommends a DaemonSet over bare pods, since a DaemonSet replaces pods that are deleted or terminated.

As you use DaemonSets

DaemonSets are a powerful tool for automating and streamlining many tedious Kubernetes processes. Harnessing these objects is fairly user friendly, and the level of configurability available makes them full-fledged solutions for your nodes. Their deployment hierarchy is easy to grasp, and they’re just as easy to deploy as they are to remove. Like Deployments and StatefulSets, DaemonSets are among the most popular workload controllers within the Kubernetes system.

As you begin to use this important controller, remember to ensure that your tags, labels, and YAML metadata are configured appropriately. If you follow best practices and ensure your use cases align with DaemonSet capabilities, you’ll be able to get the most out of your DaemonSets.
