Kubernetes is a widely used container orchestration tool, but its complexity brings its own problems. DevOps and cloud engineers deploying workloads to Kubernetes frequently deal with issues ranging from failed health checks and unhealthy nodes to volumes that fail to bind.

Luckily, Kubernetes has an active user base. Members of the community will readily answer questions and offer resources to help you through any situation.

This article will focus on one error message in particular: “failed to create pod sandbox”. You’ll learn what causes it to pop up when you’re creating a pod and how you can troubleshoot this error.

What causes FailedCreatePodSandBox?

This error is usually caused by networking issues, although poorly configured system resource limits can also trigger it. Since networking is one of the most complex parts of a Kubernetes setup, finding the exact cause requires a good understanding of Kubernetes networking.

If pods are stuck in the ContainerCreating state, your first step is to check the pod status and get more details with the kubectl describe pod <pod-name> command. The output should include a detailed error message, which you can use as a starting point for further investigation.
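As a rough sketch of this first check, the helper below narrows the event stream to the sandbox failures for a single pod. The function name sandbox_events is made up for illustration, and it assumes kubectl is already pointed at the affected cluster and namespace.

```shell
# Hypothetical helper: list only the FailedCreatePodSandBox events for one pod.
# Assumes kubectl is configured for the cluster and the pod's namespace.
sandbox_events() {
  pod_name="$1"
  kubectl get events \
    --field-selector "involvedObject.name=${pod_name},reason=FailedCreatePodSandBox"
}

# Usage: sandbox_events nginx
```

The same events appear at the end of the describe output, together with the rest of the pod's status.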

It’s important that you’re familiar with such error messages. In a production environment, you will need to fix the issue as soon as possible, and understanding common error messages will help you find the root cause quickly.

The following are common messages you could see with this error, along with possible root causes and ways to fix them.

Scenario 1: CNI not working on the node

A Container Network Interface (CNI) plugin configures networking between pods. If the CNI plugin isn’t running properly on a node, pods scheduled to that node can’t be given a network, so they stay stuck in the ContainerCreating state.

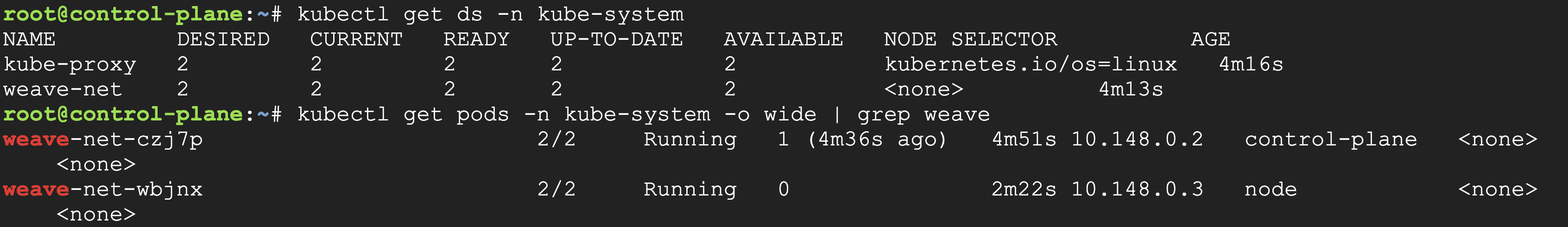

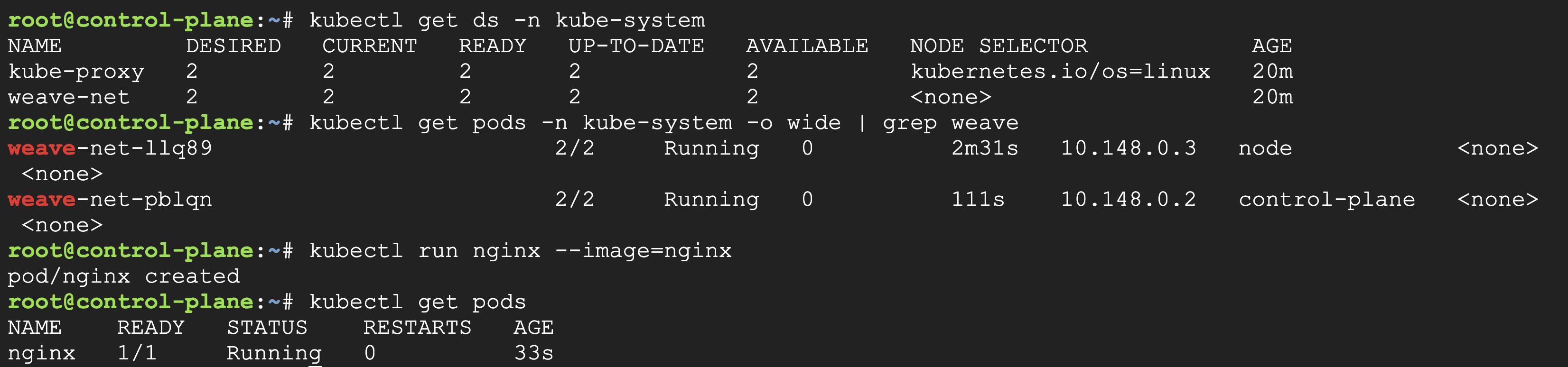

Here’s how to simulate, troubleshoot, and fix this issue. Say you have a two-node kubeadm cluster (one control plane node and one worker node) running Kubernetes version 1.23.4, with Weave Net as your CNI plugin. Weave Net runs as a DaemonSet.

To simulate the issue, you’ll prevent Weave Net from running on your worker node. Follow these steps:

Step 1

Label your control plane node with label weave=yes (control-plane is your node name):
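The original command isn't reproduced here. A minimal sketch of this step, assuming kubectl is configured for the cluster, could look like the following (the function name label_weave_node is illustrative only):

```shell
# Sketch of the labeling step. "control-plane" is the node name used in this
# article; substitute the name shown by `kubectl get nodes`.
label_weave_node() {
  node_name="${1:-control-plane}"
  kubectl label node "$node_name" weave=yes
  # Show the node's labels to confirm the change took effect.
  kubectl get node "$node_name" --show-labels
}

# Usage: label_weave_node control-plane
```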

Step 2

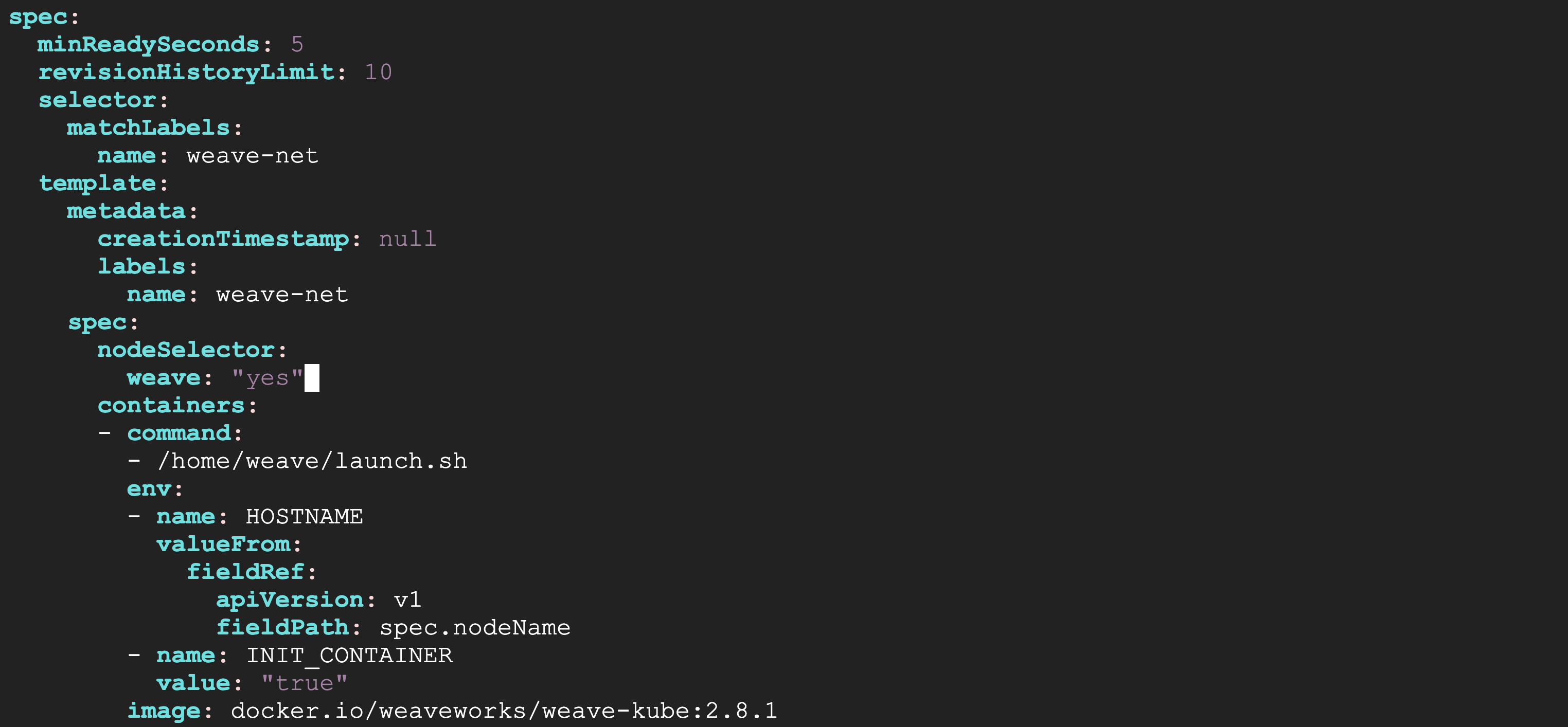

Edit the weave-net DaemonSet and add a node selector under spec.template.spec. This node selector will use label weave=yes, which ensures the CNI pod only runs on the control plane node:
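If you'd rather not open an editor, the same change can be sketched as a merge patch. The kube-system namespace is an assumption based on the standard Weave Net manifest, and the function name is hypothetical.

```shell
# Sketch: add nodeSelector weave=yes to the weave-net DaemonSet pod template.
# Assumes the DaemonSet lives in kube-system; adjust if your install differs.
pin_weave_to_labeled_nodes() {
  kubectl -n kube-system patch daemonset weave-net --type merge \
    -p '{"spec":{"template":{"spec":{"nodeSelector":{"weave":"yes"}}}}}'
}

# Usage: pin_weave_to_labeled_nodes
```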

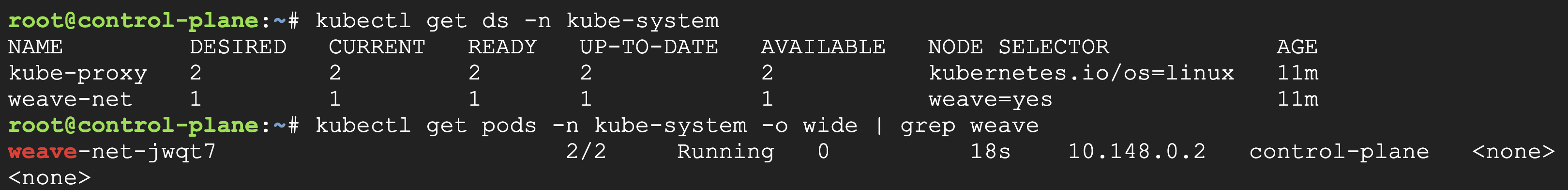

The CNI pod should be running only on the control plane node.

Step 3

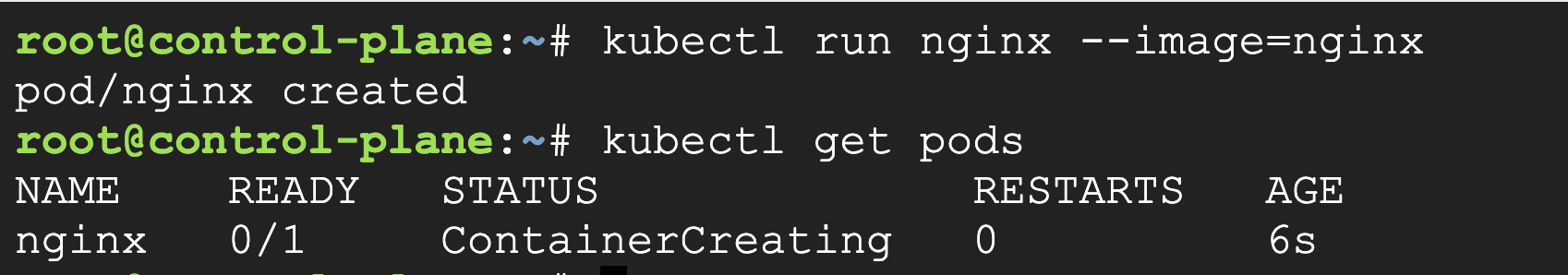

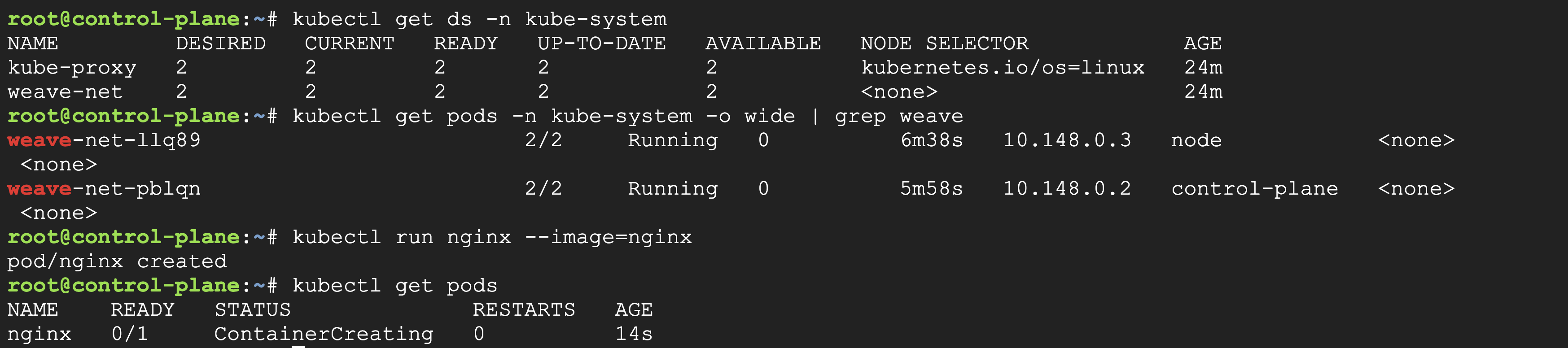

Now, try to run a pod using kubectl run nginx --image=nginx. If you check the pod status, it should be stuck in the ContainerCreating state.

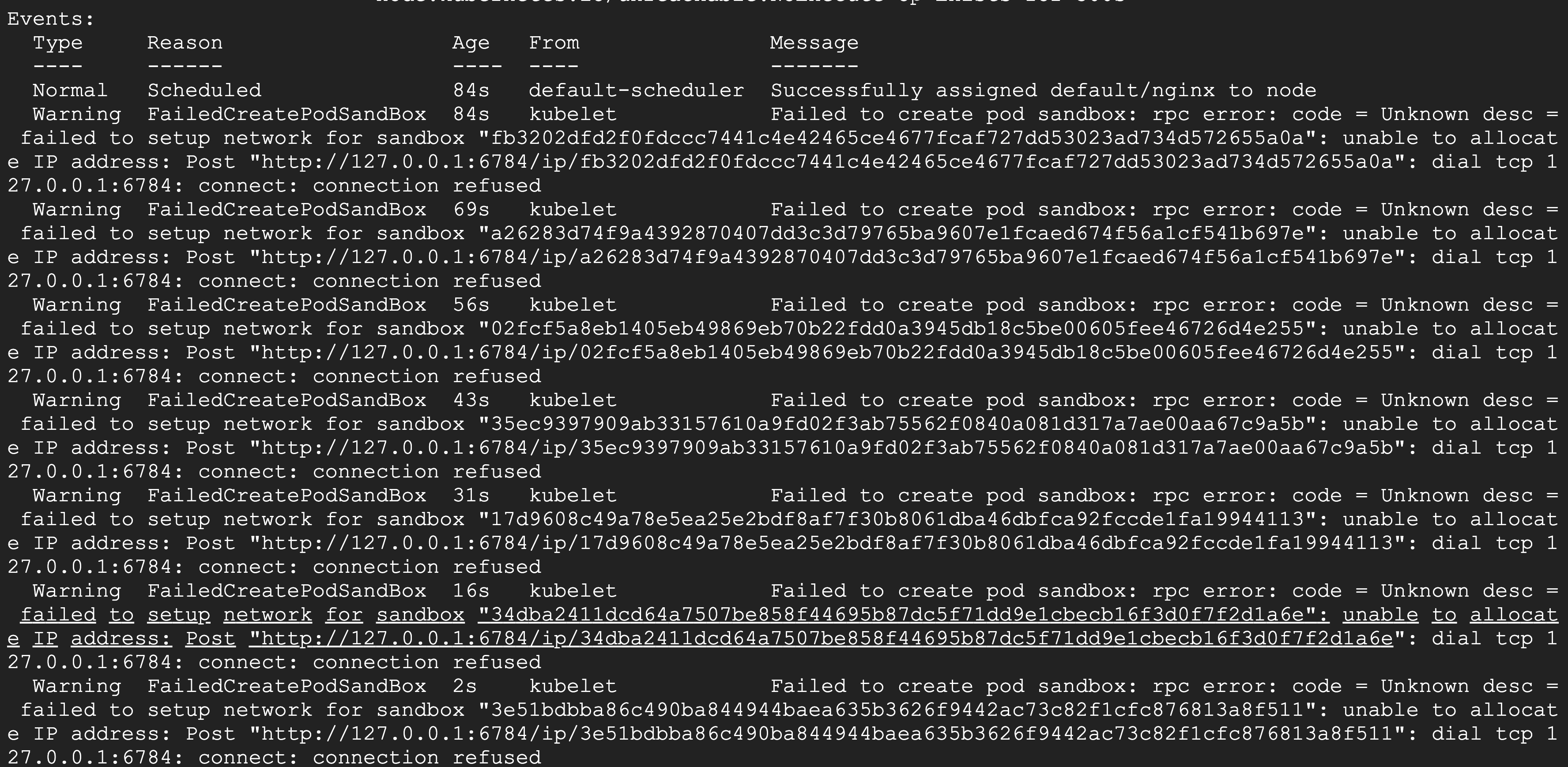

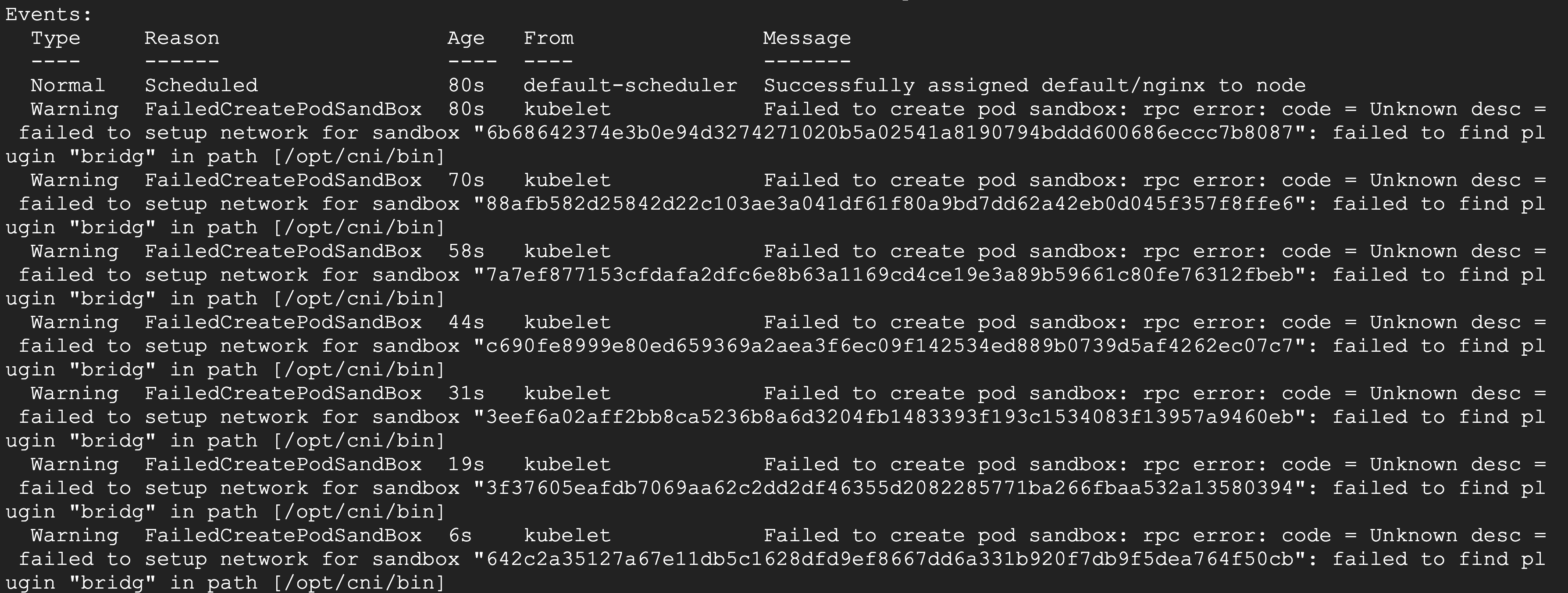

If you run the command kubectl describe pod nginx, you’ll see the error message “FailedCreatePodSandBox: failed to setup network for sandbox”.

Debugging and resolution

The error message indicates that the CNI plugin on the node where the nginx pod is scheduled is not functioning properly, so first check whether the CNI pod is running on that node. If it is running properly, you’ve eliminated one possible root cause.
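One way to run that check, sketched under the assumption that the CNI DaemonSet lives in the kube-system namespace, is to look up the pod's node and then list the system pods on it. The helper name cni_pods_for is hypothetical.

```shell
# Hypothetical helper: find the node a pod was scheduled to, then list the
# kube-system pods on that node so you can see whether a CNI pod is present.
cni_pods_for() {
  pod_name="$1"
  node_name="$(kubectl get pod "$pod_name" -o jsonpath='{.spec.nodeName}')"
  kubectl -n kube-system get pods -o wide \
    --field-selector "spec.nodeName=${node_name}"
}

# Usage: cni_pods_for nginx
```

If no weave-net pod shows up in the list for that node, the CNI plugin isn't running there.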

In this case, once you remove the nodeSelector from the DaemonSet definition and ensure the CNI pod is running on the node, the nginx pod should be running fine.
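A possible way to undo the simulation from the command line is a JSON patch that removes the selector. This is a sketch that assumes weave=yes was the only node selector on the DaemonSet and that it runs in kube-system; the function name is illustrative.

```shell
# Sketch: remove the nodeSelector added during the simulation, then wait for
# the DaemonSet to roll out to every eligible node.
restore_weave_daemonset() {
  kubectl -n kube-system patch daemonset weave-net --type json \
    -p '[{"op":"remove","path":"/spec/template/spec/nodeSelector"}]'
  kubectl -n kube-system rollout status daemonset/weave-net
}

# Usage: restore_weave_daemonset
```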

Scenario 2: Missing or incorrect CNI configuration files

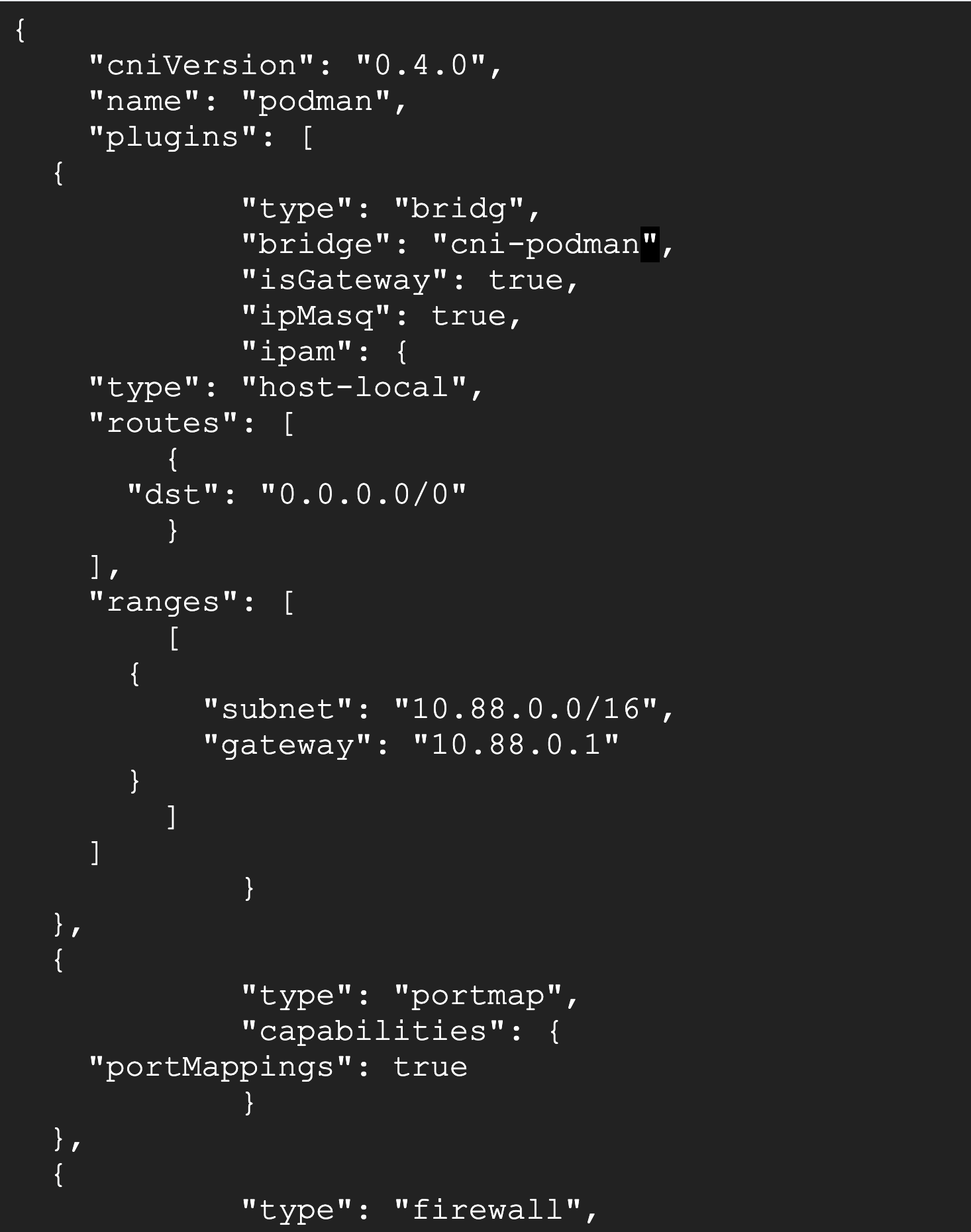

Even if the CNI pod is running, you may still experience problems if the CNI configuration files have errors. To simulate this, you’ll make some changes in the CNI configuration files, which are stored under the /etc/cni/net.d directory.

Step 1

Log in to the node and open the CNI configuration file under /etc/cni/net.d in a text editor. In that file, change bridge to bridg and cni-podman0 to cni-podman.

At this point, the Weave pod will still be running on the node.

Step 2

If you run the kubectl run nginx --image=nginx command again, the pod will be stuck in the ContainerCreating state.

Step 3

Check pod status with the kubectl describe pod nginx command, and you’ll see the error message: failed to find plugin "bridg" in path [/opt/cni/bin].

Debugging and resolution

In this scenario, the CNI pod is running on the node, but you’re still facing the same issue. The next logical step is to verify the CNI configuration files. You can check the configuration files of other nodes of the cluster and verify if those files are similar to the ones in the problematic node. If you find any issue with the configuration files, copy the configuration files from the other nodes to that node and then try recreating the pod.
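Before copying files around, a quick sanity check on the node can help. The sketch below is a rough heuristic rather than a real parser: it pulls every "type" value out of the configuration files with grep and checks for a matching executable in the plugin directory. The function name check_cni_plugins is hypothetical, and the default paths are the ones mentioned in this article.

```shell
# Rough check: every plugin "type" named in the CNI config files should have a
# matching executable in the plugin directory. Text matching only, so unusual
# formatting or unrelated "type" keys may give misleading results.
check_cni_plugins() {
  conf_dir="${1:-/etc/cni/net.d}"
  bin_dir="${2:-/opt/cni/bin}"
  status=0
  for plugin in $(grep -ho '"type"[[:space:]]*:[[:space:]]*"[^"]*"' "$conf_dir"/* 2>/dev/null \
      | sed 's/.*"\([^"]*\)"$/\1/' | sort -u); do
    if [ -x "$bin_dir/$plugin" ]; then
      echo "ok: $plugin"
    else
      echo "missing: $plugin"
      status=1
    fi
  done
  return "$status"
}

# Usage (on the node): check_cni_plugins
```

With the typo from this scenario in place, the check would report bridg as missing, which matches the error in the pod events.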

Scenario 3: Insufficient number of available IP addresses

You’ll use an AWS EKS cluster to demonstrate this issue since it’s easy to simulate in a cloud environment. Here, the VPC where your EKS cluster is running has a limited number of IP addresses available.

Step 1

Connect to the EKS cluster and create some pods using the following command:
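The original command isn't shown here. As an assumption, a simple loop like the one below would produce pods named pod1 through pod15, which matches the pod15 name used later in this scenario. The function name and the nginx image are illustrative choices.

```shell
# Sketch: create a batch of numbered pods (pod1, pod2, ...).
# The count defaults to 15 to match the example in this article.
create_pods() {
  count="${1:-15}"
  i=1
  while [ "$i" -le "$count" ]; do
    kubectl run "pod${i}" --image=nginx
    i=$((i + 1))
  done
}

# Usage: create_pods 15
```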

Step 2

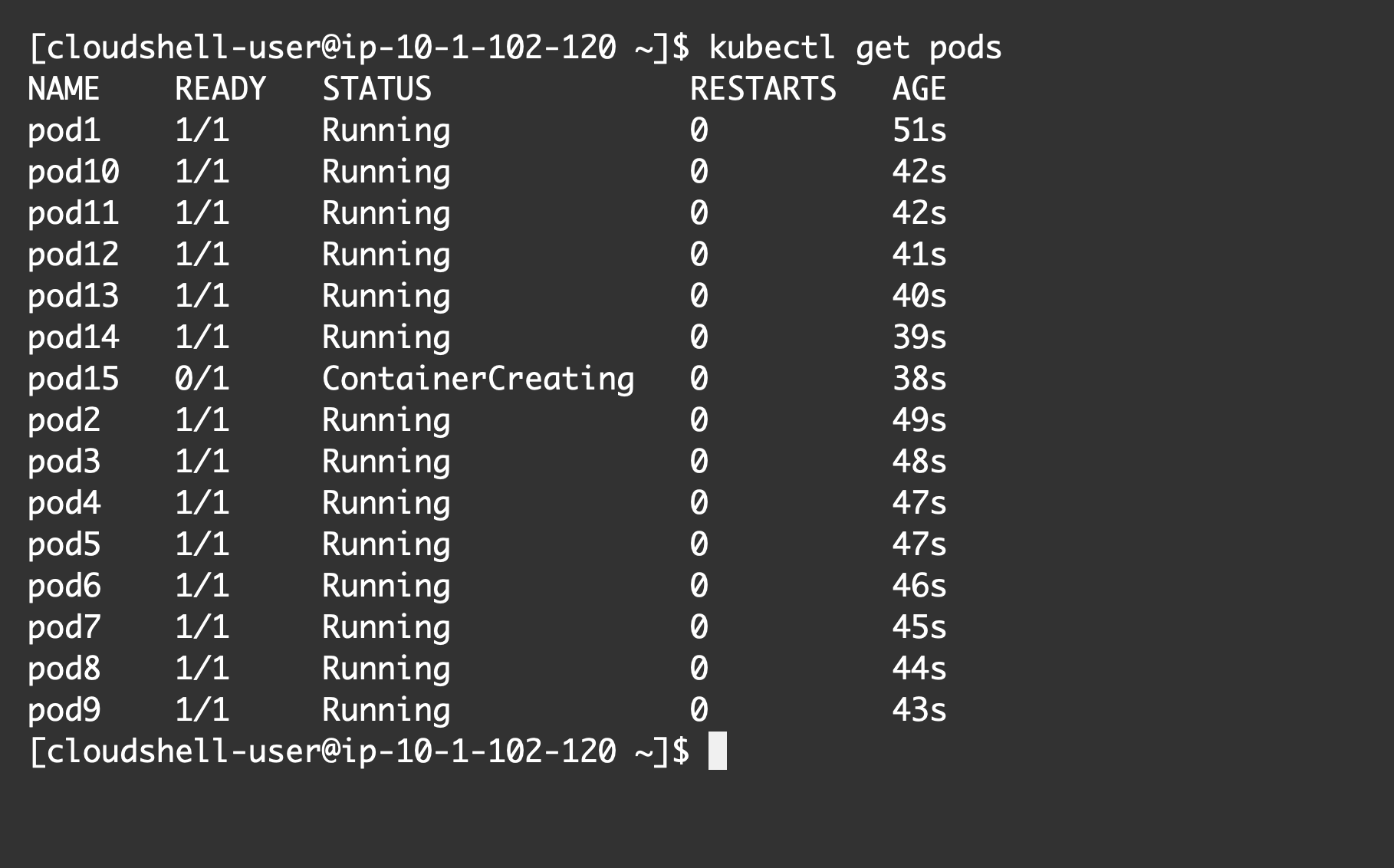

Check the status of your pods. You’ll see that all pods except one (in this example, pod15) are running.

Step 3

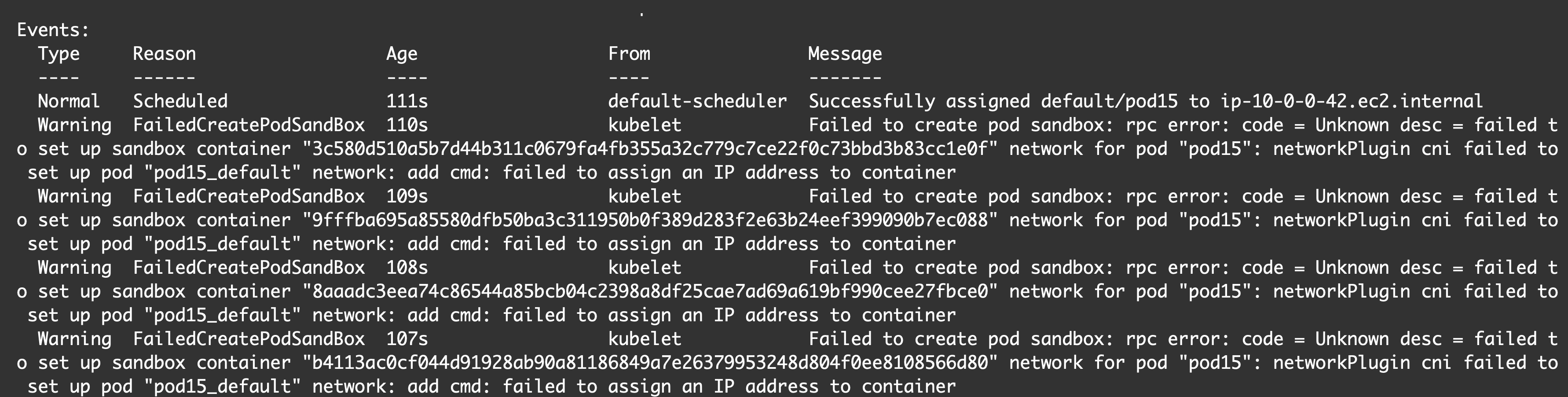

If you check the status of the pod in question by running the command kubectl describe pod pod15, you’ll see it wasn’t able to get an IP address.

Debugging and resolution

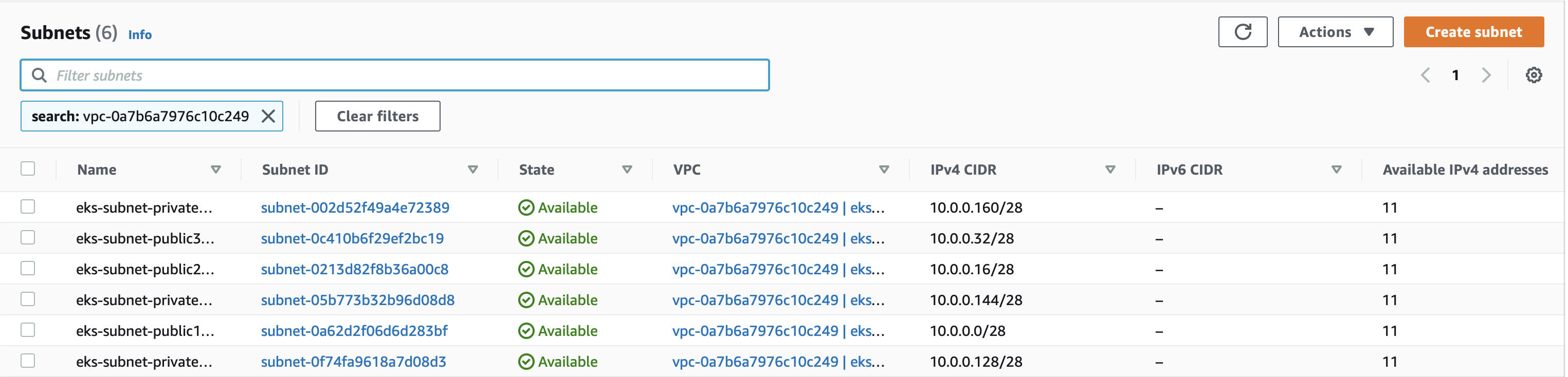

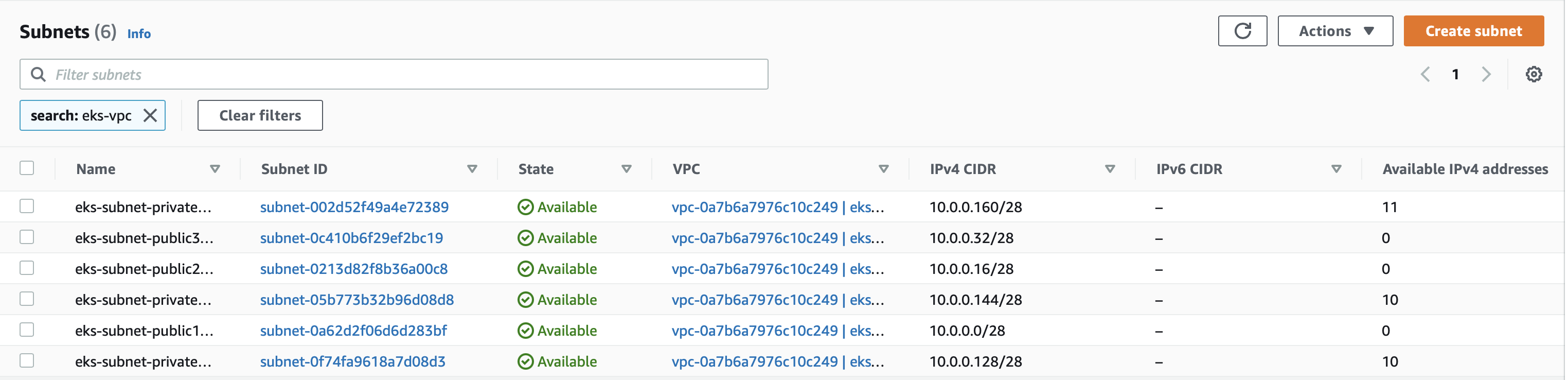

This problem happens when the VPC subnets run out of available IP addresses. Checking the AWS VPC console here shows that none of the subnets where your nodes are running has an available IP address.
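The same information is available from the AWS CLI. The sketch below assumes the CLI is installed and authenticated, and that you know the ID of the VPC hosting the cluster; the function name is hypothetical.

```shell
# Sketch: show the free address count for every subnet in a VPC.
# Pass the VPC ID of the cluster as the first argument.
subnet_free_ips() {
  vpc_id="$1"
  aws ec2 describe-subnets \
    --filters "Name=vpc-id,Values=${vpc_id}" \
    --query 'Subnets[].[SubnetId,CidrBlock,AvailableIpAddressCount]' \
    --output table
}

# Usage: subnet_free_ips <your-vpc-id>
```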

To fix this issue, you can either scale down some workloads by running fewer pods, or you can create new subnets with wider CIDR ranges to run the EKS cluster. The best solution is to always plan the VPC and subnet CIDR range ahead so that there are enough IP addresses available for the predicted workload.
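When planning CIDR ranges, it helps to remember that AWS reserves five addresses in every subnet, so the usable count is smaller than the raw size of the block. A small sketch of that arithmetic follows; the function name is made up, and it takes only the prefix length.

```shell
# Usable addresses in an AWS subnet: 2^(32 - prefix) minus the 5 addresses
# AWS reserves in each subnet.
usable_subnet_ips() {
  prefix="$1"
  echo $(( (1 << (32 - prefix)) - 5 ))
}

usable_subnet_ips 24   # prints 251
usable_subnet_ips 28   # prints 11
```

Nodes, load balancers, and other resources in the subnet draw from the same pool, so the number of addresses left for pods is lower still.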

Scenario 4: Suboptimal system resource limits configuration

As noted earlier, networking issues aren’t the only root cause of this error. Poorly configured system resource limits can be another. To demonstrate this, switch back to your kubeadm cluster.

Step 1

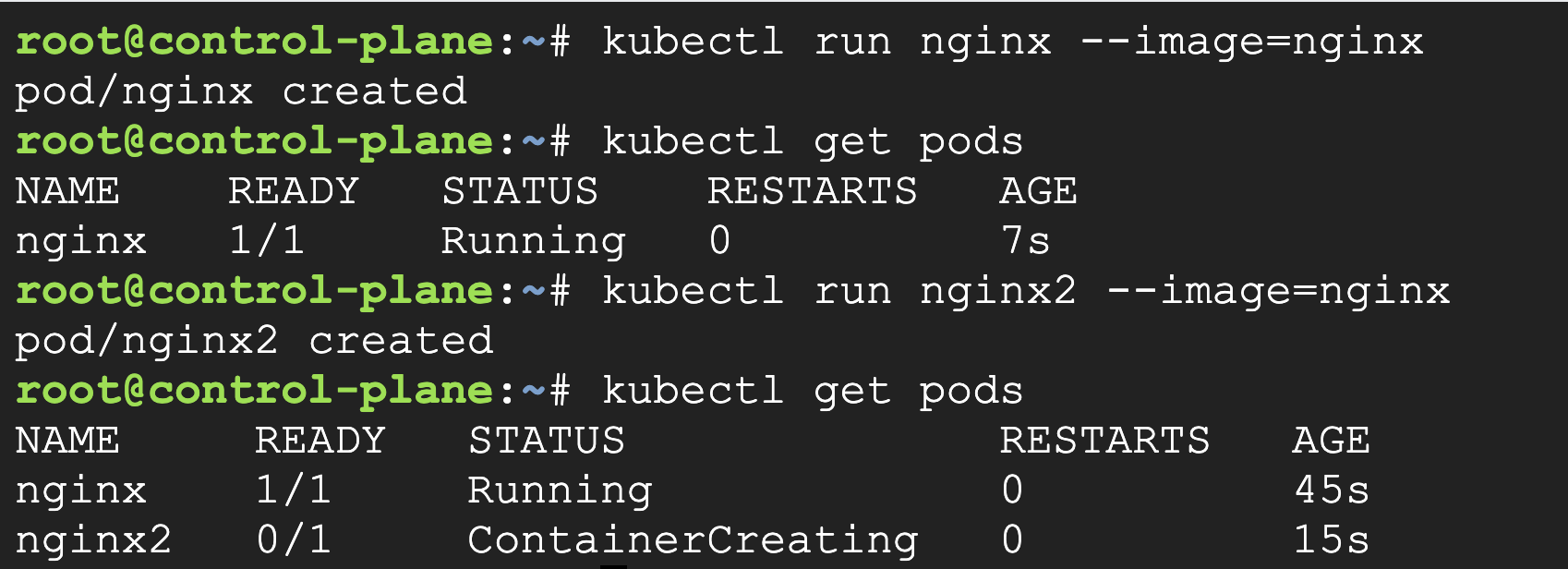

If you run the kubectl run nginx --image=nginx command, you’ll see it’s running fine.

Step 2

Connect to the node and run echo 32 > /proc/sys/fs/file-max as root. This lowers the system-wide limit on open file handles on the Linux host to 32.

Step 3

Run the kubectl run nginx2 --image=nginx command and check the pod status. It will be stuck in the ContainerCreating state.

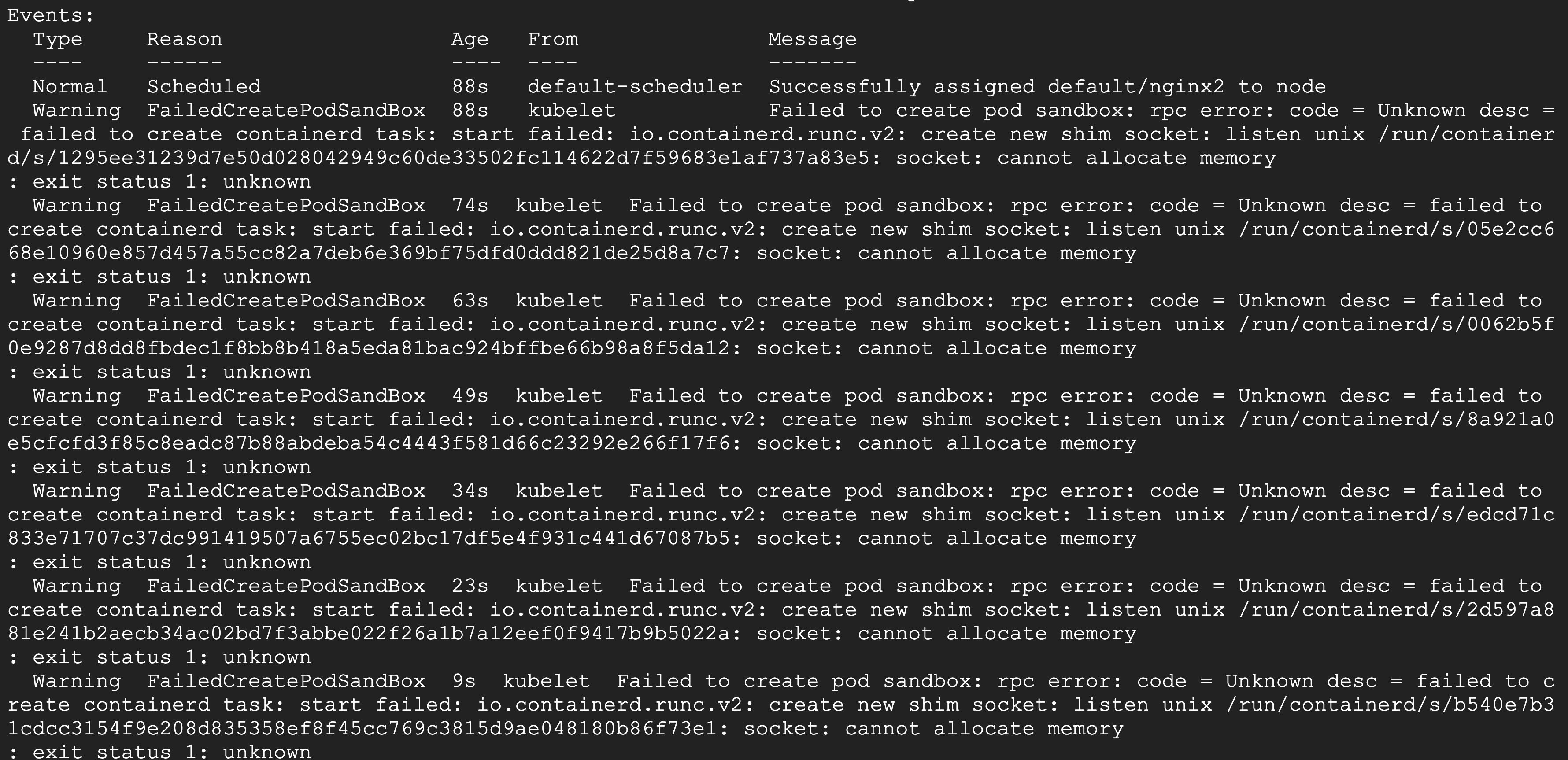

If you check the pod status with kubectl describe pod nginx2, you’ll see the pod encountered some issues when creating new shim sockets.

Debugging and resolution

If you see socket-related errors in pod status messages, networking is a less likely cause, and you should check the system resource limits configured on the node. Make sure the maximum number of open files and processes is configured appropriately. If a limit is too low, raise it to a sufficient value, and if zombie processes are accumulating, clean them up (for example, by restarting the parent process that left them behind).
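To see how close the node is to its file handle limit, you can read /proc/sys/fs/file-nr, which holds the allocated handles, the unused handles, and the system-wide maximum. The helper below is a minimal sketch; its name is hypothetical, and the optional path argument exists only so it can be tried against a sample file.

```shell
# Sketch: report allocated file handles against the system-wide maximum.
# file-nr holds three fields: allocated, unused, and maximum.
file_handle_usage() {
  file_nr="${1:-/proc/sys/fs/file-nr}"
  read -r allocated _unused max < "$file_nr"
  echo "allocated=${allocated} max=${max}"
}

# Usage (on the node): file_handle_usage
```

If the allocated count sits at or near the maximum, new sockets and files can't be opened, which is consistent with the shim socket errors described above.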

In this scenario, if you increase the open file limit by running the command echo 4096 > /proc/sys/fs/file-max, your pod will be running fine.

Final thoughts

As you’ve seen, there are several different scenarios that can cause the FailedCreatePodSandBox error when you attempt to create a pod. You’ve also seen how misconfigured networking often plays a role in this error, although it may not be the only culprit.

In general, if you see the FailedCreatePodSandBox error, first check if CNI is working on the node and if all the CNI configuration files are correct. You should also verify that the system resource limits are properly set. If the cluster is running on a cloud environment, check if the network subnets have a sufficient number of IP addresses available. These details should help you solve this issue and avoid it in the future.

If you’re looking for a way to build a monitoring dashboard or processes to catch errors and failures, try using Airplane. With Airplane, you can transform scripts, queries, APIs, and more into powerful workflows and UIs. Airplane also offers Engineering Workflows, designed for engineering-centric use cases, and Views, a UI framework that helps users build UIs within minutes.

To build a debugging dashboard within minutes using Airplane, sign up for a free account or book a demo.