Your new PR campaign has exploded, and the requests are crushing your application server. You need to scale your service—quickly—to accommodate the millions of users joining in. In situations like these, load balancers are a crucial tool. They’ve enabled the web to scale to the heights it has reached today, serving millions without a hitch.

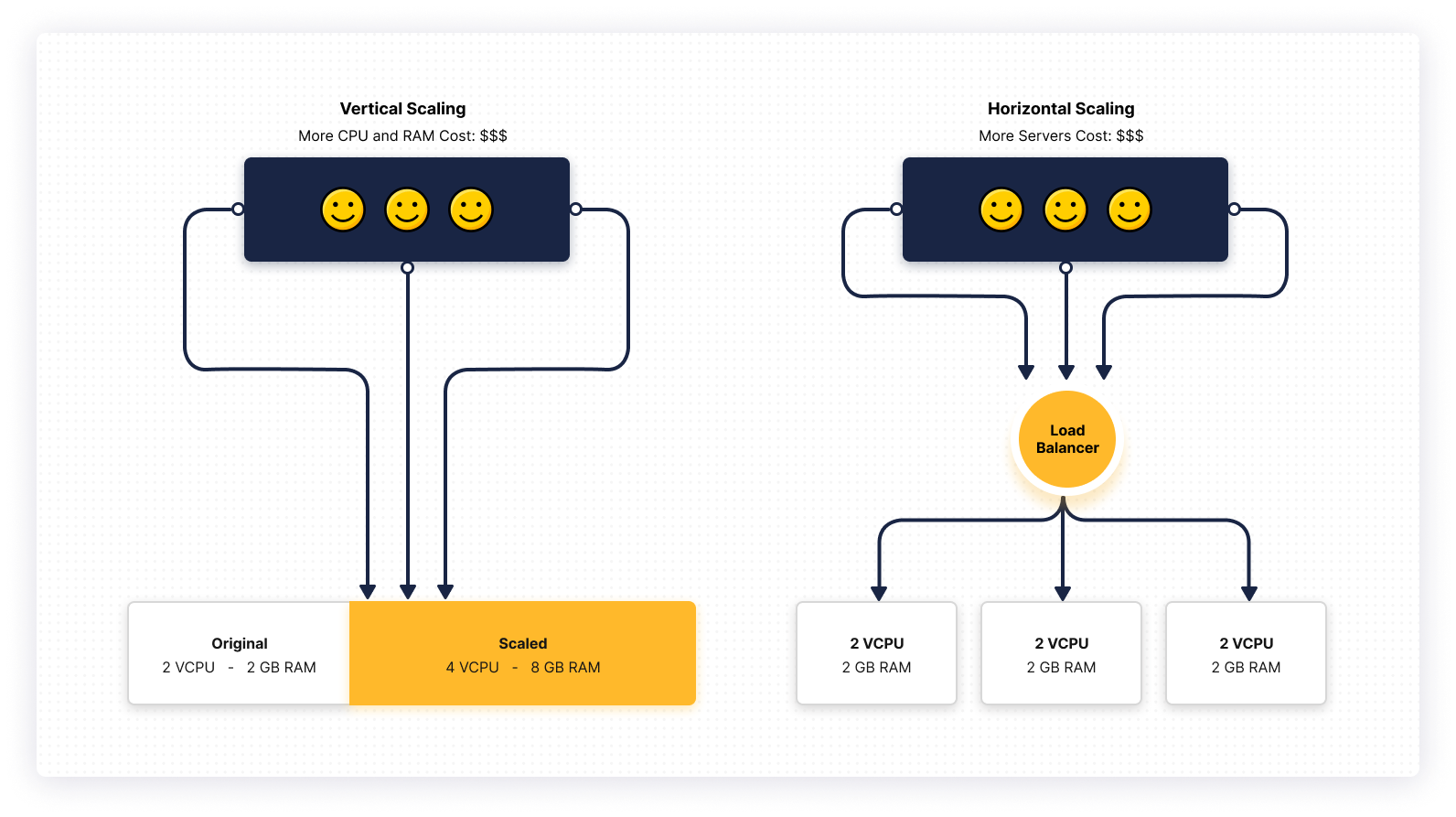

A load balancer accepts incoming requests or connections and distributes them across a pool of backend servers. As load increases, a single application server’s ability to handle requests efficiently is capped by the physical capabilities of its hardware. You can get past that cap by scaling the application, either vertically or horizontally.

Vertical scaling works by adding hardware resources to the server, such as CPU cores and memory, so that it can handle more requests. However, vertical scaling has physical limits and quickly becomes cost-prohibitive. Horizontal scaling works by adding more servers and spreading requests across them, and load balancers are central to this approach. Scaling your application horizontally is highly effective and low cost, but can come with various technical challenges.
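To make the idea of horizontal scaling concrete, here is a minimal sketch of a round-robin pool. The `BackendPool` class and the `app-1`, `app-2`, `app-3` names are hypothetical, made up for illustration; a real load balancer would also handle health checks, timeouts, and concurrency.

```python
from collections import Counter
from itertools import count


class BackendPool:
    """A toy pool of backend servers with round-robin selection."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._counter = count()  # monotonically increasing request counter

    def add_backend(self, name):
        # Horizontal scaling: grow the pool instead of growing one machine.
        self.backends.append(name)

    def pick(self):
        # Round-robin: request i goes to backend (i mod pool size).
        return self.backends[next(self._counter) % len(self.backends)]


def requests_per_backend(pool, total_requests):
    """Send total_requests through the pool and count hits per backend."""
    return Counter(pool.pick() for _ in range(total_requests))


pool = BackendPool(["app-1"])
single = requests_per_backend(pool, 1200)  # one server takes everything

pool.add_backend("app-2")
pool.add_backend("app-3")
scaled = requests_per_backend(pool, 1200)  # same load, three servers

print(single)
print(scaled)
```

With one backend, all 1,200 requests land on the same server. After two more are added, the same load is split three ways, which is the whole point of scaling out behind a load balancer.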

Types of load balancers

Load balancers can be differentiated by how they’re deployed and where they’re used.

Hardware load balancers

These are hardware appliances built using accelerators such as application-specific integrated circuits (ASICs) or field-programmable gate arrays (FPGAs). Offloading request processing to this dedicated hardware reduces the burden on the main CPU. You can buy them from vendors such as F5 or Citrix and deploy them on your premises. They’re strong performers, but they require hands-on management, and because they’re hardware based, they can be difficult to scale, which makes the overall system less flexible.

Cloud load balancers

Public cloud platforms such as AWS, GCP, and Microsoft Azure offer high-performance load balancers hosted in their data centers, combining the performance of hardware load balancers with the cost and convenience of the cloud. Cloud load balancers are inexpensive, and charges are based on usage. For example, AWS bills Elastic Load Balancing by the hour, and a load balancer can cost as little as $20 per month.

Software load balancers

Software load balancers can be deployed and managed on your own servers. There are both commercial and open source options. Software load balancing is very flexible: it can be deployed on commodity servers, can run alongside other services without needing dedicated machines, and is easy to instrument and debug.

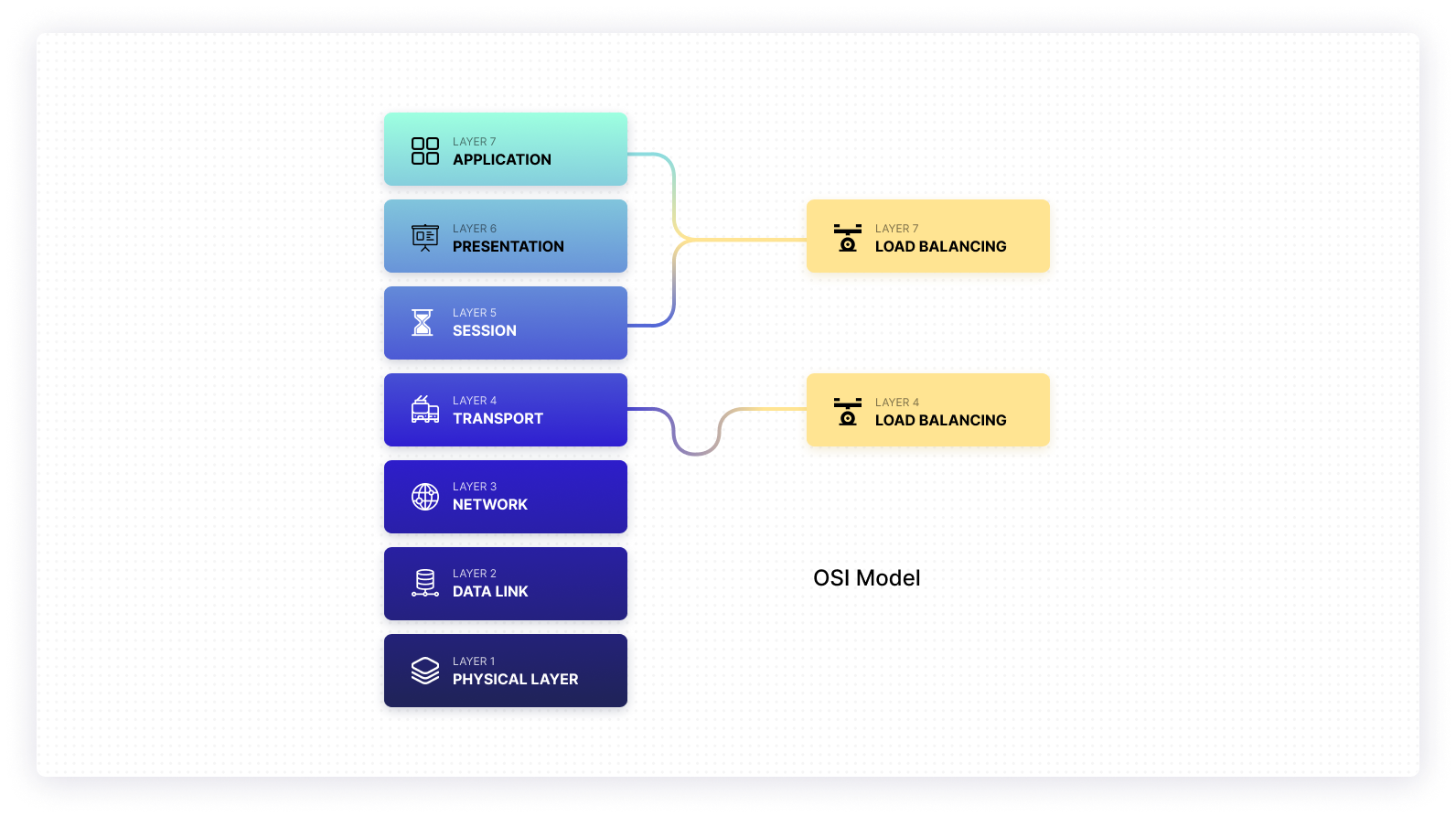

Load balancers can also be distinguished by the kind of requests they handle.

Following the OSI model, load balancers operate at either layer 4 (the transport layer) or layer 7 (the application layer). An L4 load balancer distributes TCP or UDP connections, while an L7 load balancer distributes HTTP requests. Which layer to choose depends primarily on your application’s use case.

A simple web app that runs only HTTP web servers is well served by L7 load balancing. In contrast, an IoT application running MQTT servers on the internet speaks a non-HTTP protocol over TCP, so it would do better with an L4 load balancer.
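The difference between the two layers comes down to what the balancer can see when it makes a decision. The sketch below is illustrative only: the `Connection` and `HttpRequest` types, the backend names, and the `/api/` routing rule are all assumptions, not the behavior of any particular product.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """What an L4 balancer can see: addresses, ports, and protocol."""
    src_ip: str
    src_port: int
    dst_port: int
    protocol: str  # "tcp" or "udp"


@dataclass(frozen=True)
class HttpRequest:
    """What an L7 balancer can additionally see after parsing HTTP."""
    connection: Connection
    host: str
    path: str


# Hypothetical backend pools.
MQTT_BROKERS = ["mqtt-1:1883", "mqtt-2:1883"]
API_SERVERS = ["api-1:8080", "api-2:8080"]
STATIC_SERVERS = ["static-1:8080"]


def pick_l4(conn, backends):
    # L4: hash transport-level fields only; the payload is never inspected.
    key = f"{conn.src_ip}:{conn.src_port}:{conn.dst_port}:{conn.protocol}"
    return backends[zlib.crc32(key.encode()) % len(backends)]


def pick_l7(req):
    # L7: choose a pool from the HTTP path, then spread within that pool.
    pool = API_SERVERS if req.path.startswith("/api/") else STATIC_SERVERS
    return pick_l4(req.connection, pool)


mqtt_conn = Connection("203.0.113.7", 51000, 1883, "tcp")
web_conn = Connection("203.0.113.7", 51001, 443, "tcp")

print(pick_l4(mqtt_conn, MQTT_BROKERS))
print(pick_l7(HttpRequest(web_conn, "example.com", "/api/users")))
print(pick_l7(HttpRequest(web_conn, "example.com", "/index.html")))
```

The L4 function works for MQTT, or any other TCP or UDP protocol, because it never looks past the connection metadata. The L7 function can make content-aware decisions, but only for traffic it knows how to parse.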

Open source load balancers

Open source software has become synonymous with high quality, reliability, flexibility, and security. Load balancers are no exception to this. While the closed source load balancers offered by AWS, GCP, and Azure are fast and reliable, they offer limited customization and can cause lock-in. On the other hand, open source load balancers provide best-in-class performance and multiple deployment options, including both cloud and on-prem support. In addition, popular open source software projects have the advantage of large communities driving their use. This is especially useful for crucial components such as load balancers, making it easy to build a production-ready setup using established best practices and community knowledge.

Here’s an overview of the top open source load balancers:

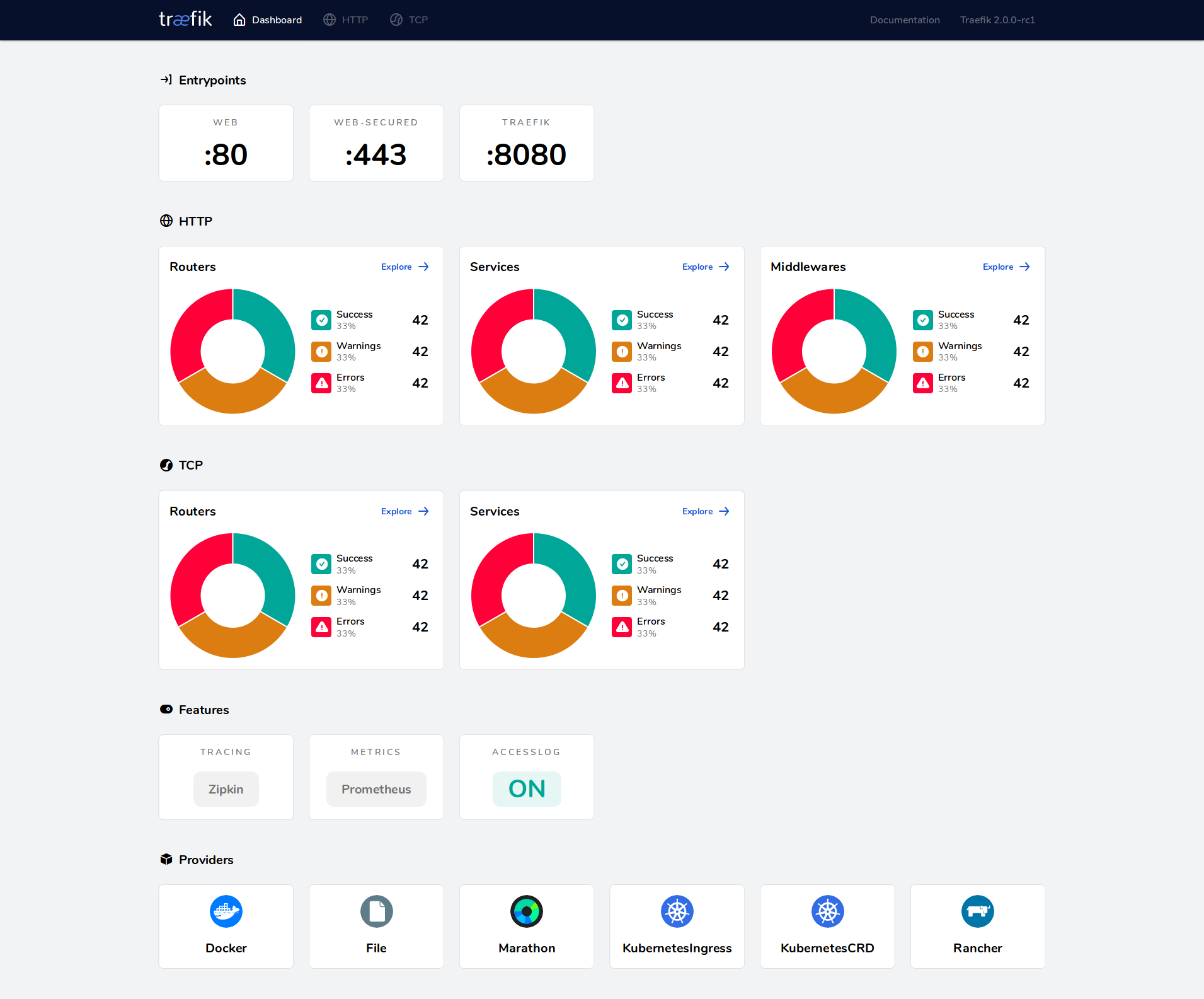

Traefik

Traefik is a reverse proxy and L7 load balancer. Written in Go, it’s designed to support microservices and container-powered services in a distributed system. It has native support for Docker Swarm and Kubernetes orchestration, as well as service registries such as etcd or Consul. It also offers extensive support for WebSocket, HTTP/2, and gRPC services. Traefik integrates well with popular monitoring systems such as Prometheus and Datadog, and provides metrics, tracing information, and logs for effective monitoring. A REST API enables a real-time control panel. Traefik Labs also offers an enterprise version of Traefik, with features such as OpenID and LDAP authentication.

HAProxy

HAProxy is an L4 and L7 load balancer for TCP and HTTP traffic. It’s a well-established, open source solution used by companies such as Airbnb and GitHub. At L7, it supports HTTP/2 and gRPC backends. Thanks to its long history, large community, and reliable nature, HAProxy has become the de facto open source load balancer. It ships with many Linux distributions, and is even deployed on many cloud platforms.

NGINX

NGINX is a battle-tested piece of software written in C and initially introduced in 2004. Since then, it has grown to be an all-in-one reverse proxy, load balancer, mail proxy, and HTTP cache. It offers L7 load balancing support for HTTP, HTTPS, FastCGI, uWSGI, SCGI, Memcached, and gRPC backends. NGINX’s worker process model is highly scalable and is deployed at large scale in organizations such as Adobe, Cloudflare, and OpenDNS. While HAProxy and NGINX are both robust, well-respected solutions, HAProxy is limited to load balancing and proxying, whereas NGINX can also be used as a high-performance file server.

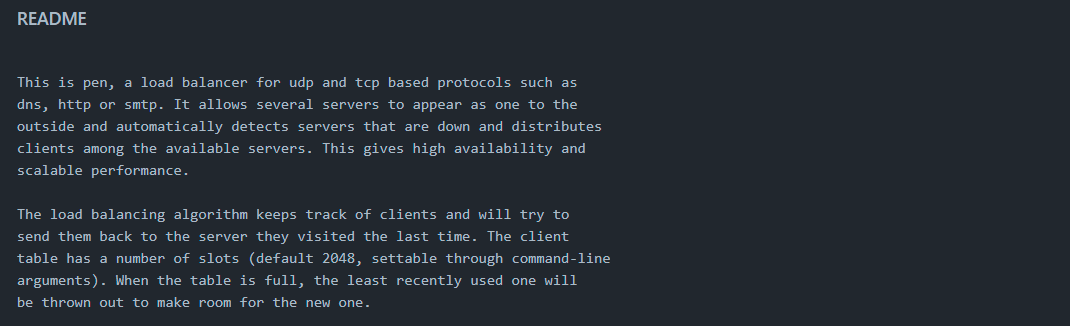

Pen

Pen is an L4 load balancer written in C that supports TCP and UDP traffic. It is tested on Windows (as a service), Linux, FreeBSD, and Solaris, but should work on any Unix-based system. It implements a tweaked round-robin algorithm that keeps track of clients and sends them back to the server they visited earlier. This client stickiness matters when backend servers hold per-client state such as sessions, because it keeps a client’s requests on the server that already has that state.
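The general idea of round-robin with client tracking can be sketched in a few lines. This is not Pen’s actual implementation; the `StickyRoundRobin` class and the backend names are hypothetical, and a production balancer would also bound the size of the client table and react to failed servers.

```python
class StickyRoundRobin:
    """Round-robin for new clients; returning clients go back to their server."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0
        self._assigned = {}  # client IP -> backend chosen on first visit

    def pick(self, client_ip):
        # Returning client: reuse the backend it was given earlier.
        if client_ip in self._assigned:
            return self._assigned[client_ip]
        # New client: take the next backend in rotation and remember it.
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        self._assigned[client_ip] = backend
        return backend


lb = StickyRoundRobin(["backend-a", "backend-b"])
first = lb.pick("10.0.0.1")   # new client, first backend in rotation
second = lb.pick("10.0.0.2")  # new client, next backend in rotation
again = lb.pick("10.0.0.1")   # returning client, same backend as before
print(first, second, again)
```

Only first-time clients advance the rotation, so new clients are still spread evenly while existing clients keep their server.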

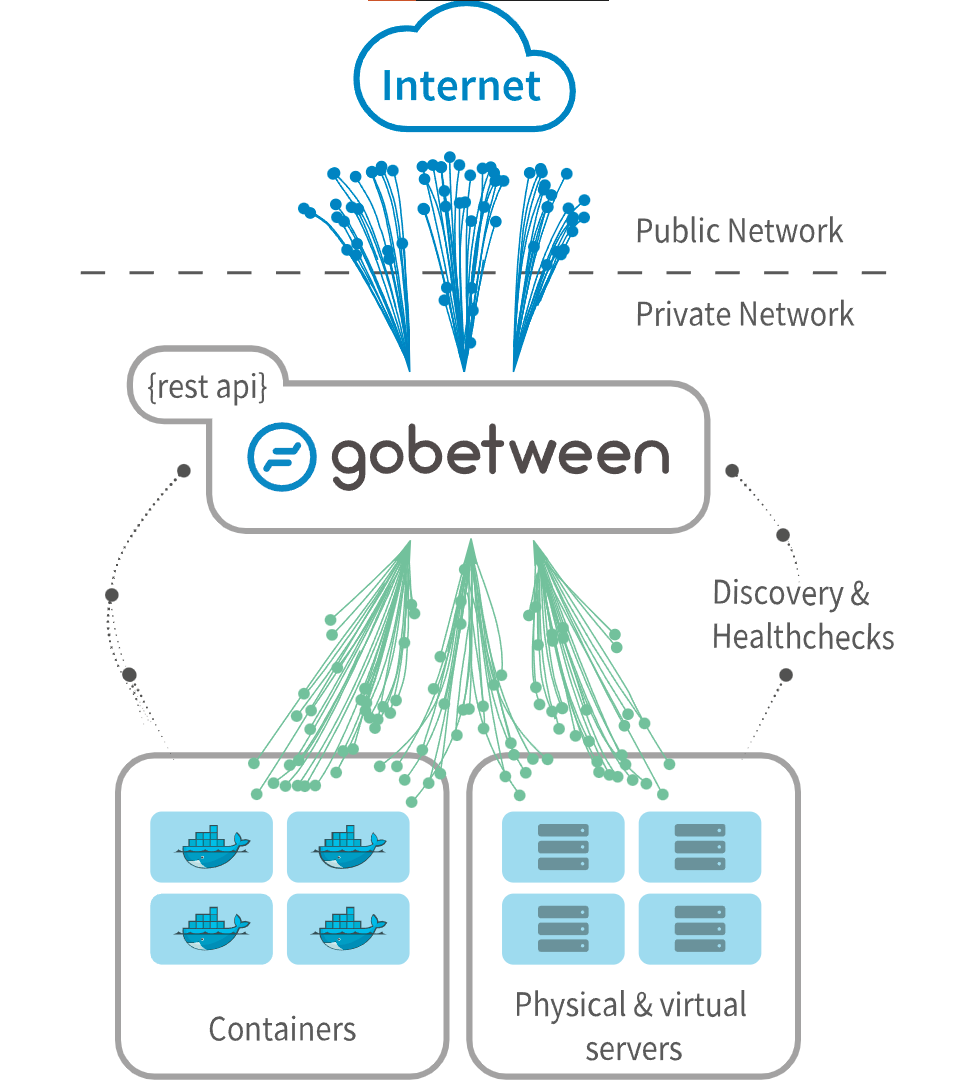

Gobetween

Gobetween is a container- and microservice-focused L4 load balancer and reverse proxy. It is tested on Windows, Linux, and Mac, and is straightforward to deploy in both native and containerized environments, requiring only a single executable binary and a JSON config file. Similar to Traefik, it offers real-time monitoring and management via a REST API. Service discovery is supported by various means, including DNS SRV records, Docker and Docker Swarm, Consul, and more. Gobetween is designed to simplify the automatic discovery of services in a microservices environment.
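As a rough illustration of discovery through DNS SRV records, the sketch below turns SRV record data (priority, weight, port, target) into a list of backends. It is not Gobetween’s code, the hostnames are hypothetical, and the record strings are hard-coded rather than fetched from a real DNS server.

```python
from typing import NamedTuple


class SrvRecord(NamedTuple):
    priority: int  # lower value is preferred
    weight: int    # relative share among records of equal priority
    port: int
    target: str


def parse_srv(rdata):
    """Parse SRV record data of the form 'priority weight port target'."""
    priority, weight, port, target = rdata.split()
    return SrvRecord(int(priority), int(weight), int(port), target.rstrip("."))


def preferred_backends(records):
    """Return 'host:port' for the records in the most preferred priority group."""
    if not records:
        return []
    best = min(r.priority for r in records)
    group = [r for r in records if r.priority == best]
    # Higher weight first, purely to get a stable, readable ordering.
    group.sort(key=lambda r: -r.weight)
    return [f"{r.target}:{r.port}" for r in group]


# Hypothetical answer for a lookup of _web._tcp.example.internal
answers = [
    "10 60 8080 app-1.example.internal.",
    "10 40 8080 app-2.example.internal.",
    "20 0 8080 standby.example.internal.",
]
backends = preferred_backends([parse_srv(a) for a in answers])
print(backends)
```

Because the backend list comes from DNS rather than a static config file, adding or removing a service instance only requires updating the records.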

OpenELB

OpenELB, formerly known as Porter, is a Cloud Native Computing Foundation (CNCF) Sandbox project designed for exposing LoadBalancer-type Kubernetes services in bare-metal, edge, and virtualized environments. In Kubernetes, a service can be exposed externally by giving it the LoadBalancer type. In a cloud vendor’s Kubernetes deployment, the load balancer behind such a service is usually one of the vendor-operated cloud load balancers noted previously. OpenELB is an open source implementation that brings the same seamless experience of managing Kubernetes services to bare-metal deployments.

MOSN

MOSN is an L4 and L7 load balancer designed for cloud native and service mesh environments. It works with the xDS API, which service meshes such as Istio use, to discover services automatically. MOSN supports TCP, HTTP, and RPC protocols such as SOFARPC.

MetalLB

MetalLB is another CNCF project, at the Sandbox level. It implements the LoadBalancer service type for bare-metal Kubernetes clusters, and is designed to bring the seamless experience of cloud load balancers to bare-metal deployments by integrating with standard network equipment. To announce services, it uses standard network protocols such as ARP, NDP, and BGP. It has a Layer 2 mode that responds to ARP requests for service IPs on a regular IPv4 Ethernet network. In this mode, all traffic for a service IP arrives at a single node, and from there it is spread across the service’s pods.

Envoy

Envoy is a high-performance distributed proxy written in C++. It can be used as an L4 or L7 load balancer, and is designed for building large distributed service meshes and microservices. It simplifies observability in large-scale systems: services can be connected into an Envoy mesh, and Envoy has native support for distributed tracing and offers wire-level monitoring of MongoDB, DynamoDB, and others. There is first-class support for HTTP/2 and gRPC across the mesh, and Envoy provides APIs for real-time management of the service mesh.

Katran

Katran is a C++ library built and used by Facebook for building high-performance L4 load balancers. It achieves its performance by processing packets in the Linux kernel using XDP and eBPF, working much closer to the hardware than other load balancers, and scales linearly with the networking hardware’s capability. As an L4 load balancer, Katran is more resilient in the face of network-related issues, and so can be useful as a front tier that spreads traffic across a fleet of L7 load balancers.

Final thoughts

The load-balancing algorithms offered are one factor in choosing the right load balancer for you, but most load balancers offer similar algorithms, such as IP hashing, round-robin, and random selection. In a cloud-native world with large and distributed systems, it’s important to consider other capabilities and integrations. These could include support for building service meshes, container networking support, and exposed observability information such as metrics and traces in standard formats like OpenTracing.
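For reference, the three algorithms named above can each be sketched in a few lines. The backend names and function names here are hypothetical, and real implementations add weights, health checks, and handling for pool changes.

```python
import hashlib
import random
from itertools import cycle

# Hypothetical backend pool.
BACKENDS = ["app-1", "app-2", "app-3"]

_rotation = cycle(BACKENDS)


def round_robin(client_ip):
    # Ignores the client; hands out backends in a fixed rotation.
    return next(_rotation)


def ip_hash(client_ip):
    # The same client IP maps to the same backend while the pool is unchanged.
    digest = hashlib.sha256(client_ip.encode()).digest()
    return BACKENDS[int.from_bytes(digest[:8], "big") % len(BACKENDS)]


def randomized(client_ip, rng=random):
    # Uniform random choice; spreads load evenly on average.
    return rng.choice(BACKENDS)


# IP hashing is deterministic, so this prints True.
print(ip_hash("198.51.100.23") == ip_hash("198.51.100.23"))
```

Since all three share the same signature, swapping one for another is trivial, which is why the surrounding capabilities tend to matter more than the algorithm when comparing load balancers.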

If you're looking for an internal tooling platform to help manage your new load balancer, try using Airplane. With Airplane, you can transform scripts, queries, and more into custom workflows and UIs. The basic building blocks of Airplane are Tasks, which are single or multi-step functions that anyone on your team can use. Airplane also offers Views, a React-based platform to build custom UIs.

To get started, sign up for a free account or book a demo.