As a Kubernetes developer, you’ve likely encountered the issue of pods being unexpectedly evicted. For users not familiar with the resource-management side of Kubernetes, troubleshooting and resolving the issue behind the eviction can be a daunting prospect.

Let’s take a look at what causes Kubernetes pod evictions, and then run through some examples and tips for troubleshooting these resource issues.

What causes pod evictions?

In Kubernetes, the most important resources used by pods are CPU, memory, and disk I/O. These can be divided into compressible resources (CPU) and incompressible resources (memory and disk I/O). Compressible resources can’t cause pods to be evicted, because when a pod’s CPU usage is high the system can throttle it by reassigning CPU weights.

Incompressible resources, on the other hand, can’t be throttled: once a node runs out of memory, for example, there is simply nothing left to allocate. So Kubernetes evicts a certain number of pods from the node to free up enough resources.

When incompressible resources run low, Kubernetes uses the kubelet to evict pods. Each compute node’s kubelet monitors the node’s resource usage by collecting cAdvisor metrics. If you set the right parameters when deploying an application and know all the possibilities, you can better control your cluster.

What is a pod eviction?

Pod eviction is a built-in Kubernetes mechanism that removes pods from a node in certain scenarios, such as when a node is NotReady or runs short of resources. There are two eviction mechanisms in Kubernetes:

- kube-controller-manager: Periodically checks the status of all nodes and evicts all pods on the node when the node is in NotReady state for more than a certain period.

- kubelet: Periodically checks the resources of the node and evicts some pods according to their priority when resources are insufficient.

Kube-controller-manager triggered eviction

kube-controller-manager checks the node status periodically. Whenever a node has been NotReady for longer than podEvictionTimeout, all pods on the node are evicted so that they can be rebuilt on other nodes. The actual eviction rate also depends on the eviction rate parameters, the cluster size, and so on.

The following startup parameters are provided to control eviction:

- pod-eviction-timeout: How long a node may stay NotReady before its pods are evicted. The default is five minutes.

- node-eviction-rate: The rate at which nodes have their pods evicted.

- secondary-node-eviction-rate: The reduced eviction rate that applies when too many nodes in a zone are down.

- unhealthy-zone-threshold: The share of down nodes at which a zone is considered unhealthy. The default is 55 percent.

- large-cluster-size-threshold: Determines whether the cluster is large. A cluster with over 50 (default) nodes is a large cluster.
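How these parameters interact can be sketched in a few lines of Python. This is a simplified model based on the documented defaults, not the controller’s actual code: the function name effective_eviction_rate and its arguments are made up for illustration, and the 0.1 and 0.01 rates are assumed to be the upstream defaults for node-eviction-rate and secondary-node-eviction-rate.

```python
def effective_eviction_rate(
    cluster_size: int,
    not_ready_in_zone: int,
    nodes_in_zone: int,
    node_eviction_rate: float = 0.1,             # nodes per second (assumed default)
    secondary_node_eviction_rate: float = 0.01,  # nodes per second (assumed default)
    unhealthy_zone_threshold: float = 0.55,
    large_cluster_size_threshold: int = 50,
) -> float:
    """Return the eviction rate (nodes per second) applied to one zone.

    Simplified illustration: the real node controller tracks more state.
    """
    if nodes_in_zone <= 0:
        raise ValueError("nodes_in_zone must be positive")

    unhealthy_fraction = not_ready_in_zone / nodes_in_zone
    if unhealthy_fraction < unhealthy_zone_threshold:
        # Healthy zone: evict at the normal rate.
        return node_eviction_rate
    if cluster_size <= large_cluster_size_threshold:
        # Unhealthy zone in a small cluster: evictions are stopped.
        return 0.0
    # Unhealthy zone in a large cluster: evict at the reduced rate.
    return secondary_node_eviction_rate


# A small cluster with most of a zone down stops evicting altogether.
print(effective_eviction_rate(cluster_size=30, not_ready_in_zone=6, nodes_in_zone=10))
```

The point of the sketch is that a widespread outage slows evictions down or stops them, on the assumption that the control plane, not the nodes, may be the part that has lost connectivity.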

Kubelet triggered eviction

kubelet periodically checks the memory and disk resources of the node. When the available amount of a resource falls below its threshold, pods are evicted in priority order. The specific resources checked are:

- memory.available

- nodefs.available

- nodefs.inodesFree

- imagefs.available

- imagefs.inodesFree

As an example, when available memory falls below the threshold, pods are evicted roughly in the order BestEffort, then Burstable, then Guaranteed, though the exact order may be adjusted based on actual usage.
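The ordering can be illustrated with a small sort. This is a sketch of the ranking the kubelet documents for memory pressure (pods using more than they requested first, then lower pod priority, then the largest overshoot), not the kubelet’s own code. The PodUsage class and the sample pods are hypothetical.

```python
from dataclasses import dataclass

MIB = 1024 * 1024


@dataclass
class PodUsage:
    name: str
    priority: int        # pod priority; lower values are evicted earlier
    memory_request: int  # bytes requested (0 for BestEffort pods)
    memory_usage: int    # bytes currently in use


def eviction_order(pods):
    """Sort pods from first-evicted to last-evicted under memory pressure."""
    def key(pod):
        overshoot = pod.memory_usage - pod.memory_request
        return (
            overshoot <= 0,  # pods above their request sort first (False < True)
            pod.priority,    # then lowest priority first
            -overshoot,      # then largest overshoot first
        )
    return sorted(pods, key=key)


pods = [
    PodUsage("db", priority=0, memory_request=512 * MIB, memory_usage=400 * MIB),
    PodUsage("web", priority=0, memory_request=200 * MIB, memory_usage=400 * MIB),
    PodUsage("batch-job", priority=0, memory_request=0, memory_usage=300 * MIB),
]
print([pod.name for pod in eviction_order(pods)])
```

Because a BestEffort pod requests nothing, any usage at all counts as overshoot, which is why such pods tend to go first.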

When an eviction occurs, kubelet supports two modes:

- soft: Eviction after a grace period.

- hard: Eviction immediately.
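The difference between the two modes can be sketched as a single decision function. This is a minimal model, assuming the thresholds are configured through the kubelet’s eviction-hard, eviction-soft, and eviction-soft-grace-period settings. The function should_evict and its parameters are hypothetical names used only for illustration.

```python
def should_evict(
    available: float,
    threshold: float,
    hard: bool,
    seconds_below: float = 0.0,
    grace_period: float = 0.0,
) -> bool:
    """Decide whether one eviction threshold triggers an eviction.

    available and threshold use the same unit (for example MiB).
    seconds_below is how long the signal has been under the threshold.
    """
    if available >= threshold:
        return False  # threshold not crossed
    if hard:
        return True   # hard threshold: evict immediately
    # Soft threshold: evict only once the grace period has elapsed.
    return seconds_below >= grace_period


# Soft threshold of 100 MiB with a 90-second grace period:
print(should_evict(80, 100, hard=False, seconds_below=30, grace_period=90))
print(should_evict(80, 100, hard=False, seconds_below=120, grace_period=90))
```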

Why understanding pod evictions is important

Understanding the mechanisms behind your pod evictions is essential if you’re going to resolve resource issues quickly and prevent them from recurring.

For kubelet-initiated evictions, which are often caused by insufficient resources, priority is given to evicting BestEffort-type containers. Those are mostly offline batch operations with low reliability requirements. After the eviction, resources are released to relieve the pressure on the node, and the other containers on the node are protected by sacrificing the low-priority ones. A nice feature, both by design and in practice.

For evictions initiated by kube-controller-manager, the effect is questionable. Normally, the compute node reports its heartbeat to the master periodically, and if the heartbeat times out, the compute node is considered NotReady. kube-controller-manager initiates an eviction when the NotReady state lasts longer than the configured timeout.

However, many scenarios can cause heartbeat timeouts, such as:

- Native bugs: The kubelet process is completely blocked.

- Mistakes: The kubelet is stopped by accident.

- Infrastructure anomalies: Switch failures, NTP anomalies, DNS anomalies, etc.

- Node failures: Hardware damage, power loss, etc.

From a practical point of view, the probability of a heartbeat timeout caused by a real compute node failure is very low. Instead, the probability of a heartbeat timeout caused by native bugs or infrastructure anomalies is much higher, resulting in unnecessary evictions.

Ideally, evictions have little impact on stateless, well-designed applications. But not all applications are stateless, and not all of them are optimized for Kubernetes. For example, if a stateful application has no shared storage, a pod rebuilt on another node loses all its data; even if no data is lost, a double write can badly corrupt the data of MySQL-like applications.

For services that care about the IP layer, the IP of the pod often changes after it is rebuilt on another node. Although some applications can use Services and DNS to solve the problem, doing so introduces additional modules and complexity.

Unless your workloads meet these requirements (stateless, and able to tolerate being rebuilt on another node with a new IP), try to turn off the eviction feature of kube-controller-manager: set the eviction timeout to a very long value, and set both node-eviction-rate and secondary-node-eviction-rate to 0. Otherwise, it’s very easy to cause failures large and small, a lesson learned the hard way.

Pod evictions troubleshooting process

Let’s imagine a hypothetical scenario: a normal Kubernetes cluster with three worker nodes, Kubernetes version v1.19.0. Some pods running on worker 1 are found to be evicted.

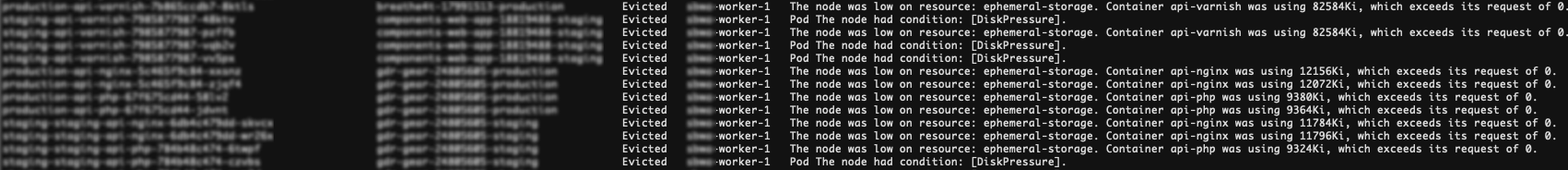

From the previous screenshot, you can see that many pods are evicted and the error message is clear: The node was low on resource: ephemeral-storage. There isn’t enough local storage on the node, causing the kubelet to trigger the eviction process. See the Kubernetes documentation on node-pressure eviction for an introduction.

Let’s tackle some troubleshooting tips for this scenario.

Enable automatic scaling

Either manually add a worker to the cluster or deploy cluster-autoscaler to automatically scale according to the set conditions. Of course, you can also just increase the local storage space of the worker, which involves resizing the VM and will cause the worker node to be temporarily unavailable.

Protect some critical apps

Specify resource requests and limits in their manifests so that the pods get the Guaranteed QoS class, which requires every container’s CPU and memory requests to equal its limits. These pods are then the last candidates when the kubelet triggers an eviction.
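The rules that decide a pod’s QoS class can be sketched as follows. This is a simplified illustration, not the Kubernetes implementation: the function qos_class is hypothetical, it looks only at CPU and memory, and it compares quantity strings literally, so it would treat 500m and 0.5 as different values.

```python
def qos_class(containers):
    """Classify a pod from its containers' resource settings.

    containers is a list of dicts with optional "requests" and "limits"
    maps, mirroring the resources section of a container spec.
    """
    any_set = False
    guaranteed = True
    for container in containers:
        limits = container.get("limits", {})
        # A missing request defaults to the limit for that resource.
        requests = {**limits, **container.get("requests", {})}
        for resource in ("cpu", "memory"):
            if resource in requests:
                any_set = True
            if resource not in limits or requests[resource] != limits[resource]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


critical_app = [{
    "requests": {"cpu": "500m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}]
print(qos_class(critical_app))
```

Note that a single container without matching requests and limits is enough to drop the whole pod to Burstable.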

The above measures ensure the availability of some critical applications to some extent. If pods are not evicted even though the node is faulty, you’ll need to walk through a few more steps to find the fault.

Running kubectl get pods shows that many pods have the Evicted status. The details of each eviction are recorded in the node’s kubelet logs.

To find the information, run cat /var/paas/sys/log/kubernetes/kubelet.log | grep -i Evicted -C3.

Check the pod’s tolerations

Use the tolerationSeconds property of a toleration to specify how long a pod stays bound to a failed or unresponsive node. It is set on the pod’s tolerations for taints such as node.kubernetes.io/not-ready and node.kubernetes.io/unreachable.
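As an illustration, here is a toleration written as a Python dictionary with the same fields it would have in a pod spec, together with a sketch of how it translates into a delay. The 6000-second value is only an example, and the helper seconds_until_eviction is hypothetical. It ignores taint values and returns the first matching toleration, which is simpler than what Kubernetes does.

```python
from typing import Optional

pod_tolerations = [
    {
        "key": "node.kubernetes.io/unreachable",
        "operator": "Exists",
        "effect": "NoExecute",
        "tolerationSeconds": 6000,  # example value
    },
]


def seconds_until_eviction(
    tolerations, taint_key: str, taint_effect: str = "NoExecute"
) -> Optional[int]:
    """Return how long a pod stays on a node carrying the given taint.

    0 means the pod is evicted right away (no matching toleration),
    None means it is never evicted (toleration without tolerationSeconds).
    """
    for toleration in tolerations:
        key = toleration.get("key")
        effect = toleration.get("effect")
        # An empty key with operator Exists tolerates every taint key.
        key_ok = key == taint_key or (
            key is None and toleration.get("operator") == "Exists"
        )
        # An empty effect tolerates every effect.
        effect_ok = effect is None or effect == taint_effect
        if key_ok and effect_ok:
            return toleration.get("tolerationSeconds")
    return 0


print(seconds_until_eviction(pod_tolerations, "node.kubernetes.io/unreachable"))
```

If pods stay on a failed node far longer than expected, an overly large tolerationSeconds, or a toleration with no tolerationSeconds at all, is one thing to look for.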

Examine conditions for preventing pod eviction

Pod eviction is suspended if the cluster has no more than 50 nodes and faulty nodes make up at least 55 percent of the total. Otherwise, Kubernetes will try to evict the workloads of the malfunctioning node.

A healthy node reports a Ready condition with the status True in the conditions list of its status.

The node controller performs API-initiated eviction for all pods allocated to that node if the Ready condition status stays Unknown or False for longer than the pod-eviction-timeout (an input supplied to the kube-controller-manager).
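The check described here can be sketched against the conditions a node reports. The node objects below are trimmed-down, illustrative versions of what kubectl get node -o json returns, with made-up timestamps. The helpers parse_time and eviction_due are hypothetical, and the five-minute default mirrors pod-eviction-timeout.

```python
from datetime import datetime, timedelta, timezone

healthy_node = {
    "status": {
        "conditions": [
            {
                "type": "Ready",
                "status": "True",
                "reason": "KubeletReady",
                "message": "kubelet is posting ready status",
                "lastHeartbeatTime": "2019-06-05T18:38:35Z",
                "lastTransitionTime": "2019-06-05T11:41:27Z",
            }
        ]
    }
}

unreachable_node = {
    "status": {
        "conditions": [
            {
                "type": "Ready",
                "status": "Unknown",
                "lastTransitionTime": "2019-06-05T11:41:27Z",
            }
        ]
    }
}


def parse_time(value: str) -> datetime:
    """Parse a UTC timestamp such as 2019-06-05T11:41:27Z."""
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def eviction_due(node, now, timeout=timedelta(minutes=5)) -> bool:
    """True if Ready has been False or Unknown for longer than timeout."""
    for condition in node["status"]["conditions"]:
        if condition["type"] == "Ready":
            if condition["status"] == "True":
                return False
            not_ready_for = now - parse_time(condition["lastTransitionTime"])
            return not_ready_for > timeout
    return False  # no Ready condition reported; this sketch makes no decision


print(eviction_due(unreachable_node, parse_time("2019-06-05T11:50:00Z")))
```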

Verify the container’s allocated resources

A node evicts containers based on its actual resource usage, whereas the scheduler places them based on the resources already allocated (requested) on the node. Because eviction and scheduling follow different rules, an evicted container may be rescheduled to the original node. To avoid this, allocate resources to each container properly.
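A small numeric sketch shows how the two rules can disagree. The functions fits_by_requests and under_memory_pressure are hypothetical and deliberately crude: they use a single memory figure for the node and ignore the difference between capacity and allocatable.

```python
def fits_by_requests(node_memory, requests_on_node, new_request) -> bool:
    """Scheduling view: only the sum of requests matters."""
    return sum(requests_on_node) + new_request <= node_memory


def under_memory_pressure(node_memory, usage_on_node, eviction_threshold) -> bool:
    """Eviction view: only actual usage matters."""
    available = node_memory - sum(usage_on_node)
    return available < eviction_threshold


# All values in MiB. Two pods request 1 GiB each but use about 4 GiB each.
node_memory = 8192
requests = [1024, 1024]
usage = [4000, 4100]

print(under_memory_pressure(node_memory, usage, eviction_threshold=100))
print(fits_by_requests(node_memory, requests, new_request=1024))
```

In this example the node is under pressure and evicts a pod, yet by requests alone it still has room, so the scheduler may place the evicted pod right back on it. Requests that reflect real usage close that gap.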

Check if the pod fails regularly

If a pod is evicted and rescheduled to a new node where pods are also being evicted, the pod will be evicted again. Pods can be evicted several times.

A pod is left in the Terminating state if the eviction is triggered by kube-controller-manager. The pod is automatically destroyed after the node is restored. You can destroy the pod forcibly if the node has been deleted or cannot be restored for other reasons.

If kubelet triggers the eviction, a pod will be left in the Evicted state. It is solely used to locate later faults and can be deleted directly.

To delete the evicted pods, you can run, for example, kubectl get pods | grep Evicted | awk '{print $1}' | xargs kubectl delete pod.
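One way to script the cleanup is sketched below in Python. It assumes the input is the JSON printed by kubectl get pods --all-namespaces -o json and that evicted pods appear with phase Failed and reason Evicted. The function delete_commands and the sample pods are hypothetical, and the function only builds the commands rather than running them.

```python
import json


def delete_commands(pod_list_json: str):
    """Build one kubectl delete command per evicted pod."""
    commands = []
    for pod in json.loads(pod_list_json)["items"]:
        status = pod.get("status", {})
        if status.get("phase") == "Failed" and status.get("reason") == "Evicted":
            meta = pod["metadata"]
            commands.append([
                "kubectl", "delete", "pod", meta["name"],
                "--namespace", meta["namespace"],
            ])
    return commands


# Made-up sample in the shape of a pod list.
sample = json.dumps({
    "items": [
        {
            "metadata": {"name": "web-1", "namespace": "default"},
            "status": {"phase": "Running"},
        },
        {
            "metadata": {"name": "batch-7", "namespace": "jobs"},
            "status": {
                "phase": "Failed",
                "reason": "Evicted",
                "message": "The node was low on resource: ephemeral-storage.",
            },
        },
    ]
})

for command in delete_commands(sample):
    print(" ".join(command))
```

Each returned list can be passed to subprocess.run once you have reviewed what would be deleted.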

When Kubernetes evicts a pod, it does not recreate that pod by itself. If you want the pod to be recreated, manage it with a controller such as a ReplicationController, ReplicaSet, or Deployment: when a pod goes missing, the controller creates a replacement. A pod that you create directly is simply deleted and will not be rebuilt.

Conclusion

With a better understanding of how and when pods are evicted, it’s simpler to balance your cluster. Specify the correct parameters for your components, learn the options available, and understand your workloads, and you’ll be able to manage your Kubernetes resources effectively.
