Over the years, containerization has helped a number of companies achieve workload portability for their applications. However, containers on their own do not automate orchestration tasks such as scheduling, scaling, and recovering from failures. This gap is what made room for Kubernetes.

Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containers and their underlying infrastructure, improving availability and scalability. The Kubernetes architecture consists of three main planes:

- The control plane. The brain behind all the operations of a Kubernetes cluster. Its core components include the API server, the scheduler, and the controller manager.

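- The data plane. The storage or memory for your Kubernetes cluster. A common implementation is a highly available etcd cluster that stores all of your cluster’s configuration and state. Note that the official Kubernetes documentation lists etcd as a control plane component, and many sources use "data plane" to mean the worker nodes instead.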

- The worker plane. Responsible for running the actual workloads based on the declared configurations sent to the API server in the control plane.

Together, these planes make up a Kubernetes cluster. All three planes can run on a single device such as a Raspberry Pi, or be distributed across multiple VMs, either in the cloud (for example, with GKE, EKS, or AKS) or in an on-premises data center.

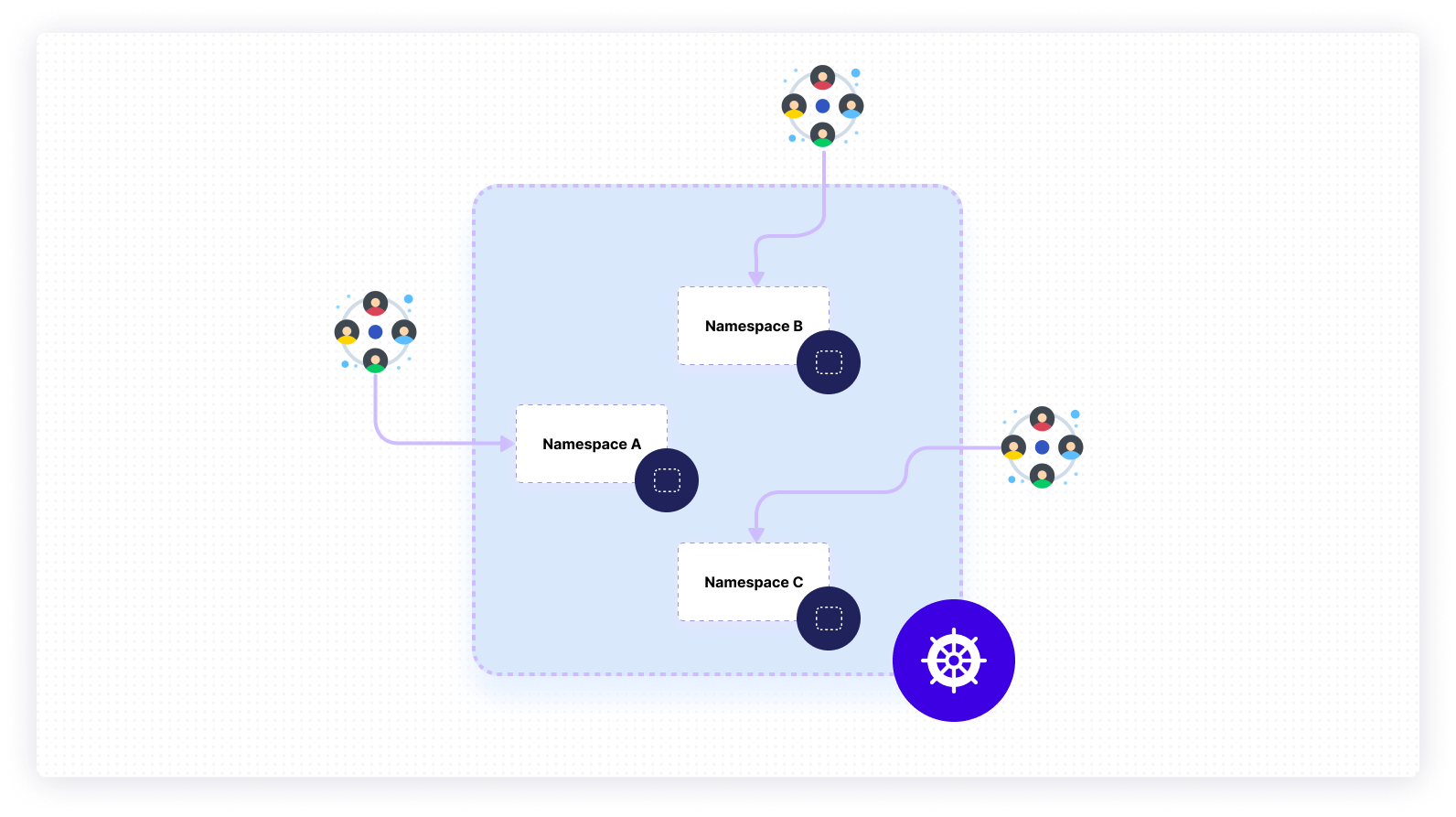

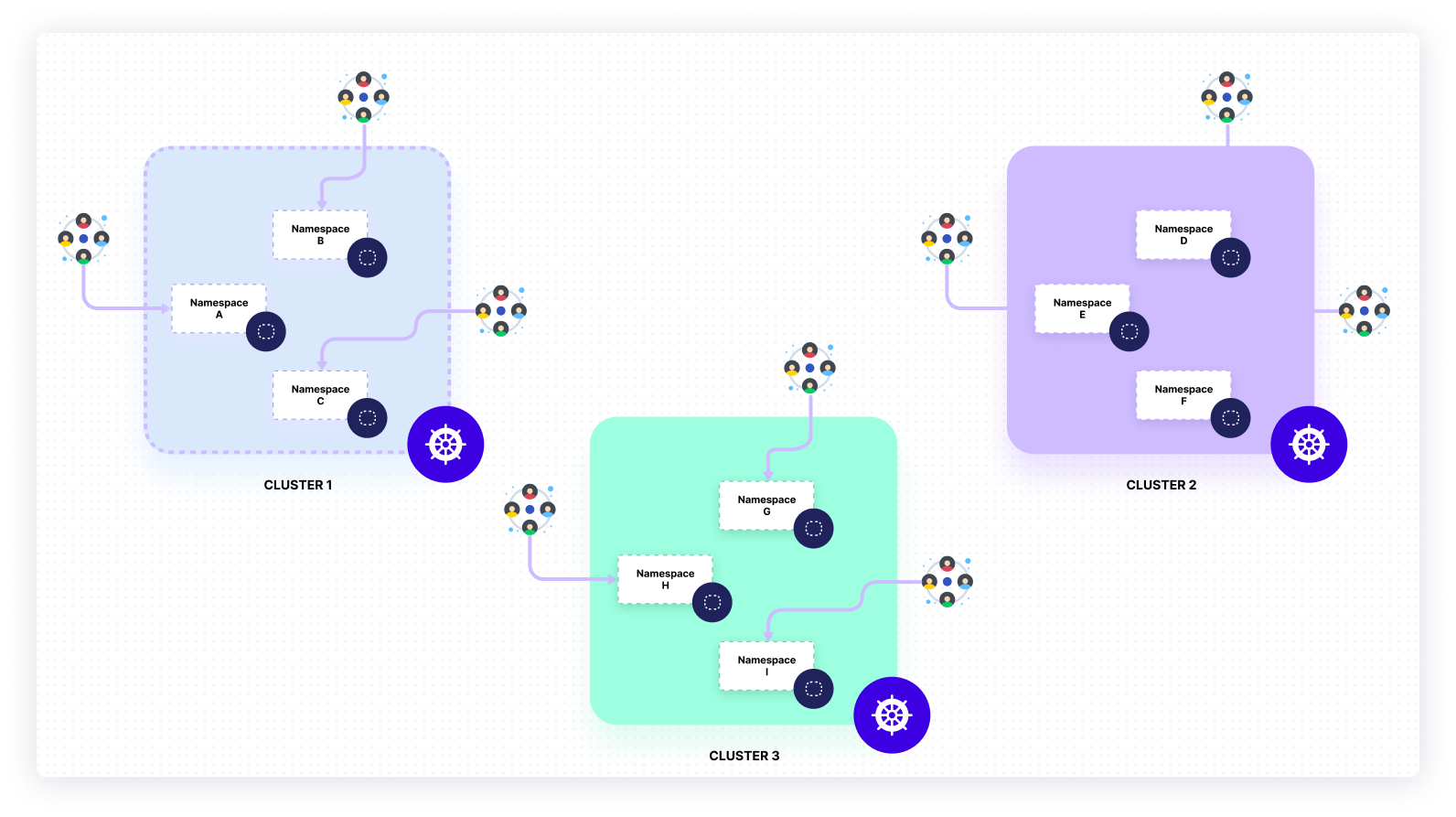

Managing a single Kubernetes cluster is a complex task on its own, yet there are instances where your architecture may consist of multiple clusters. The cluster model you opt for will depend on a number of requirements specific to your organization’s objectives.

This article explores how five factors (developer experience, scalability, security, resilience, and cost) can provide a framework for deciding between a single cluster and a multi-cluster approach.

The developer experience

Kubernetes application development involves a lot of configuration management with manifest files and Helm charts. Deploying an application to a Kubernetes cluster typically means working with multiple resources such as Pods, Deployments, Services, Ingresses, ConfigMaps, Secrets, and more.

Each of these plays a specific role in how the application is deployed, how it behaves in a given environment, and how it’s exposed from a networking perspective. Software teams may also deploy their applications through GitOps, a declarative workflow in which the desired state lives in a Git repository and a controller continuously reconciles the cluster against it.
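To make the resources listed above more concrete, here is a minimal sketch in Python that builds a Deployment and a matching Service as plain dictionaries and prints them as JSON, a format `kubectl apply -f -` accepts. The app name `web`, the image `nginx:1.25`, and the port numbers are placeholder assumptions, not values from any particular setup.

```python
import json


def deployment_and_service(name: str, image: str, port: int, replicas: int = 2) -> dict:
    """Return a v1 List holding a Deployment and a Service for one app (illustrative only)."""
    labels = {"app": name}  # shared label ties the Service to the Deployment's pods
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": labels,  # routes traffic to pods carrying these labels
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    # Placeholder values; the output can be piped into `kubectl apply -f -`.
    print(json.dumps(deployment_and_service("web", "nginx:1.25", 80), indent=2))
```

Even this small example shows why configuration management grows quickly: an Ingress, ConfigMap, or Secret for the same app would each add another object to keep in sync.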

Single cluster

Working with a single cluster generally means easier management of user authentication, Kubernetes version upgrades, cluster visibility, node management, and application deployments with CI/CD.

Security, however, is a serious concern, because workloads are not isolated from each other by default. Developers have to do more work to keep workloads sufficiently separate, and their access is usually restricted more tightly with RBAC (role-based access control) so that they cannot affect other critical workloads on the same cluster.

Multiple clusters

A multi-cluster model provides stricter isolation at the cluster level by default. This gives software teams more freedom within the specific clusters they have access to. Teams also get a better developer experience from a resource utilization perspective, because fewer workloads compete for the same resources. And because each cluster can be sized and scaled for its own workloads, developers run into fewer scaling bottlenecks.

On the other hand, managing multiple Kubernetes clusters does introduce additional management overhead in a number of areas. Software teams have to ensure alignment and consistency in user authentication and authorization, Kubernetes cluster versions, security standards and policies, workload deployments, and cluster visibility such as monitoring and logging.
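One of the consistency checks mentioned above, keeping Kubernetes versions aligned across clusters, can be sketched as a small Python function. It assumes you have already collected each cluster's server version string (for example, by running `kubectl version` against each context); the cluster names and versions below are hypothetical.

```python
def parse_minor(version: str) -> tuple[int, int]:
    """Turn a version string like 'v1.29.4' or 'v1.29.4-gke.100' into (major, minor)."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def lagging_clusters(versions: dict[str, str], max_minor_skew: int = 0) -> list[str]:
    """Return clusters whose minor version trails the newest cluster by more than max_minor_skew."""
    parsed = {name: parse_minor(v) for name, v in versions.items()}
    newest = max(parsed.values())
    return sorted(
        name
        for name, (major, minor) in parsed.items()
        if major != newest[0] or newest[1] - minor > max_minor_skew
    )


if __name__ == "__main__":
    # Hypothetical fleet; real versions would come from each cluster's API server.
    fleet = {"team-a-prod": "v1.29.4", "team-b-prod": "v1.28.9", "team-c-prod": "v1.29.1"}
    print(lagging_clusters(fleet))  # ['team-b-prod']
```

Comparing only major and minor versions is a deliberate simplification; patch-level drift is usually less significant, but the same approach could be extended to it.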

Scalability

Scaling applications and their infrastructure in Kubernetes is handled by three mechanisms, each with its own purpose, and they are often used together:

- The Horizontal Pod Autoscaler. Scales applications out or in (increases or decreases the number of pod replicas) based on observed metrics such as CPU utilization.

- The Vertical Pod Autoscaler. Scales applications up or down (increases or decreases the CPU or memory of pods) by recommending resource allocations and optionally applying them to a given deployment. Unlike the Horizontal Pod Autoscaler, it is installed separately as an add-on.

- The Cluster Autoscaler. Increases or decreases the size of your cluster by modifying the number of nodes in your node pools based on the presence of pending pods and node utilization metrics.
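The Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below reproduces that formula, together with the default 10% tolerance and the minimum and maximum replica bounds set on an HPA. It deliberately ignores details such as stabilization windows and pod readiness handling, so treat it as an illustration rather than the controller's actual code.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA replica calculation for a single metric."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the HorizontalPodAutoscaler object.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6 pods.
    print(desired_replicas(4, 90, 60))  # 6
```

The tolerance band is what keeps the autoscaler from flapping between replica counts when the metric hovers close to its target.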

Single cluster

A single cluster configured with these autoscalers can meet scaling requirements up to a point. However, all workloads share the same control plane and compete for the same pool of nodes, so every scaling event adds load to one cluster, and that cluster eventually runs into the upper limits Kubernetes is designed to support.
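To make the limits of a single cluster more concrete: the Kubernetes documentation's "Considerations for large clusters" page says Kubernetes is designed for configurations with no more than 110 pods per node, 5,000 nodes, 150,000 total pods, and 300,000 total containers. The Python sketch below checks a hypothetical workload plan against those thresholds. In practice, IP address space, API server load, or cloud quotas can become bottlenecks well before these numbers, so treat it as a rough first filter.

```python
import math

# Upper bounds from the Kubernetes "Considerations for large clusters" page.
MAX_PODS_PER_NODE = 110
MAX_NODES = 5_000
MAX_TOTAL_PODS = 150_000
MAX_TOTAL_CONTAINERS = 300_000


def exceeded_limits(total_pods: int, containers_per_pod: int, pods_per_node: int) -> list[str]:
    """Return the documented limits a planned workload would exceed (empty list if none)."""
    problems = []
    if pods_per_node > MAX_PODS_PER_NODE:
        problems.append("pods per node")
    nodes_needed = math.ceil(total_pods / pods_per_node)
    if nodes_needed > MAX_NODES:
        problems.append("node count")
    if total_pods > MAX_TOTAL_PODS:
        problems.append("total pods")
    if total_pods * containers_per_pod > MAX_TOTAL_CONTAINERS:
        problems.append("total containers")
    return problems


if __name__ == "__main__":
    # Hypothetical plans: a mid-sized platform and one that outgrows a single cluster.
    print(exceeded_limits(40_000, 2, 30))   # []
    print(exceeded_limits(200_000, 2, 50))  # ['total pods', 'total containers']
```

A non-empty result is a strong hint that the workload needs to be split across several clusters.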

Multiple clusters

In contrast, having multiple Kubernetes clusters means that workloads can be distributed across clusters that each scale according to their own load. A multi-cluster model also lets software teams distribute an application across different regions, which greatly increases its availability: an outage in one region no longer takes down the whole application.
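The availability gain from spreading an application across regions can be estimated with a simple model, under strong assumptions: each regional cluster is available with probability a, failures are independent, and any one healthy cluster can serve all traffic. The application is then down only when every cluster is down at once, so its availability is 1 - (1 - a)^n for n clusters, meaning the chance of a total outage shrinks geometrically as regions are added. Real outages are often correlated (a shared DNS provider or a global configuration push, for example), so this Python sketch gives an optimistic estimate, and the 99.5% figure is an arbitrary example.

```python
def combined_availability(per_cluster: list[float]) -> float:
    """Availability when any single healthy cluster can serve all traffic.

    Assumes cluster failures are independent, which is optimistic in practice.
    """
    all_down = 1.0
    for a in per_cluster:
        all_down *= 1.0 - a  # probability that this cluster is down as well
    return 1.0 - all_down


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, f"{combined_availability([0.995] * n):.7f}")
```

With two clusters at 99.5% each, the model gives 1 - 0.005^2 = 99.9975%, which is why even a second region makes a large difference on paper.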

Security

Single cluster

Running your workloads on a single or shared Kubernetes cluster can present a number of security risks.

By default, the Kubernetes networking model gives every pod its own IP address and allows all pods to send and receive traffic to and from each other, regardless of the node they’ve been scheduled on or the namespace they belong to. Unless you restrict this, unrelated applications can reach each other over the network.

Even if the cluster hosts only a single application, running its environments (for example, development, staging, and production) as namespaces in the same cluster means a problem in a non-production namespace can still pose a serious threat to production.

Also, the more users you have accessing a single cluster, the greater the risk that an individual or team makes a misconfiguration that impacts the whole cluster.

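To achieve some measure of isolation between your workloads, you will have to use Pod Security Admission (the built-in replacement for pod security policies, which were removed in Kubernetes 1.25) to restrict what pods are allowed to do, and network policies to control traffic between pods.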
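As a minimal sketch of that isolation, the Python snippet below emits a namespace labeled for Pod Security Admission's `restricted` profile (Pod Security Admission being the built-in successor to PodSecurityPolicy) and a NetworkPolicy with an empty `podSelector`, which selects every pod in the namespace and, with no allow rules listed, denies all ingress and egress traffic. The namespace name `team-a` is a placeholder, and network policies only take effect if the cluster's CNI plugin enforces them.

```python
import json


def isolated_namespace(namespace: str) -> dict:
    """Build a Namespace with a Pod Security Admission label plus a default-deny NetworkPolicy."""
    ns = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": namespace,
            "labels": {
                # Reject pods that violate the "restricted" Pod Security Standard.
                "pod-security.kubernetes.io/enforce": "restricted",
            },
        },
    }
    deny_all = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector = every pod in the namespace
            "policyTypes": ["Ingress", "Egress"],  # no rules listed, so nothing is allowed
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [ns, deny_all]}


if __name__ == "__main__":
    # Placeholder namespace; pipe the output into `kubectl apply -f -`.
    print(json.dumps(isolated_namespace("team-a"), indent=2))
```

Denying all egress also blocks DNS lookups, so in practice you would layer narrower allow policies (for example, egress to the cluster DNS service) on top of this baseline.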

You can also use RBAC to control the permissions of users accessing the cluster and, through service accounts, of the pods running on it.
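A namespace-scoped Role and RoleBinding is a common way to give a team rights in its own namespace without cluster-wide access. The Python sketch below builds both objects as JSON-serializable dictionaries; the namespace, group name, role name, and the chosen resources and verbs are hypothetical and would need to match your own access model.

```python
import json


def team_rbac(namespace: str, group: str) -> dict:
    """Grant a group day-to-day deployment rights inside one namespace only."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "app-deployer", "namespace": namespace},
        "rules": [
            {
                "apiGroups": ["apps"],
                "resources": ["deployments"],
                "verbs": ["get", "list", "watch", "create", "update", "patch"],
            },
            {
                "apiGroups": [""],  # "" is the core API group (pods, services, configmaps)
                "resources": ["pods", "services", "configmaps"],
                "verbs": ["get", "list", "watch"],
            },
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "app-deployer-binding", "namespace": namespace},
        "subjects": [
            {"kind": "Group", "name": group, "apiGroup": "rbac.authorization.k8s.io"}
        ],
        "roleRef": {
            "kind": "Role",
            "name": role["metadata"]["name"],
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [role, binding]}


if __name__ == "__main__":
    # Hypothetical namespace and group names.
    print(json.dumps(team_rbac("team-a", "team-a-developers"), indent=2))
```

Because these are a Role and RoleBinding rather than a ClusterRole and ClusterRoleBinding, the permissions stop at the namespace boundary. For pods, the same binding could name a ServiceAccount subject instead of a group.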

Multiple clusters

In contrast to a single cluster model, running your workloads across multiple clusters with a cluster per application or cluster per environment paradigm provides a hard level of isolation. The risk of unrelated applications, or applications with multiple environments, interacting with each other in unintended ways is drastically reduced.

Each cluster in a multi-cluster model also has fewer users interacting with it, which reduces the chances of breaking things with cluster-wide ramifications.

Resilience

Single cluster

As you would expect, running all your workloads on a single Kubernetes cluster makes that cluster a single point of failure. Several issues can impact the whole cluster, such as:

- Failure or unavailability of underlying hosts

- Incorrect configuration applied to cluster-wide resources

- Side effects of a failed Kubernetes upgrade

- Failure of a cluster-wide component such as the CNI plugin

Any one of these occurrences can have cluster-wide implications that will affect all running workloads.

Multiple clusters

A multi-cluster model is more resilient because of its distributed architecture. Workloads can be split across multiple regions or sites, which supports continued availability and better recovery when something goes wrong. The blast radius of a misconfiguration or infrastructure issue is limited to a single cluster, so workloads on unaffected clusters remain available.
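The blast-radius argument can be illustrated with a toy model in Python: map each cluster to the workloads it runs, mark some clusters as failed, and see which workloads are still served by at least one healthy cluster. The cluster and workload names are hypothetical, and the model ignores capacity, so it only shows which workloads survive, not whether the remaining clusters can absorb the extra load.

```python
def available_workloads(placement: dict[str, set[str]], failed: set[str]) -> set[str]:
    """Return workloads still running on at least one healthy cluster."""
    return {
        workload
        for cluster, workloads in placement.items()
        if cluster not in failed
        for workload in workloads
    }


if __name__ == "__main__":
    # Hypothetical placement: "checkout" runs in two regions, the others in one.
    placement = {
        "us-east": {"checkout", "search"},
        "eu-west": {"checkout", "reports"},
    }
    print(sorted(available_workloads(placement, failed={"us-east"})))  # ['checkout', 'reports']
```

With a single cluster, marking that one cluster as failed returns an empty set: every workload is inside the blast radius.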

Cost

Single cluster

A single Kubernetes cluster uses resources more efficiently: you run one control plane and one etcd cluster, and worker nodes can be packed more densely because workloads share the same node pools. If you have a single shared cluster, workloads in different namespaces can also share cluster-wide services such as ingress controllers, monitoring, and logging.

Multiple clusters

Multiple clusters incur additional resource overhead compared to operating a single Kubernetes cluster. With this model, your overall architecture will consist of more nodes, which will increase your costs.

Each cluster you run in a highly available setup needs at least two, and commonly three, control plane nodes, plus at least three etcd members so that etcd can keep a majority quorum if one member fails. Your worker plane will consist of a varying number of nodes depending on your workload needs and scaling events. On top of that, you have to account for the additional load balancers, ingresses, and logging and monitoring resources involved in maintaining the scalability, availability, and management of each cluster.

Whether you run your clusters in the cloud or in on-premises data centers, each of these factors adds to your costs.
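etcd uses majority quorum, so a cluster of n members needs floor(n/2) + 1 of them healthy and tolerates floor((n - 1)/2) failures; three members is the smallest size that survives losing one. The Python sketch below combines that arithmetic with an assumed two control plane nodes and three dedicated etcd members per cluster to estimate how many non-worker nodes a fleet of self-managed, highly available clusters needs. The fleet sizes are illustrative assumptions, and managed services such as GKE, EKS, and AKS run the control plane for you and bill for it differently.

```python
def etcd_quorum(members: int) -> int:
    """Members that must be healthy for etcd to keep accepting writes (a strict majority)."""
    return members // 2 + 1


def etcd_fault_tolerance(members: int) -> int:
    """Member failures an etcd cluster can survive while keeping quorum."""
    return (members - 1) // 2


def fleet_overhead_nodes(clusters: int, control_plane: int = 2, etcd: int = 3) -> int:
    """Non-worker nodes needed for a fleet of self-managed HA clusters (workers excluded)."""
    return clusters * (control_plane + etcd)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"{n} etcd members: quorum {etcd_quorum(n)}, tolerates {etcd_fault_tolerance(n)} failure(s)")
    # One shared cluster vs. one cluster per team for four hypothetical teams.
    print(fleet_overhead_nodes(1), fleet_overhead_nodes(4))  # 5 20
```

Four etcd members tolerate no more failures than three, which is why etcd clusters are normally sized with an odd number of members. The fleet calculation also shows how the fixed per-cluster overhead multiplies as clusters are added, before a single worker node is counted.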

Final thoughts

A Kubernetes cluster is a powerful means for ensuring the high availability of your container workloads, but the cluster model you choose to implement should suit the priorities of your particular use case. Single cluster models are a good solution for minimal management overhead and cost efficiency. If, however, resilience, workload isolation, and security are more important factors, then having a multi-cluster model would be more suitable for you.

If you're looking to build a dashboard to monitor your Kubernetes clusters in an efficient manner, then take a look at Airplane. Airplane is the developer platform for building custom internal tools. Airplane is a performant maintenance-free platform that makes it easy to build custom UIs that can help you manage your clusters in real-time. Airplane's engineering workflows solution helps you build powerful internal tools for your most critical engineering-centric use cases.
